ML Training

In this section, we use auto-sklearn to train an ensemble ML model that accurately classifies each tumour row as malignant or benign. Akumen provides a model that performs this task and needs only minor configuration. It takes the following parameters:

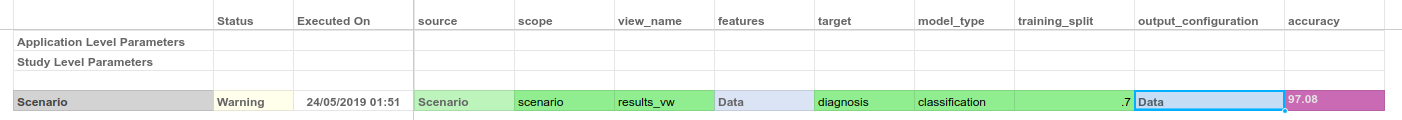

- source: a scenario input pointing to the dataset to train from. For our purposes, this is the ETL model we just wrote.
- view_name: the name of the view to retrieve data from.
- scope: the scope level of the data to retrieve (see previous section).
- features: a JSON configuration section that defines the features to train the model with.
- target: the column whose values you are trying to predict (for classification, the class label).
- model_type: either regression or classification; defines the type of ML to perform.
- training_split: the fraction of input data used to train the model; the remainder is used to test it. The default is 0.7 (70%), which is generally fine.
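To make the constraints in this parameter list concrete, here is a minimal sketch of how the values might be sanity-checked before running a scenario, and how training_split divides the rows. The helpers check_training_params and split_counts are hypothetical and not part of Akumen or auto-sklearn; the trainer performs its own handling, and we assume (without the source confirming it) that the training share is rounded to whole rows. The target-versus-features check is an extra safeguard we add because training on the target column would leak the answer into the model.

```python
import json

# Hypothetical helpers: not part of Akumen or auto-sklearn. They only
# illustrate the constraints described in the parameter list above.
VALID_MODEL_TYPES = {"regression", "classification"}


def check_training_params(params):
    """Validate trainer parameters; return the split fraction and parsed features."""
    if params["model_type"] not in VALID_MODEL_TYPES:
        raise ValueError(f"model_type must be one of {sorted(VALID_MODEL_TYPES)}")
    split = float(params.get("training_split", 0.7))  # documented default: 70%
    if not 0.0 < split < 1.0:
        raise ValueError("training_split must be a fraction between 0 and 1")
    features = json.loads(params["features"])  # features is entered as JSON text
    listed = features.get("numeric", []) + features.get("categorical", [])
    # Extra assumption: listing the target as a feature would leak the label
    if params["target"] in listed:
        raise ValueError("the target column should not also be listed as a feature")
    return split, features


def split_counts(n_rows, training_split):
    """Return (training rows, testing rows), rounding the training share to whole rows."""
    n_train = round(n_rows * training_split)
    return n_train, n_rows - n_train


params = {
    "model_type": "classification",
    "target": "diagnosis",
    "training_split": 0.7,
    "features": '{"numeric": ["radius_mean", "texture_mean"], "categorical": []}',
}
split, features = check_training_params(params)
print(split_counts(100, split))  # (70, 30)
```

With the default split, 100 input rows would give 70 rows for training and 30 held back for testing.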

We provide support for using either the Akumen Document Manager or a third party cloud storage service for saving the exported data. Examples are provided below for performing either method.

Akumen Document Manager

To create an ML training model, you can do the following:

- Go to the App Manager and select Create Application -> Python Model, naming it ML Trainer - Breast Cancer.
- Click the Git Clone button on the toolbar and enter the git URL: https://gitlab.com/optika-solutions/apps/auto-sklearn-trainer-document.git. You can leave the username and password blank; click the refresh button next to branch and make sure the branch is set to master. Click OK.
- Go to the research grid and enter the following:
  - source: point to the ETL model.
  - scope: scenario
  - view_name: results_vw
  - features: see below.
  - target: diagnosis
  - model_type: classification
  - training_split: 0.7

For the features JSON, we list each column under its associated type. A numeric feature is any feature whose values are numbers where both the value and its ordering matter. A categorical feature is one where order is not important but each distinct value is (for example true/false, or colours). String values must be categorical. To ignore a feature, simply exclude it from this list; in any case, the model automatically excludes any features it determines to be irrelevant.

    {
      "numeric": [
        "radius_mean",
        "texture_mean",
        "perimeter_mean",
        "area_mean",
        "smoothness_mean",
        "compactness_mean",
        "concavity_mean",
        "concave points_mean",
        "symmetry_mean",
        "fractal_dimension_mean",
        "radius_se",
        "texture_se",
        "perimeter_se",
        "area_se",
        "smoothness_se",
        "compactness_se",
        "concavity_se",
        "concave points_se",
        "symmetry_se",
        "fractal_dimension_se",
        "radius_worst",
        "texture_worst",
        "perimeter_worst",
        "area_worst",
        "smoothness_worst",
        "compactness_worst",
        "concavity_worst",
        "concave points_worst",
        "symmetry_worst",
        "fractal_dimension_worst"
      ],
      "categorical": []
    }

Then we execute the scenario. Once it completes, you'll notice that the model returns an indicative accuracy, in this case roughly 95-97%.

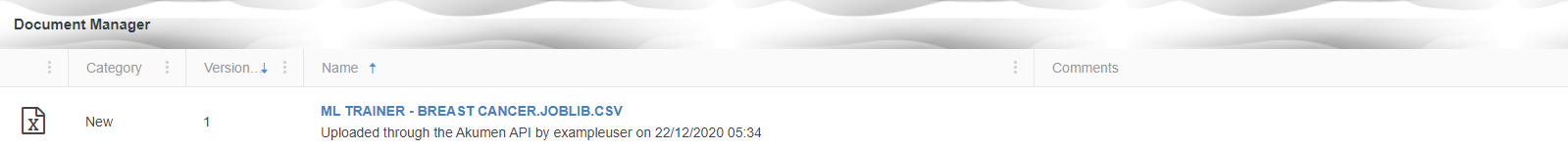
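As a rough guide to reading that figure, the sketch below computes plain classification accuracy: the fraction of held-out test rows whose predicted label matches the actual one. We assume the indicative accuracy is of this kind, measured on the rows held back by training_split; the trainer's exact metric is not stated here. The function holdout_accuracy and the labels are made up for illustration (M and B are used as hypothetical malignant/benign codes).

```python
def holdout_accuracy(actual, predicted):
    """Fraction of held-out rows whose predicted label matches the actual label."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty label lists of equal length")
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


# Made-up labels for illustration only (M = malignant, B = benign)
actual = ["M", "B", "B", "M", "B", "B", "M", "B", "B", "B"]
predicted = ["M", "B", "B", "B", "B", "B", "M", "B", "B", "B"]
print(f"{holdout_accuracy(actual, predicted):.0%}")  # 90%
```

Here one malignant row is misclassified as benign, so 9 of 10 rows are correct. For a medical use case like this one, it is worth looking beyond a single accuracy number at which class the errors fall into.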

If you check the Document Manager, you'll also see your trained model.
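When a dataset has many columns, the numeric/categorical rule described for the features JSON can be applied programmatically to draft the configuration. The helper build_features_config below is hypothetical (not an Akumen function): it inspects a few sample rows, puts a column under numeric only if every value is a number, and puts everything else (strings, booleans, mixed values) under categorical. The sample rows, including the "clinic" column, are invented for illustration.

```python
import json
from numbers import Number


def build_features_config(sample_rows, exclude=()):
    """Draft the features JSON by sorting columns into 'numeric' and 'categorical'.

    A column goes under 'numeric' only if every sampled value is a number;
    anything else (strings, booleans, mixed values) goes under 'categorical'.
    """
    numeric, categorical = [], []
    for column in sample_rows[0]:
        if column in exclude:
            continue  # e.g. the target column or a row identifier
        values = [row[column] for row in sample_rows]
        # bool is a subclass of int in Python, so rule it out explicitly
        if all(isinstance(v, Number) and not isinstance(v, bool) for v in values):
            numeric.append(column)
        else:
            categorical.append(column)
    return {"numeric": numeric, "categorical": categorical}


# Made-up sample rows; "clinic" is a hypothetical string column
rows = [
    {"id": 1, "diagnosis": "M", "radius_mean": 14.2, "texture_mean": 19.1, "clinic": "north"},
    {"id": 2, "diagnosis": "B", "radius_mean": 11.8, "texture_mean": 17.4, "clinic": "south"},
]
print(json.dumps(build_features_config(rows, exclude={"id", "diagnosis"})))
```

This only automates the "strings must be categorical" part of the rule. A column of numeric codes whose order carries no meaning would still land under numeric and should be moved to categorical by hand.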

Third Party Cloud Storage

Alternatively, you may use AWS or Azure to store the model. The steps below are written using an S3 bucket.

Note: You will need to provide your own Amazon S3 bucket to store the model in AWS.

To create an ML training model using an Amazon S3 bucket, you can do the following:

- Go to the App Manager and select Create Application -> Python Model, naming it ML Trainer - Breast Cancer.
- Click the Git Clone button on the toolbar and enter the git URL: https://gitlab.com/optika-solutions/apps/auto-sklearn-trainer.git. You can leave the username and password blank; click the refresh button next to branch and make sure the branch is set to master. Click OK.
- Go to the research grid and enter the following:
  - source: point to the ETL model.
  - scope: scenario
  - view_name: results_vw
  - features: see below.
  - target: diagnosis
  - model_type: classification
  - training_split: 0.7
  - output_configuration: see below.

For features, use the JSON provided above for the previous example.
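Rather than copying the list by hand, note that the 30 numeric feature names follow a regular pattern: each of 10 base measurements appears as a mean, an se (standard error) and a "worst" value. The short sketch below rebuilds the same features JSON from that pattern, in the same order as the listing; the constant names are our own.

```python
import json
from itertools import product

# The 10 base measurements, in the order used by the features JSON
MEASUREMENTS = [
    "radius", "texture", "perimeter", "area", "smoothness",
    "compactness", "concavity", "concave points", "symmetry", "fractal_dimension",
]
# Each measurement appears as a mean, a standard error (se) and a "worst" value
STATISTICS = ["mean", "se", "worst"]

# product() varies the last iterable fastest, so all *_mean names come first
numeric = [f"{m}_{s}" for s, m in product(STATISTICS, MEASUREMENTS)]
features_json = json.dumps({"numeric": numeric, "categorical": []}, indent=2)
print(features_json)
```

Note that "concave points" contains a space, so the generated names keep it exactly as the dataset columns do.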

For the output_configuration JSON below, we specify a storage provider and its credentials (in this case for S3, although Azure Blob Storage is also supported).

Note: The value of bucket must match the name of an existing S3 bucket in AWS. The key and secret values must also be replaced with a valid AWS access key ID and secret access key that have appropriate permissions on the bucket.

    {
      "provider": "s3",
      "bucket": "ds-model-bucket",
      "key": "xxx",
      "secret": "xxx",
      "region": "ap-southeast-2"
    }

Then we execute the scenario. Once it completes, you'll notice that the model returns an indicative accuracy, in this case roughly 95-97%.

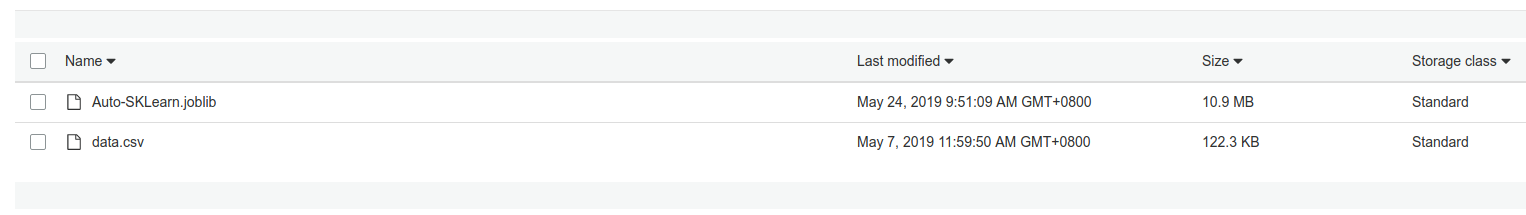

If you check the S3 bucket, you’ll also see your model.
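If you prepare the output_configuration JSON in a script, it helps to avoid hard-coding the key and secret. The sketch below is one way to do that, assuming you keep credentials in the standard AWS environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. The function s3_output_configuration is hypothetical, and its field names simply mirror the S3 example above; Azure Blob Storage settings are not covered.

```python
import json
import os


def s3_output_configuration(bucket, region="ap-southeast-2"):
    """Return output_configuration JSON for S3, reading credentials from the environment.

    Uses the standard AWS variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY
    so the key and secret are not hard-coded in scripts or source control.
    """
    key = os.environ.get("AWS_ACCESS_KEY_ID")
    secret = os.environ.get("AWS_SECRET_ACCESS_KEY")
    if not key or not secret:
        raise RuntimeError("set AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY first")
    config = {
        "provider": "s3",
        "bucket": bucket,  # must be an existing bucket the key can write to
        "key": key,
        "secret": secret,
        "region": region,
    }
    return json.dumps(config, indent=2)
```

Calling s3_output_configuration("ds-model-bucket") returns JSON you can paste into the output_configuration cell. The secret still ends up stored in the scenario input, so this only keeps it out of files you write; a key with permissions limited to the target bucket reduces the exposure.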