Akumen Basics

Learning the Ropes

This chapter will guide you through the basics of the Akumen layout.

This chapter will guide you through the basics of the Akumen layout.

The Akumen main page consists of three sections:

Click the plus icon

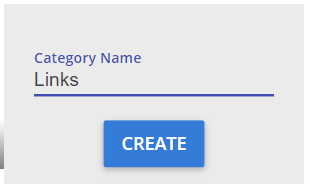

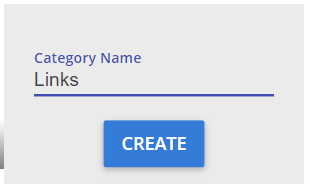

Type in your category name and click “CREATE”

Type in your category name and click “CREATE”

When hovering over a category, there are 4 icons that appear in the top right corner

These icons are:

To change the order that categories appear on the dashboard:

The sidebar contains nine links:

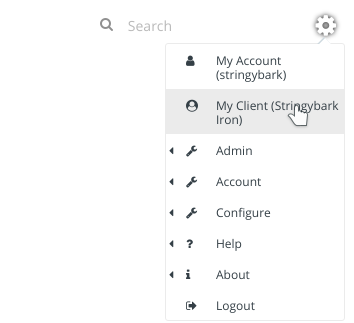

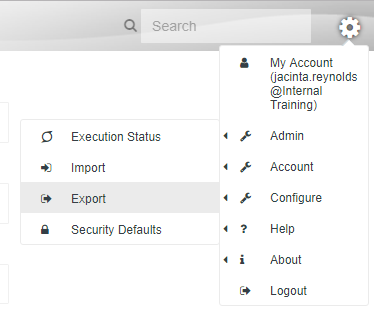

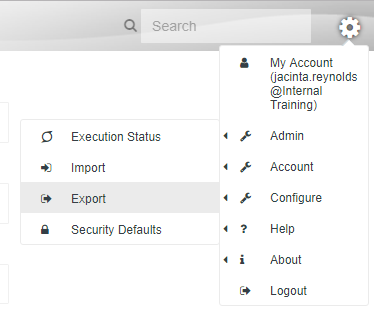

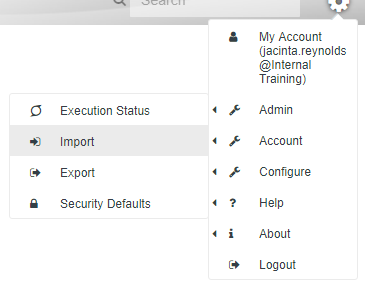

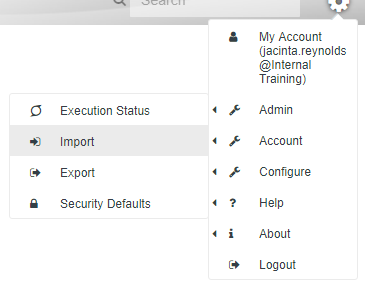

Settings are located under the cog icon at the top-right corner of the screen.

This provides you with a number of administration functions. These functions are for advanced users.

Depending on your permission level, you will be able to see and access different administrative areas within the settings menu.

Under Help, you can Raise a Ticket. Raising a support ticket will generate an email to support@idoba.com, which you can also email directly, about any issue or query you may have.

Selecting Logout from this menu will close your Akumen session.

To the left of the settings cog is the search box.

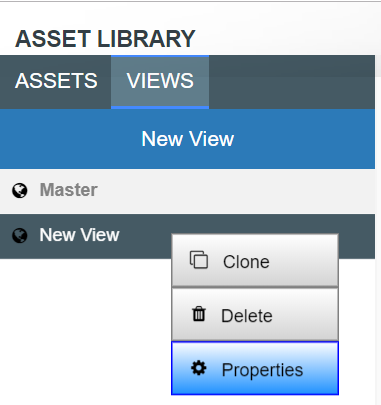

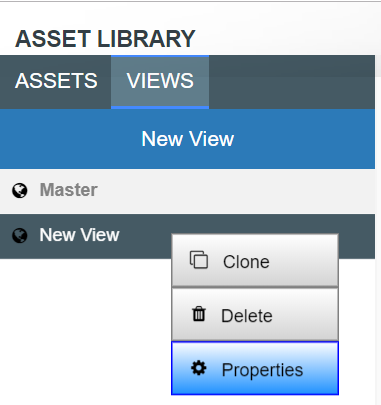

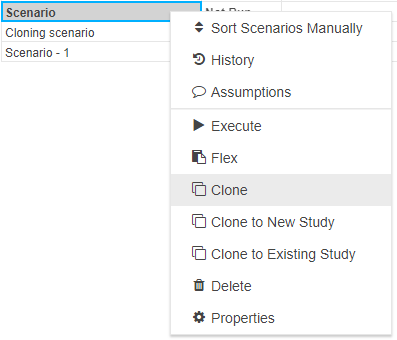

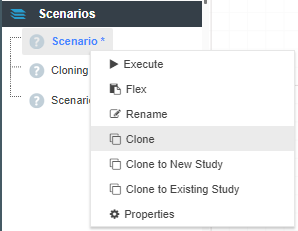

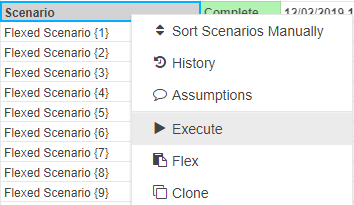

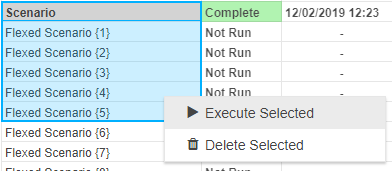

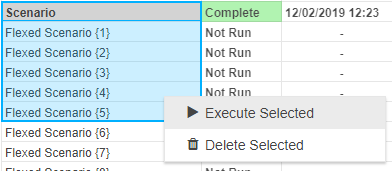

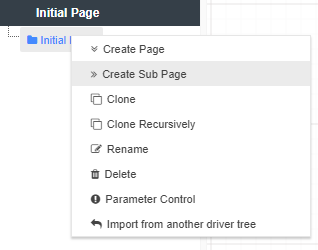

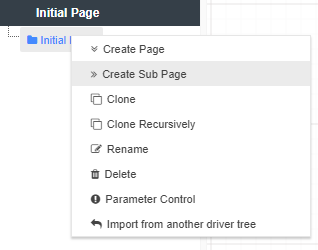

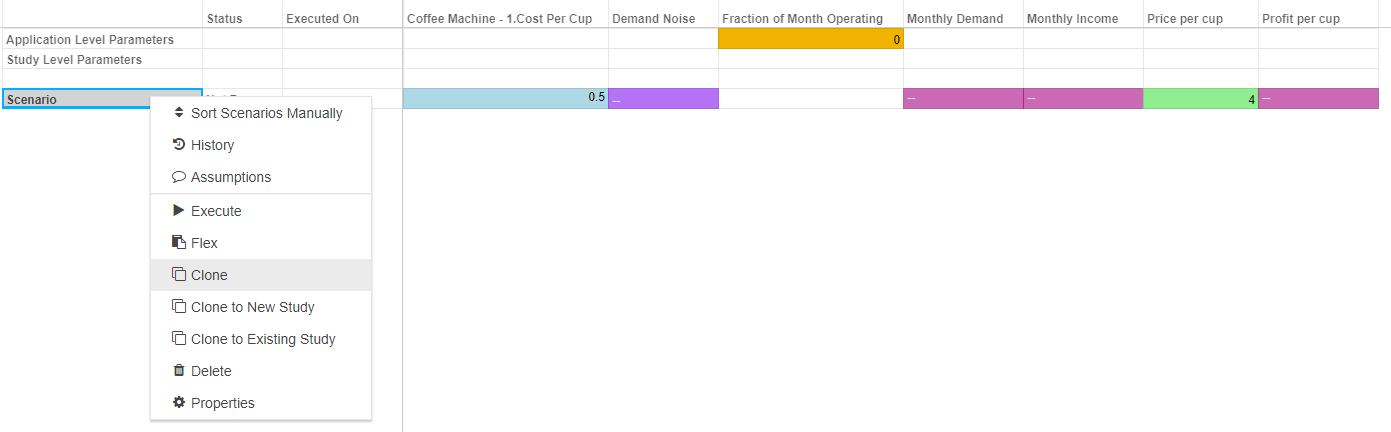

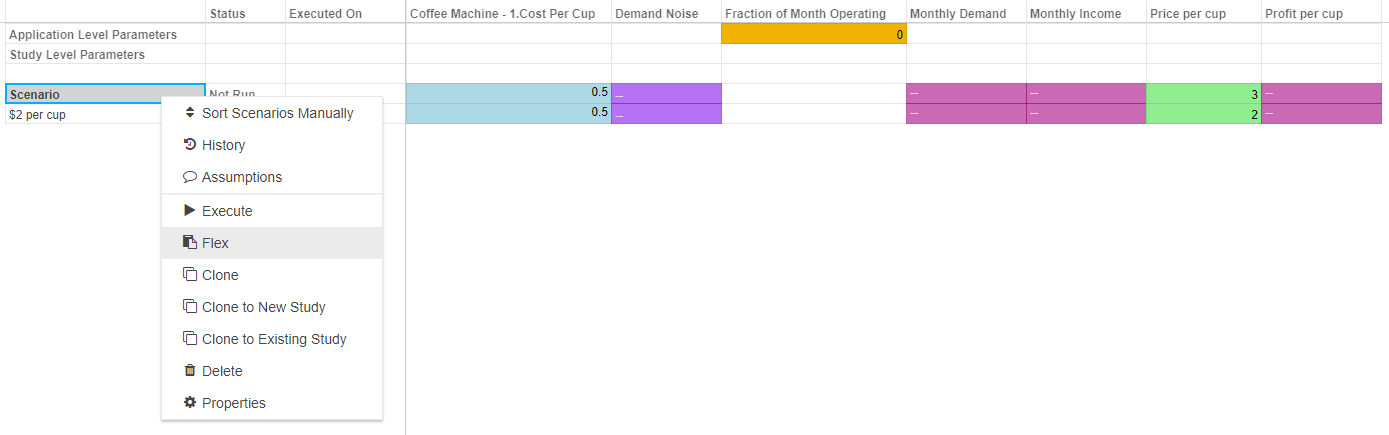

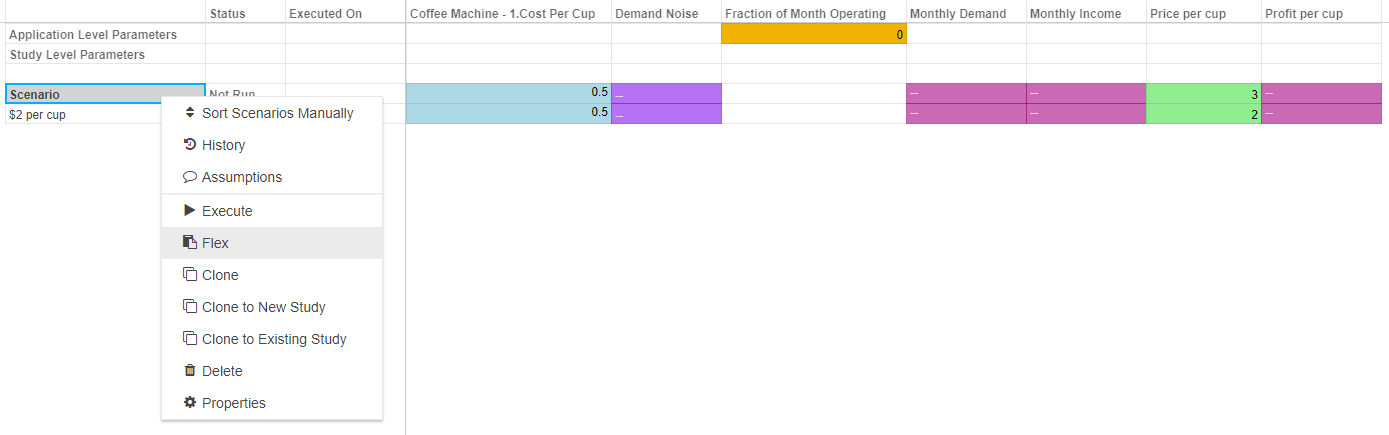

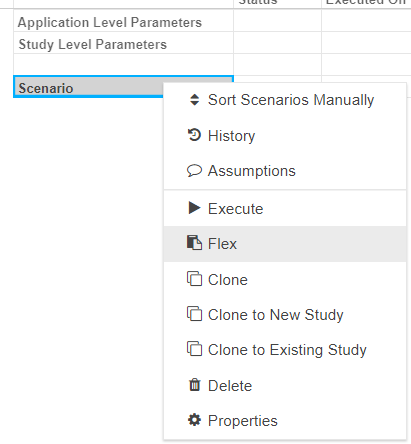

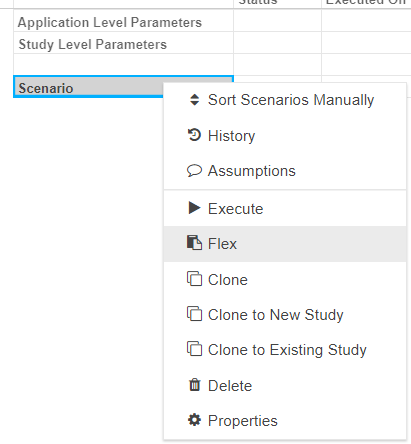

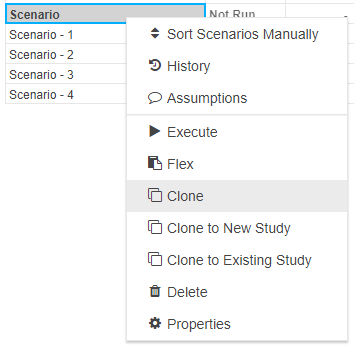

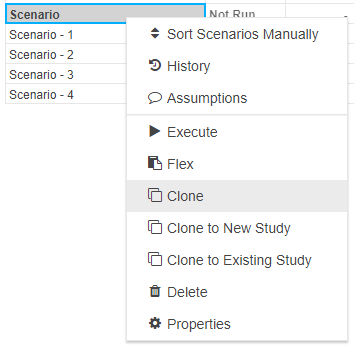

Metadata in Akumen is generally stored in grids, for example the Application Manager grid or the Pages grid. Each of these grids within Akumen has a right-click context menu that provides additional functionality, such as deleting, cloning or even viewing the properties of the selected item.

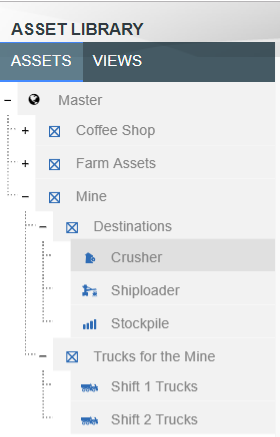

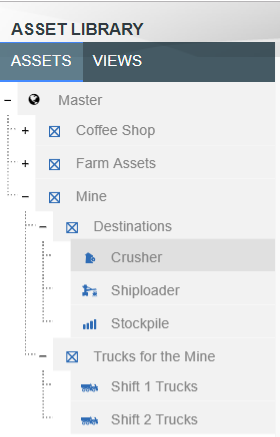

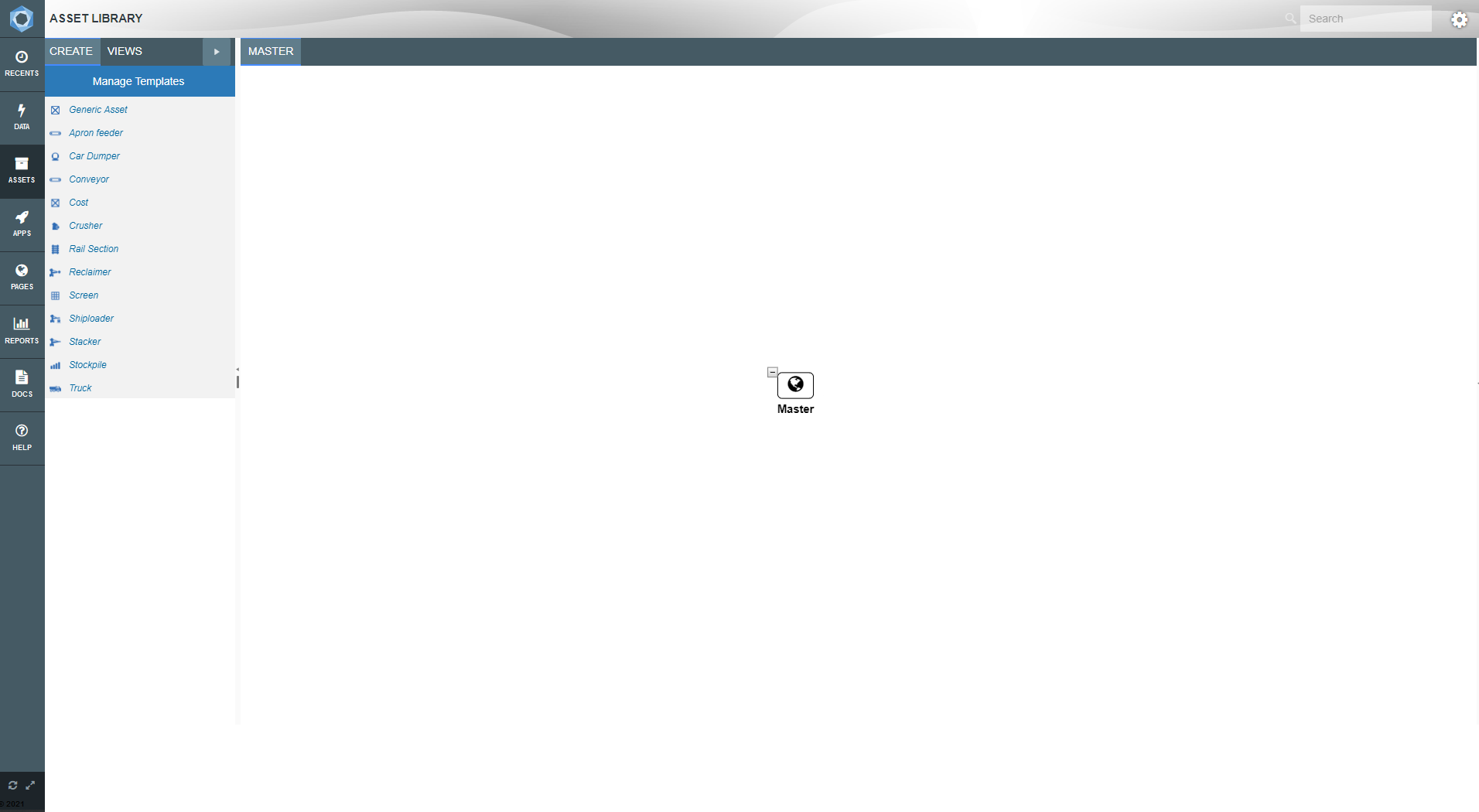

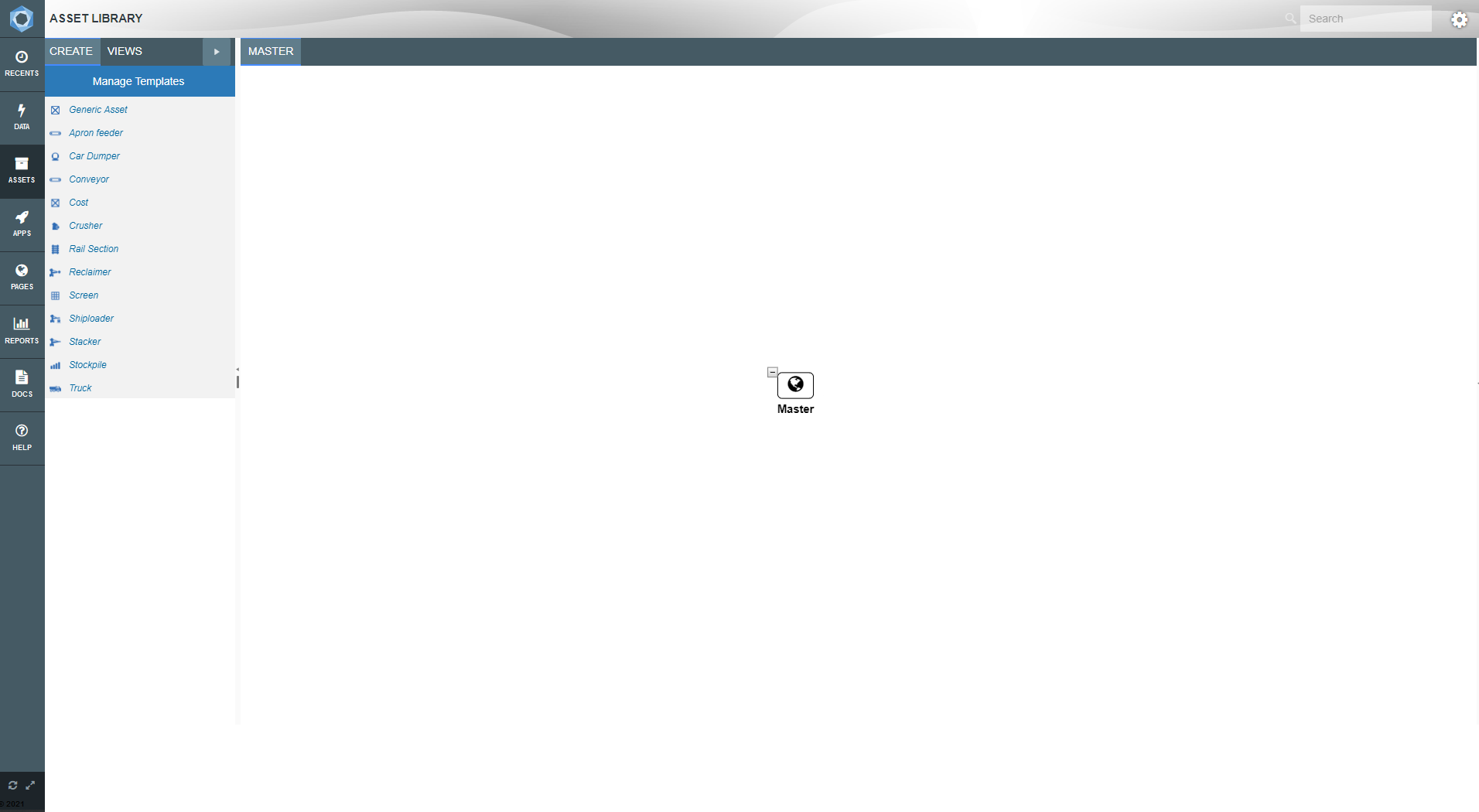

The Asset Library allows you to store data about your business.

To address the problem of data consistency and integrity, Akumen encourages the use of an Asset Library. The Asset Library acts as a single source of truth. It is a staging area for all the fixed values across the business.

An Asset is any “concept” in the business. These could be physical assets like infrastructure or equipment, or intangible assets like bank accounts, loans or rent.

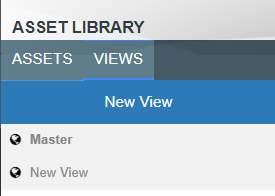

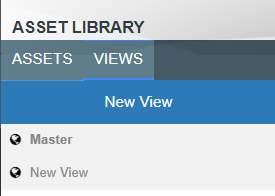

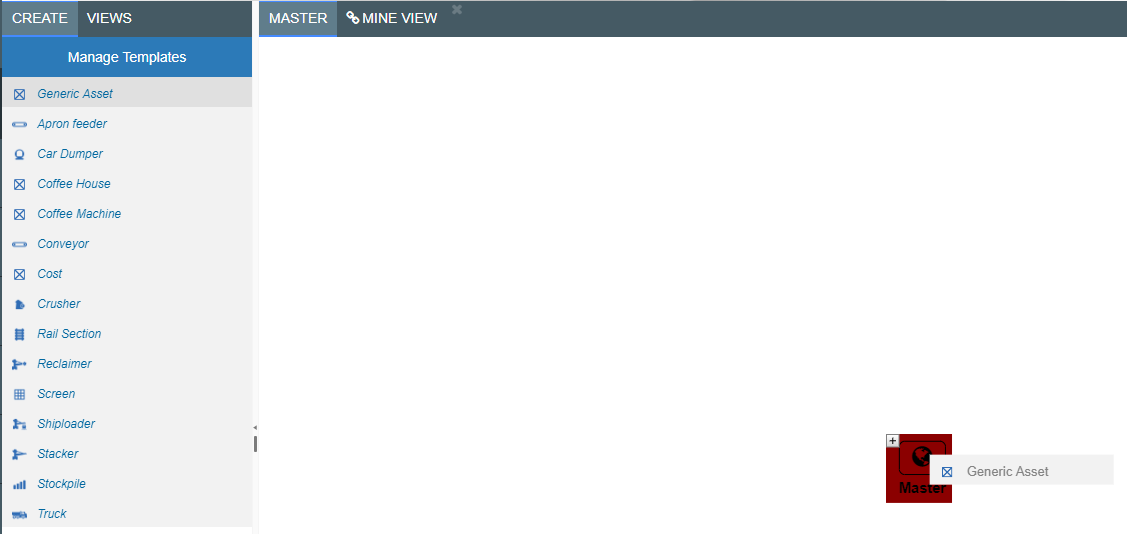

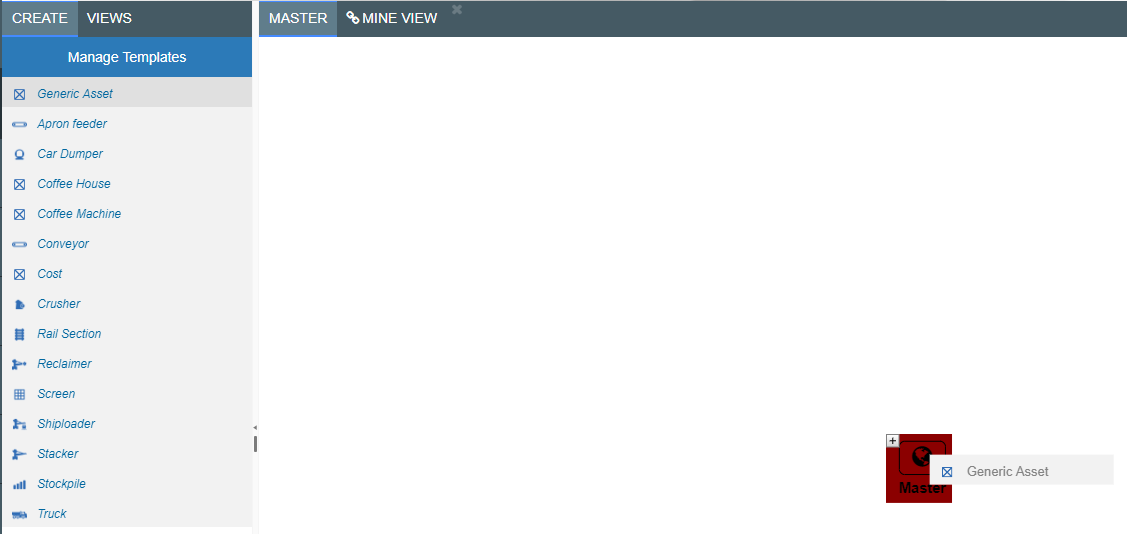

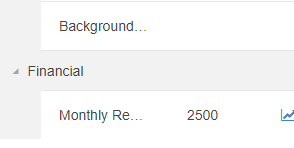

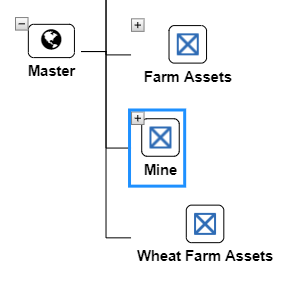

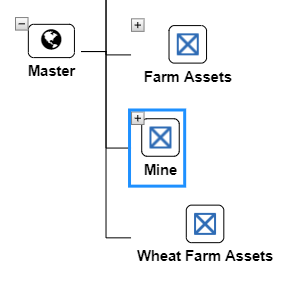

There are two main areas of the Asset Library as seen in the image below.

Asset templates provide a way of setting up the attributes of a template once. Every time that template is used to create a new asset, the attributes are automatically created with that asset. For example, creating an asset of type Crusher will include attributes such as: Name Plate Rate and Manufacturer.

They are accessible through the Manage Templates button in the Create tab in the Asset Library, or through the Cog, Configure, Asset Templates.

The Master View is where all of the fixed data for your applications are stored in a hierarchical format.

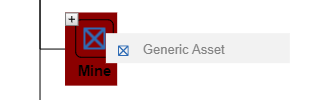

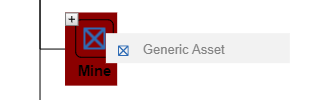

We’ve seen that the left pane has two tabs one of which is Create. The Create tab allows users to create assets based on templates.

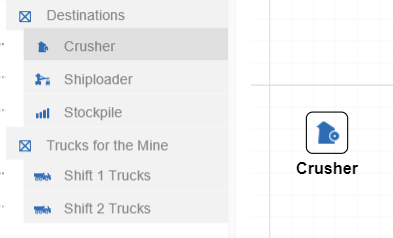

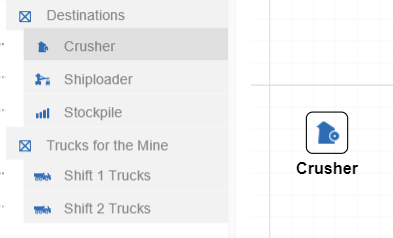

By dragging a template onto the Master View icon, users can attach an Asset to the Master View. If an asset template is dragged onto another asset than that asset becomes part of that branch.

To add an Asset to the Master view:

Each Asset must have a unique name so that they can be linked to from within applications.

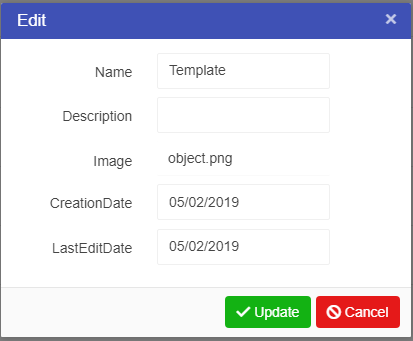

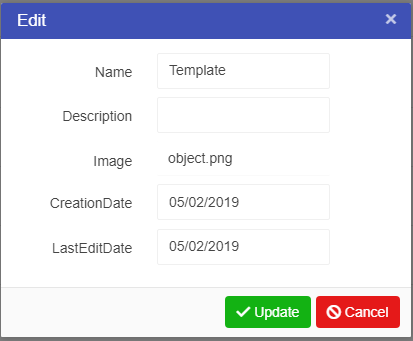

Every Asset template has a set of properties attached to it. For example a truck asset will have a specific set of properties attached to it which will differ from those of a cost or conveyor asset.

Each template has a selection of data points surrounding each Asset. These are called attributes. These attributes are only suggestions and each point does not need to be filled in for models to run. Models that do draw information from the Asset Library will only take the asset information available to it.

If a model is run that does require specific information from the Asset Library and it does not exist within the Asset, the model will leave that piece of information blank. If the information is required by the model an error will be returned when the model is run asking users to enter the required information.

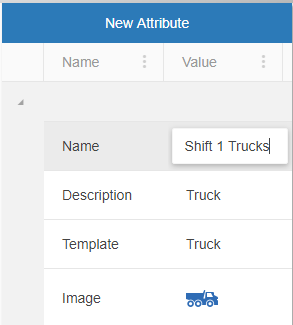

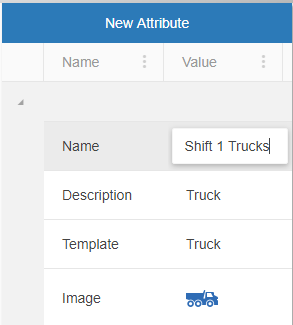

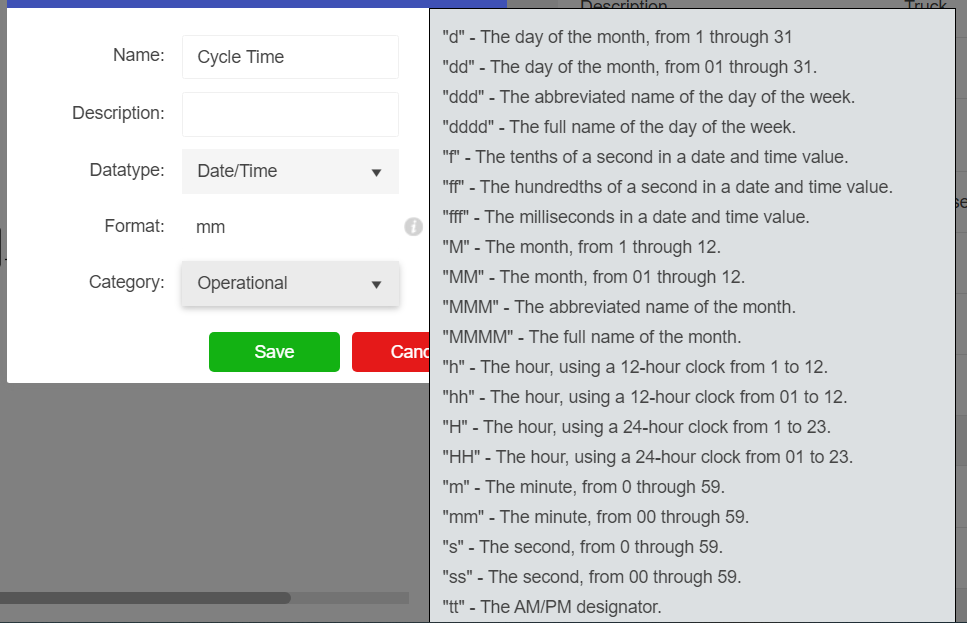

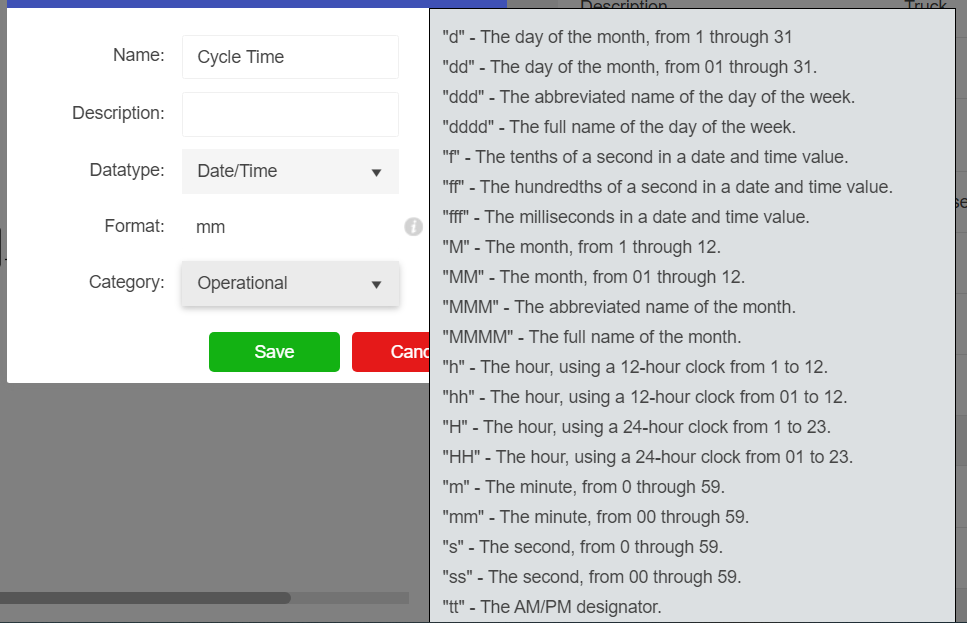

However, templates do not always have all the needed attributes for a particular Asset. Sometimes Attributes will have to be created in order to add extra information regarding the Asset. These can be added by simply hitting the “New Attribute” button in the configuration pane of a selected asset. Once an attribute has been created, either through the template or manually through the asset, selecting the asset will bring up a properties pane allowing the user to edit the value.

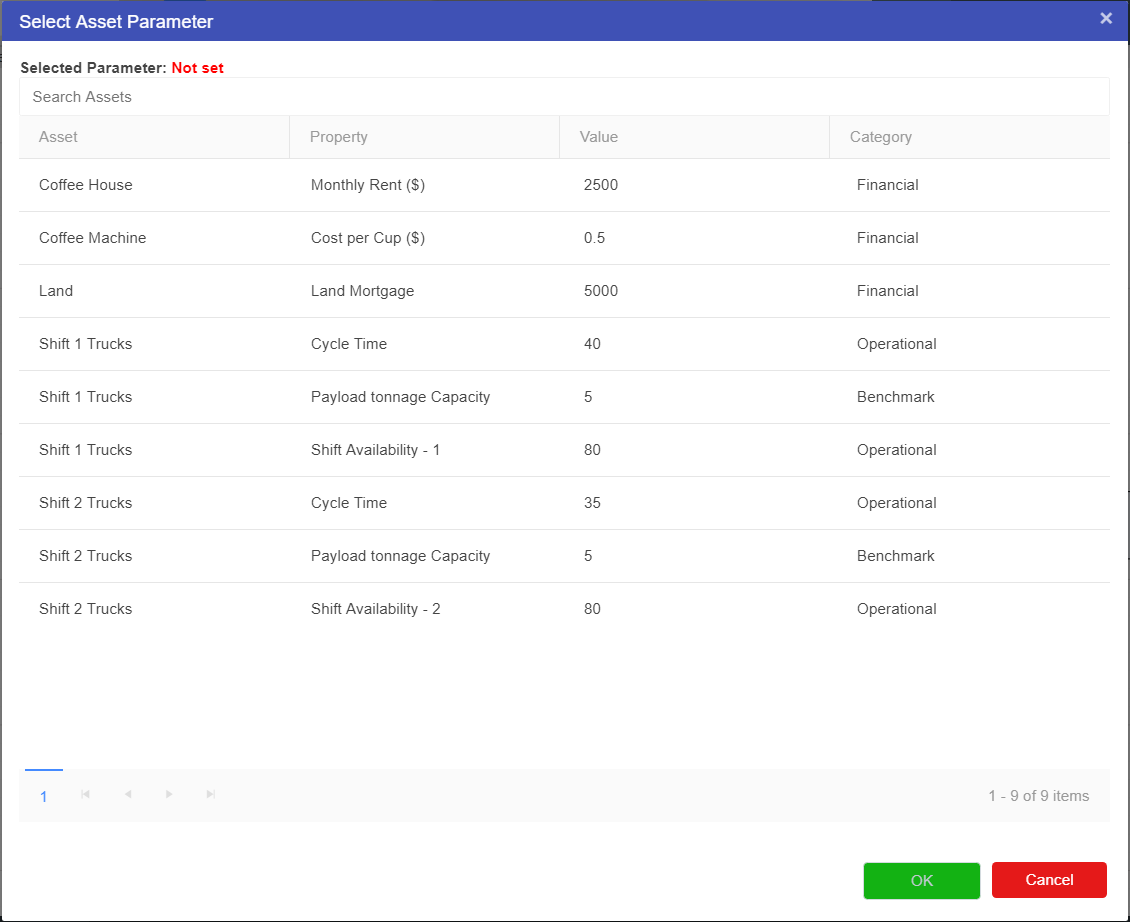

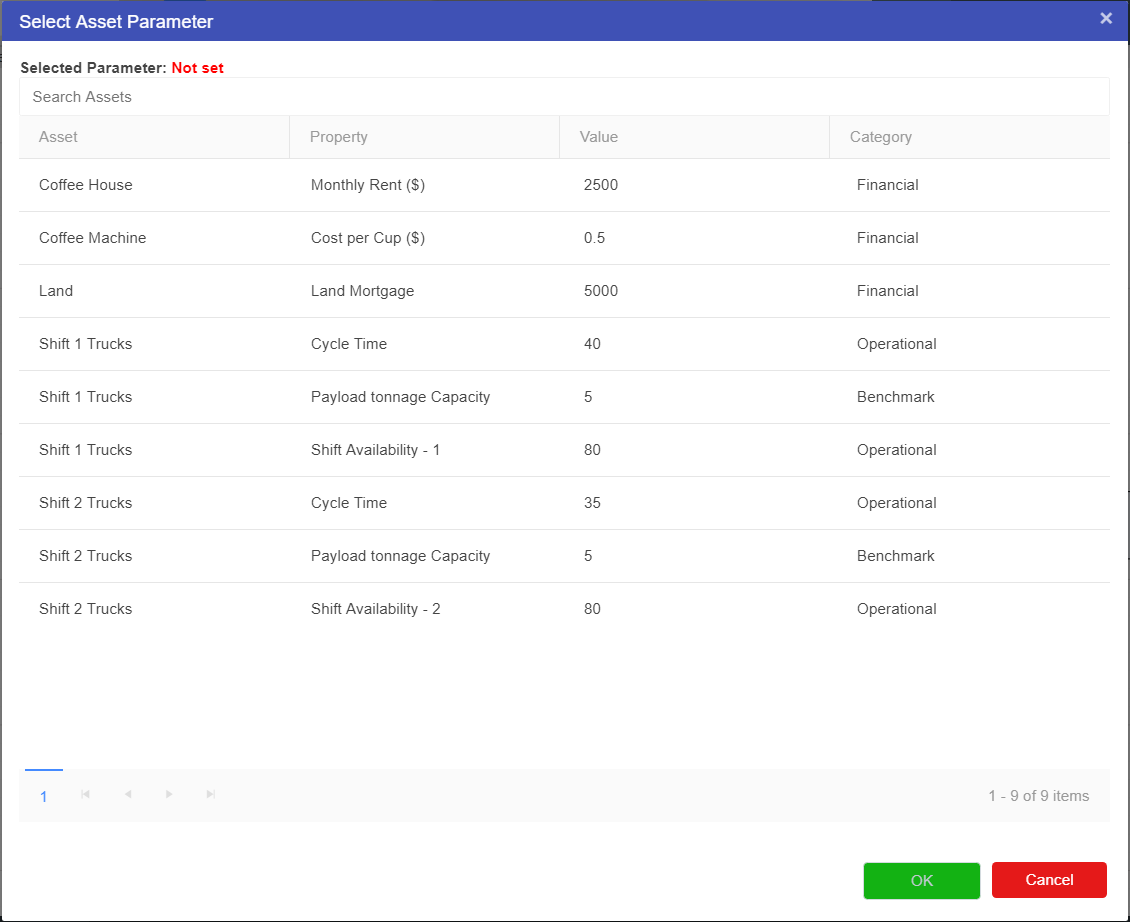

If no value is set for an attribute then that attribute will not appear in the Driver Model asset parameter node menu.

There are several default categories available - each attribute must be allocated to one category:

New categories can be added in the Asset Templates screen, under the “Categories” tab.

When a new attribute is created it will appear under the appropriate category in the configuration pane of a selected asset

Any asset attribute can have an assumption entered against that attribute. Simply Right Click the attribute, and hit the Assumptions button. A popup window will appear allow you to enter some rich text about the attribute.

Once the record is saved, a comment icon will appear next to the attribute in the properties grid.

Attributes can be marked as approved by right clicking and hitting the approve button. Once approved, a check icon appears next to the attribute, as well as a tooltip indicating the approver’s details. To remove the approval, simply alter the value.

Attributes can also be locked such that they cannot be edited. Similar to approvals, right click an attribute to lock it. A padlock will appear next to the attribute, and prevent editing. Note that this is different from security, as attributes can also be unlocked by right clicking.

The primary use case for this functionality is if you have a model that calculates an asset value, or populates it from an external source, you do not generally want users modifying this value. An API call can lock the parameter, but it also does not adhere to the same restrictions the user interface has in regards to locking (ie the value can be edited programmatically, just not through the user interface).

There are a number of different types of views. Each view allows users to see assets from the Master view in different ways:

To create a New View select New View at the top of the left pane in the views tab. A new view will automatically be created.

To change the type of view:

In the properties panel of the View you can also change the view’s:

We recommend giving each view a specific and unique name so that it can be easily identified from other views.

There are four types of Views you can create:

This view is exactly like your original Master View. The Master View shows all of the Assets in the Asset Library in the form of a hierarchy tree. Although similar to the Master View, the hierarchy view allows users to choose specific branches of the Master Tree they want to view. This is especially useful when setting up permissions as part of our recommended Best Practices for Akumen.

Hierarchy Views isolate user defined branches and when users select that view they will only be able to see that particular branch.

To create a Hierarchy View:

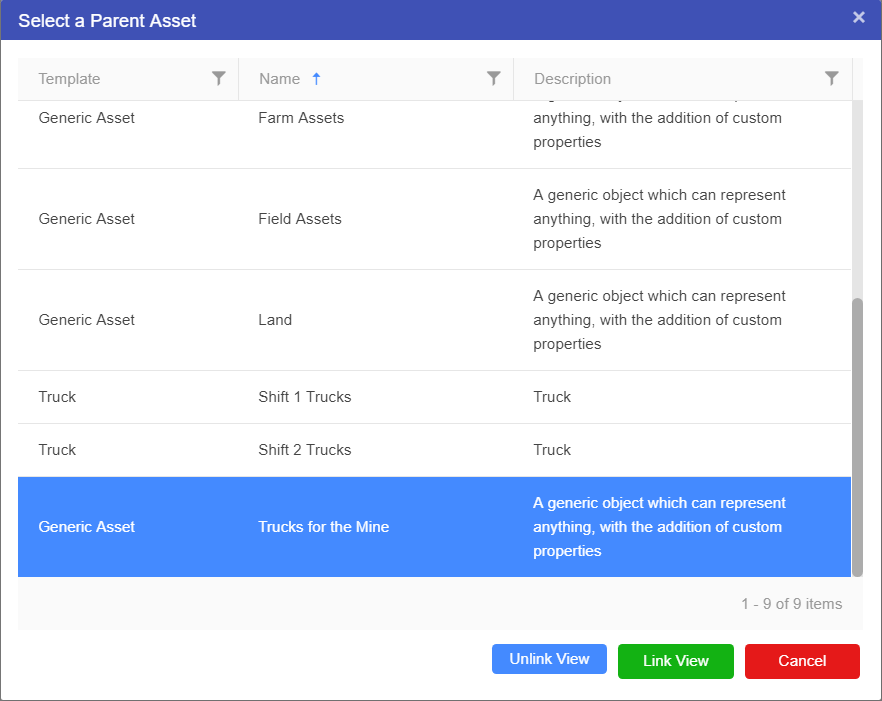

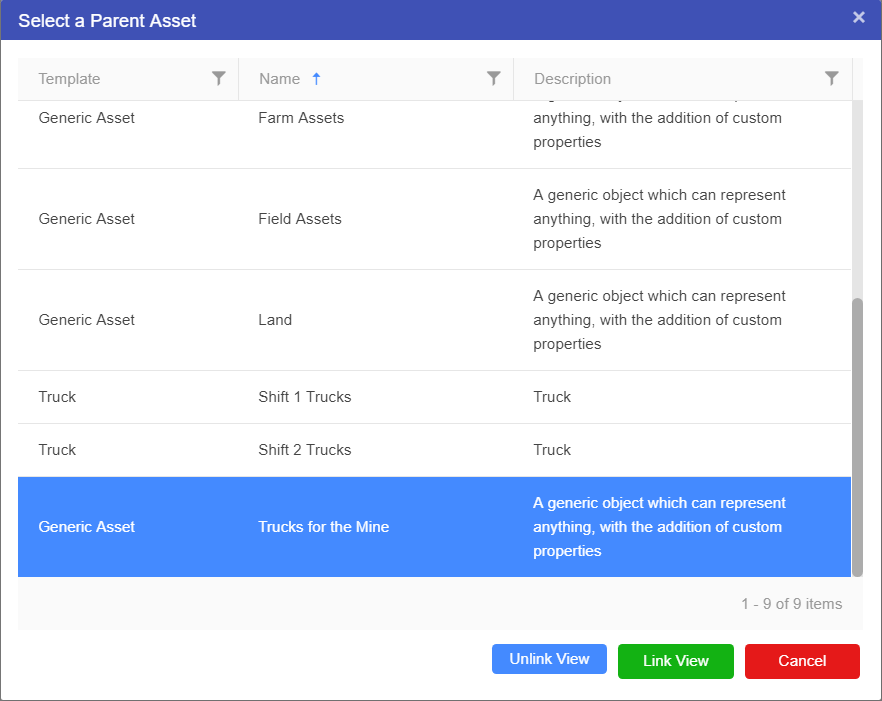

Hierarchy Views can be created from scratch by dragging and dropping assets from the Assets palette on the left of screen, or an asset can be set as a parent, in effect linking the current view to a section of the Master View.

To link a view to an asset in the Master view:

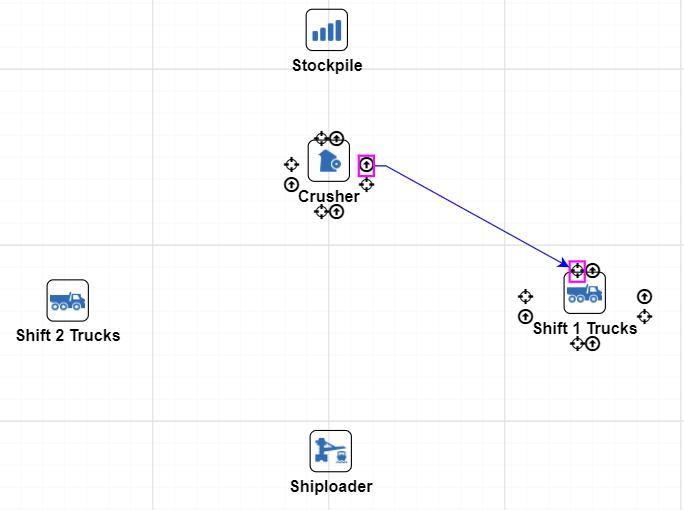

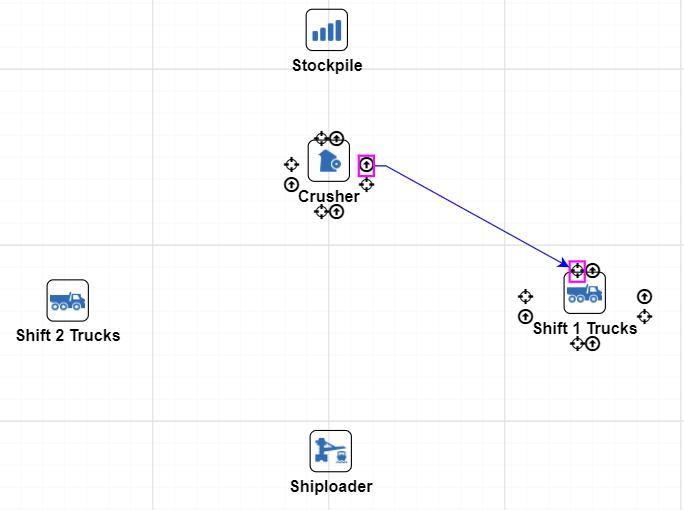

Is a way of representing data in the form of a network, similar to a mind map.

A Flow View allows users to create a flow diagram linking assets together. This is most useful when trying to model supply chains or logistics flows. Unlike Hierarchy Views Flow views are not linked on one specific asset of a branch. Users can draw on assets from all over the Master Tree to create their flow.

To create a Flow View:

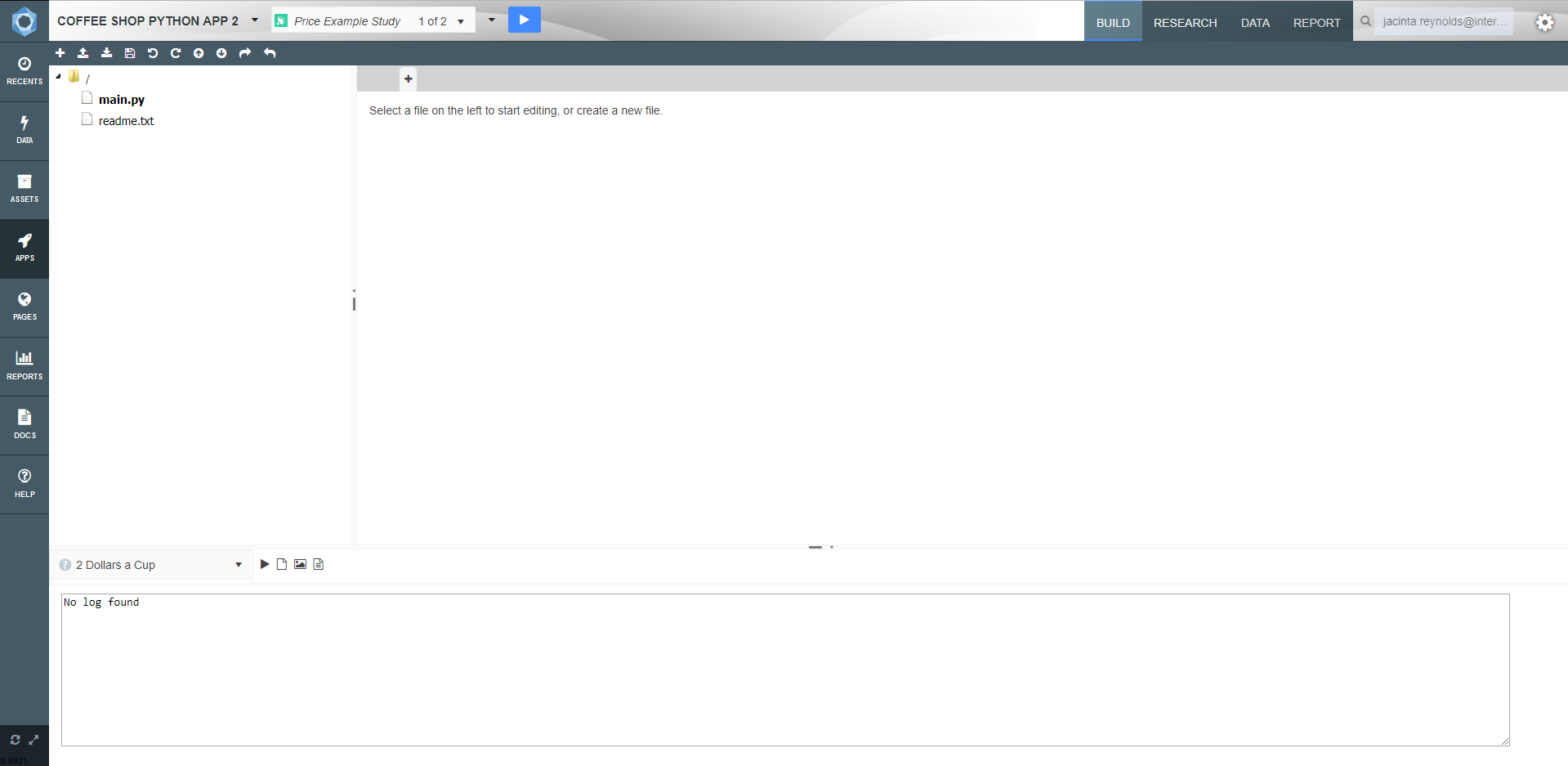

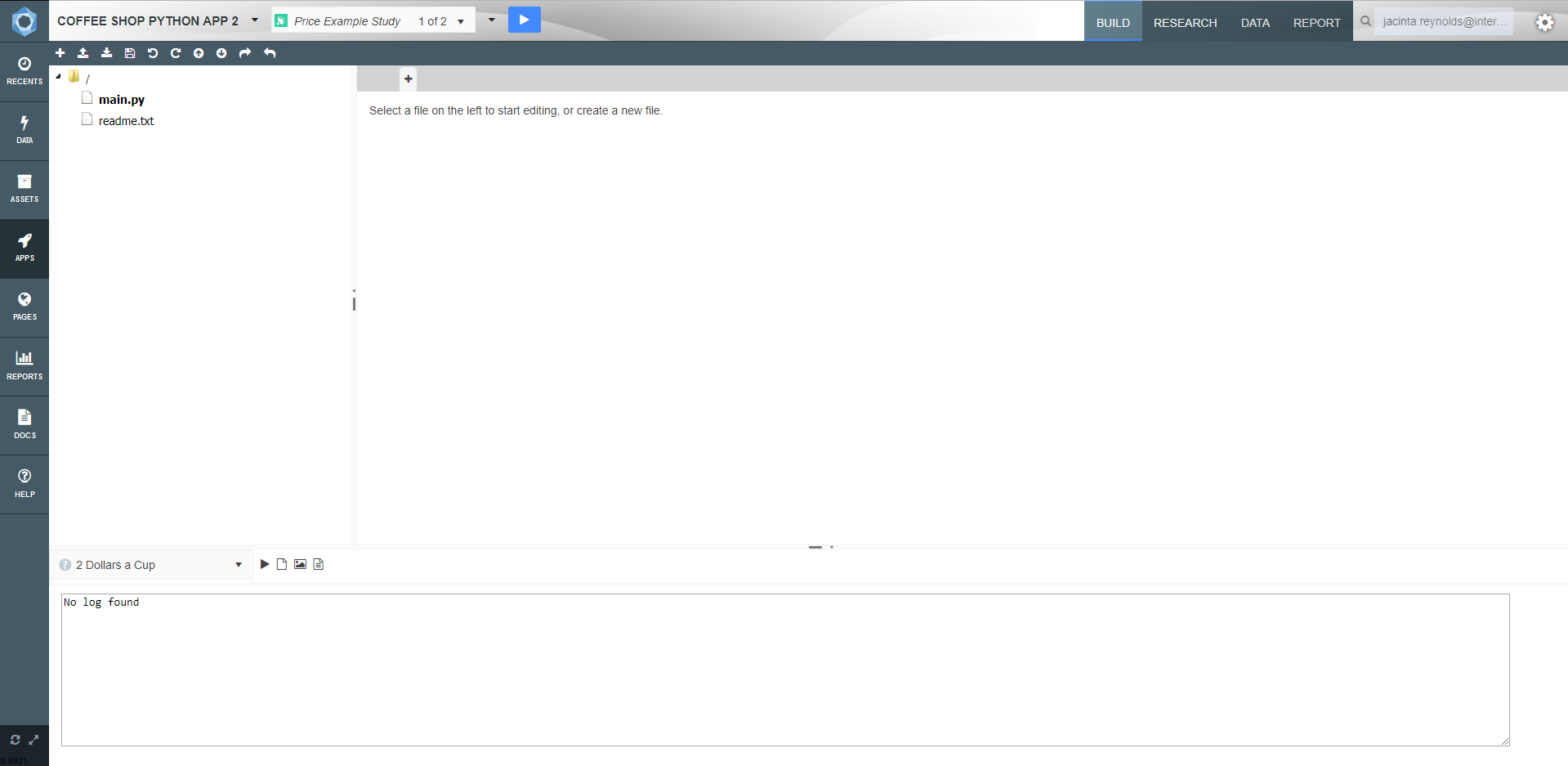

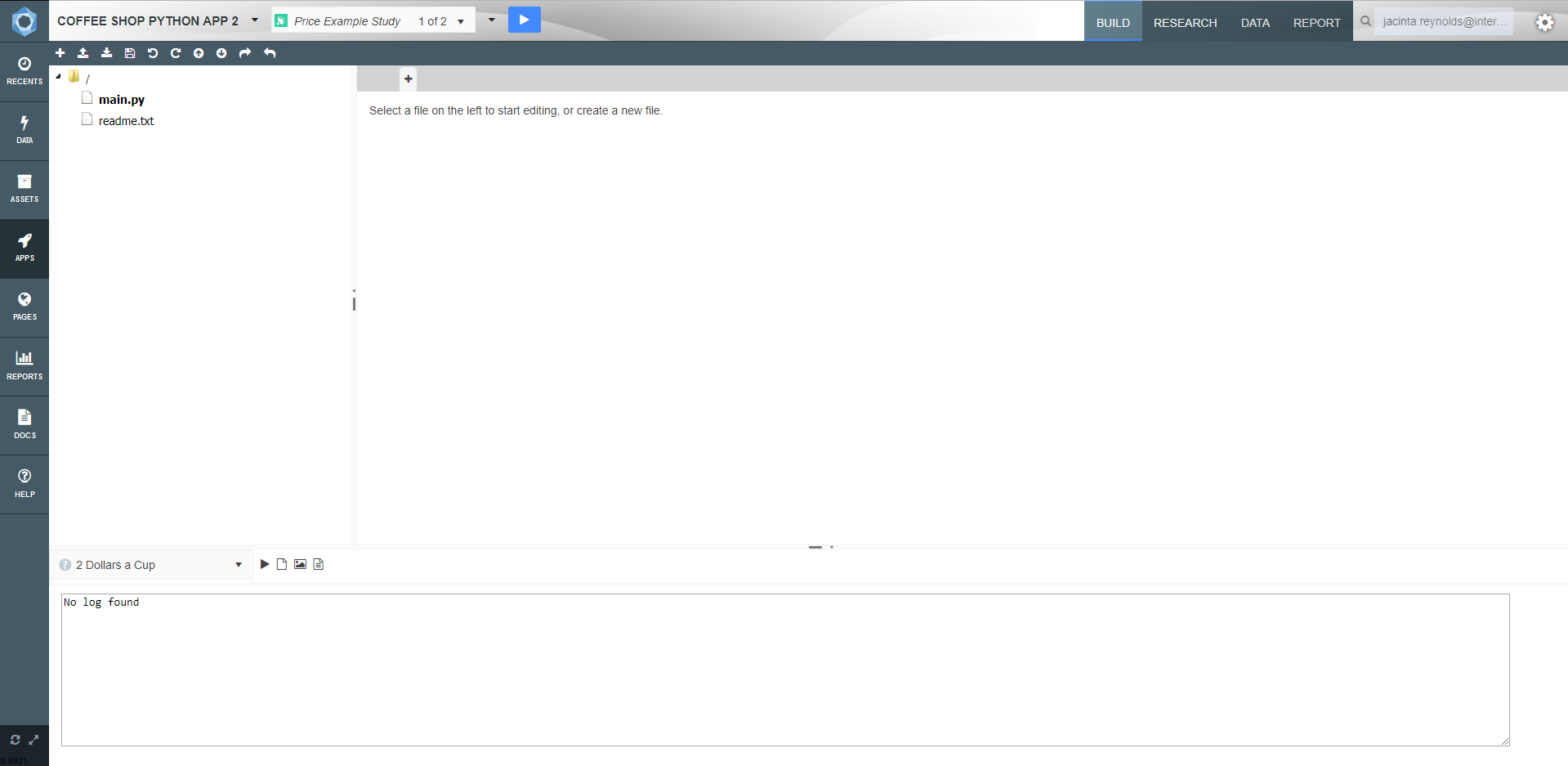

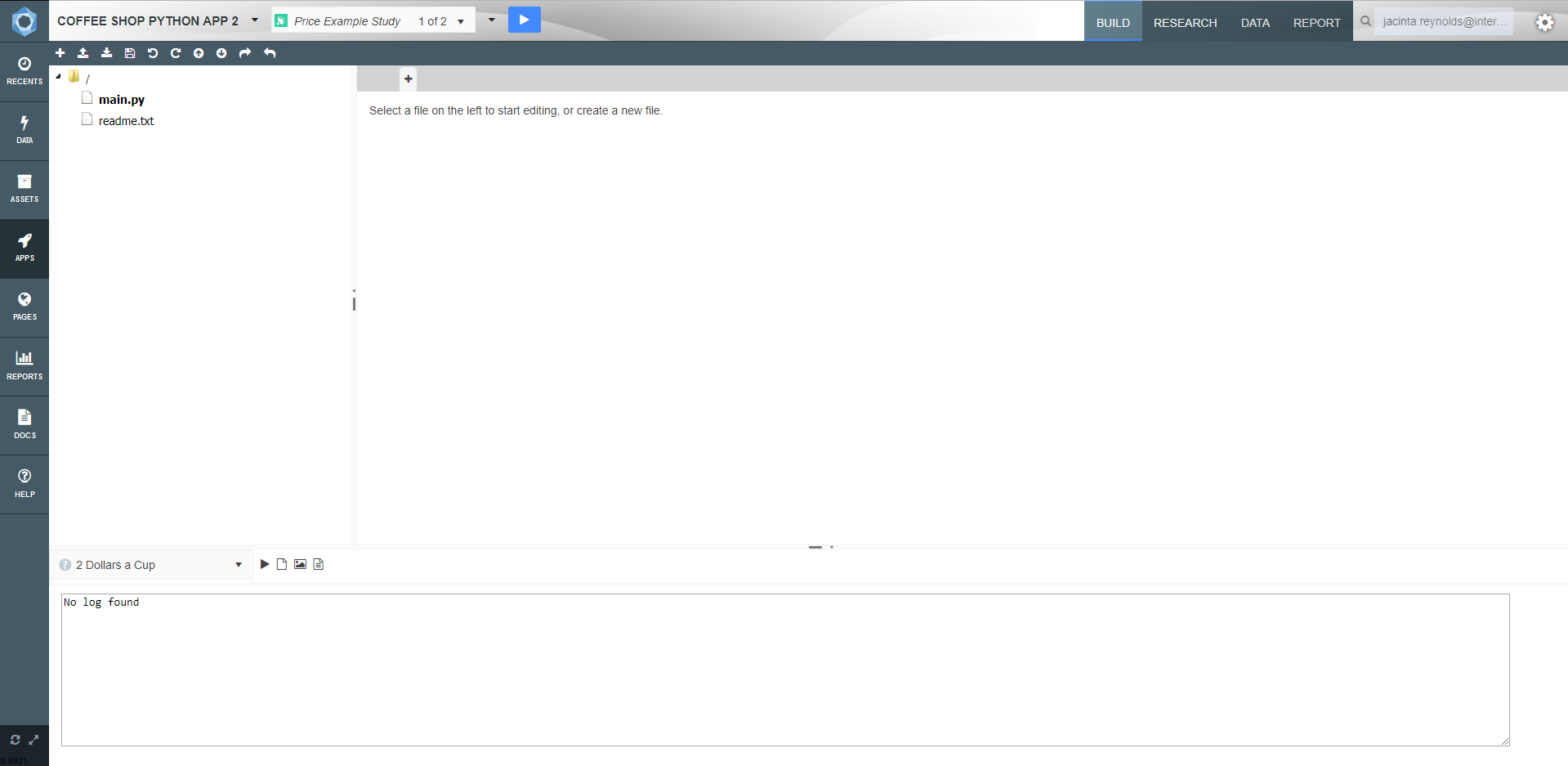

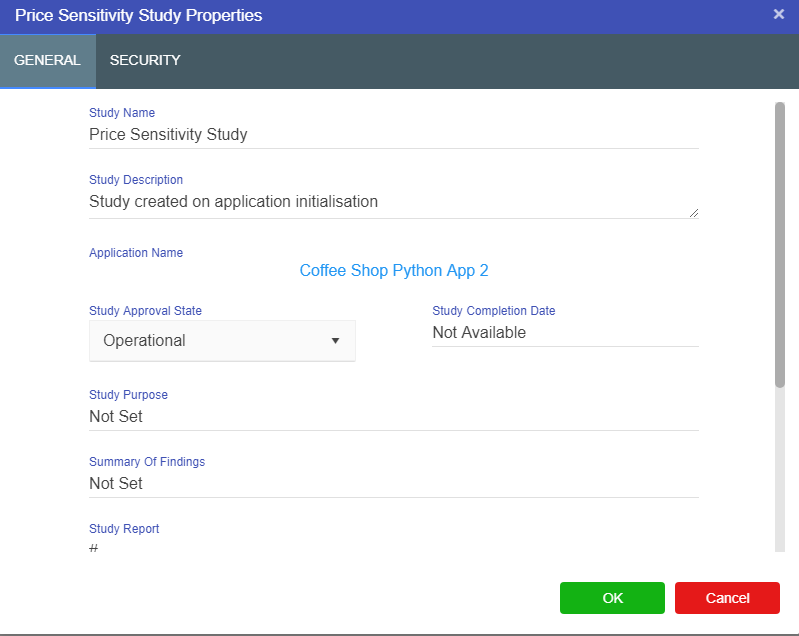

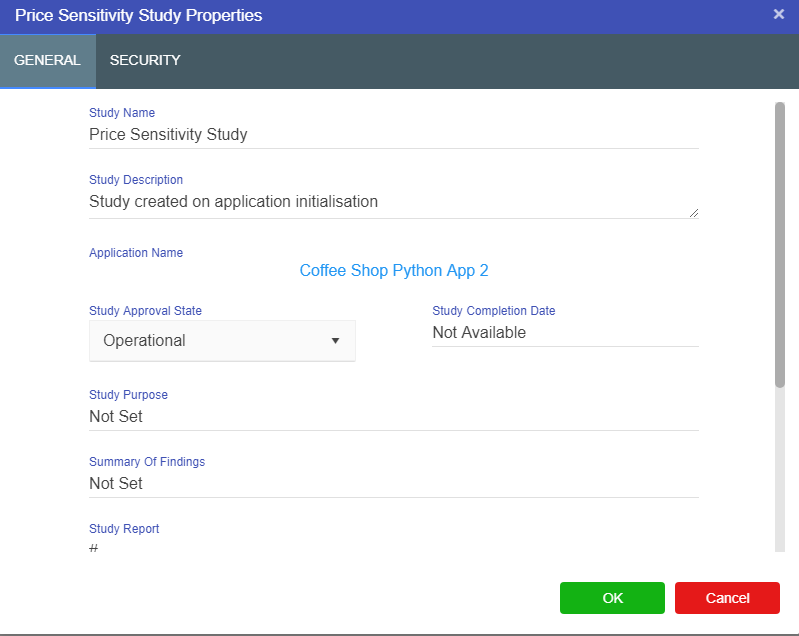

You can create an application by using code based Python or R models, or graphical Driver Models.

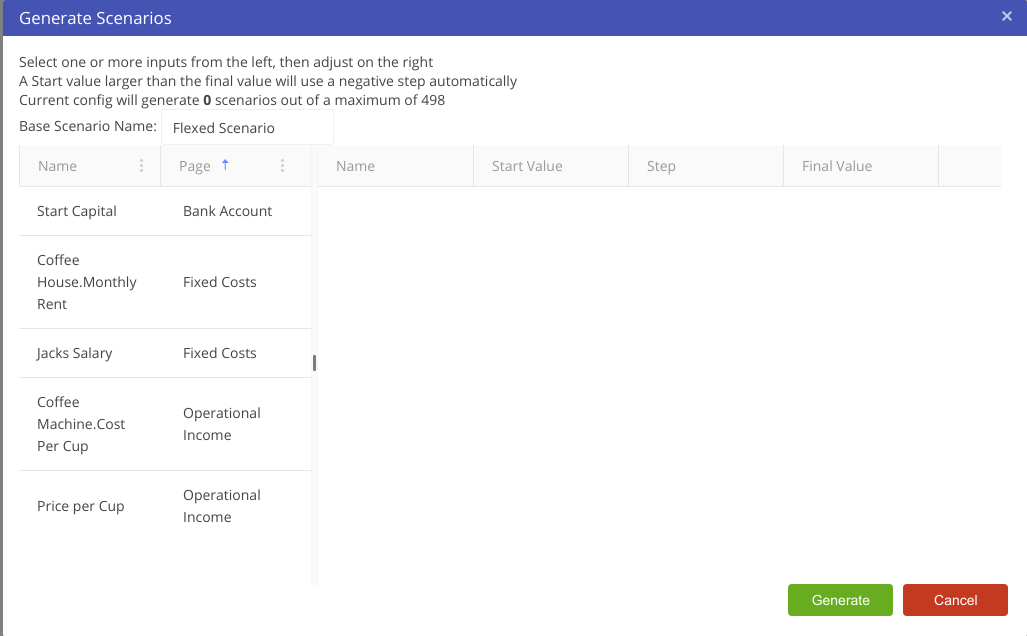

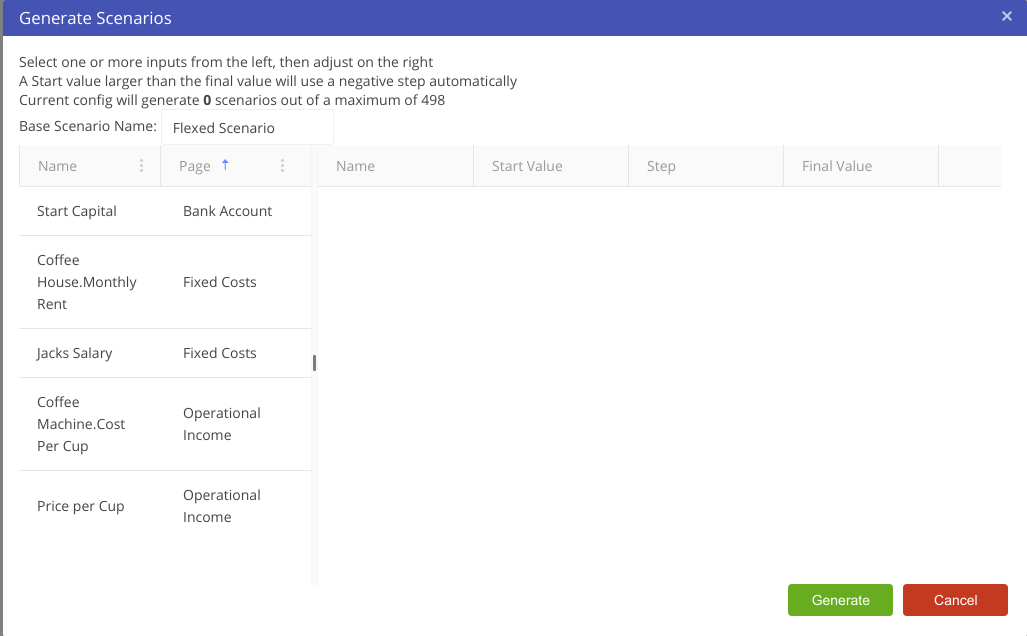

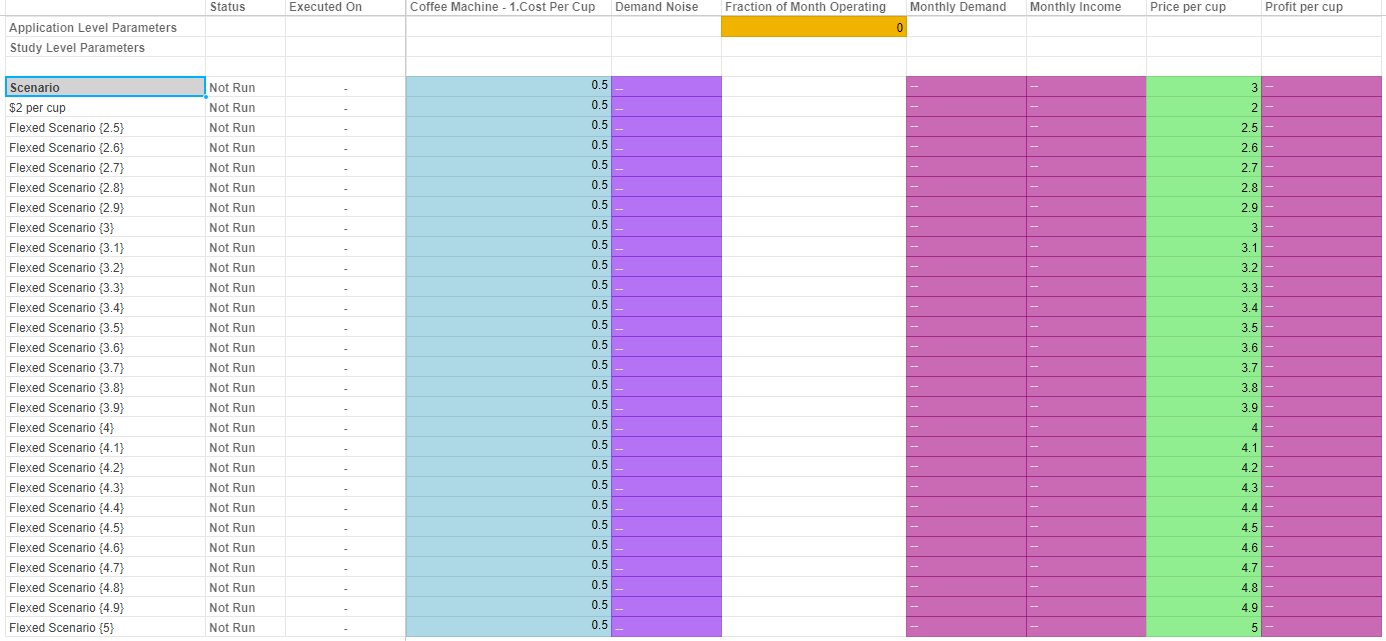

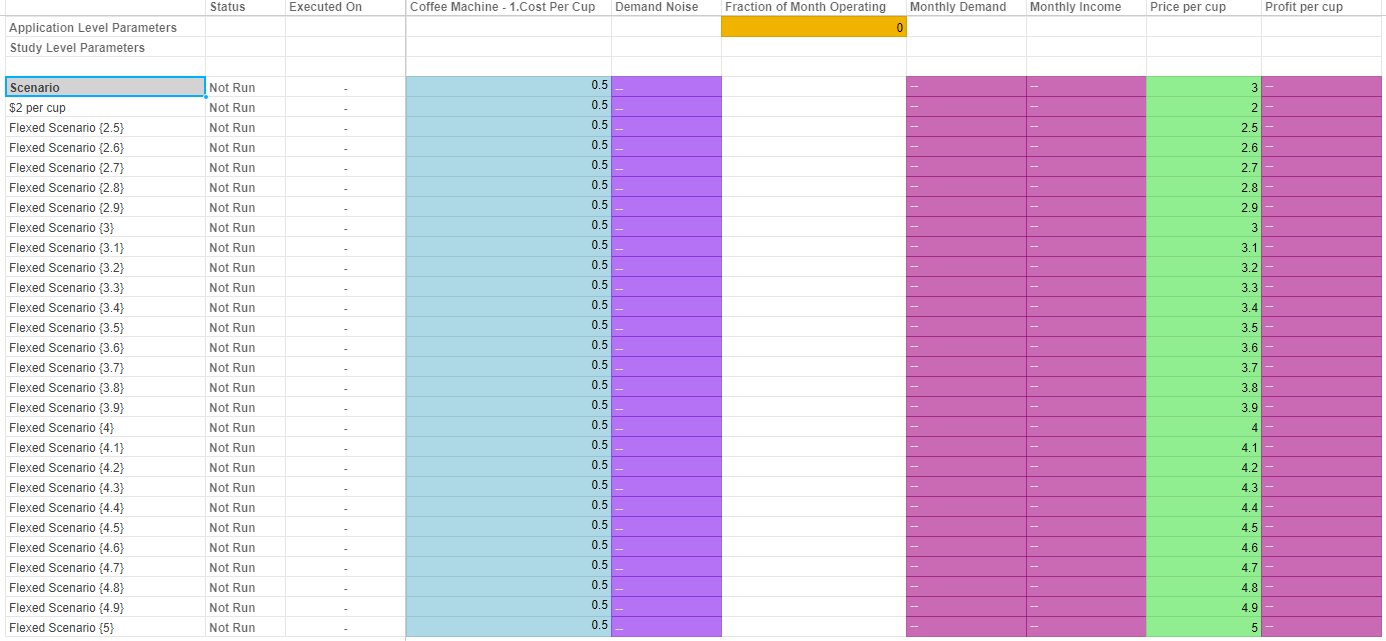

Build your model once and use it to perform multiple what-if scenarios.

Value Driver Models (VDMs) allow subject-matter experts to build graphical models, and easily share them throughout your organisation.

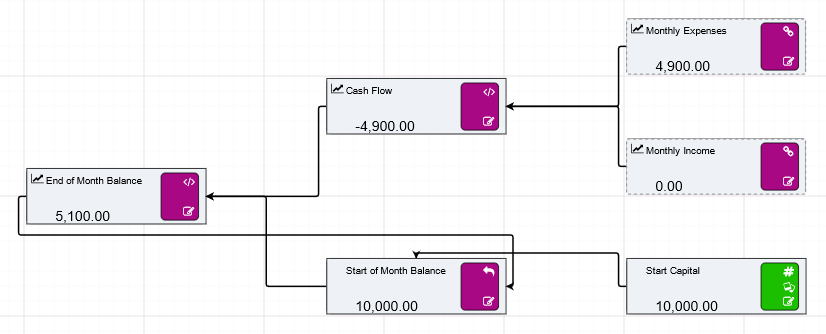

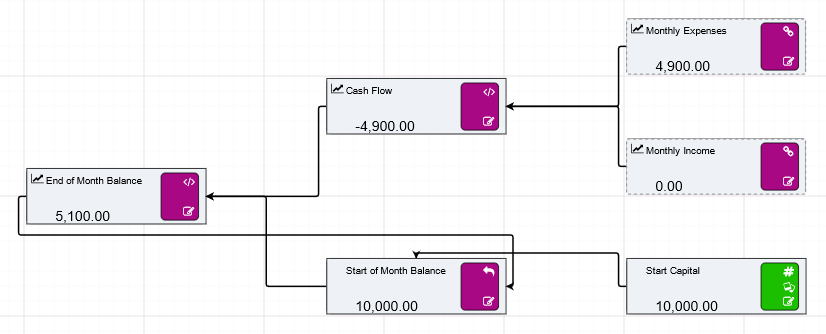

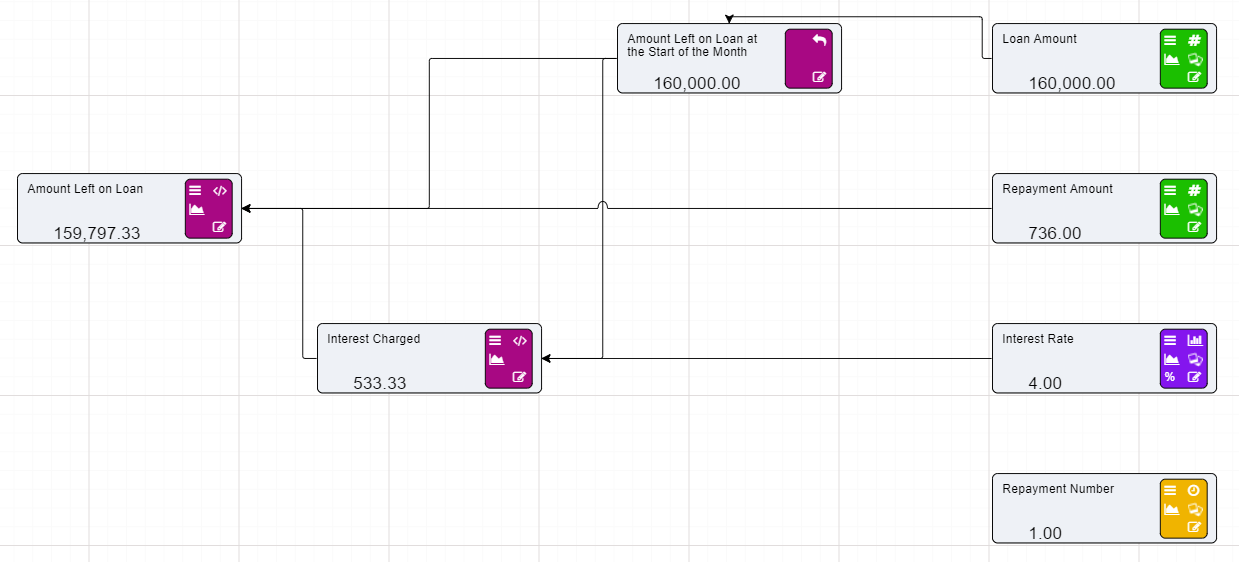

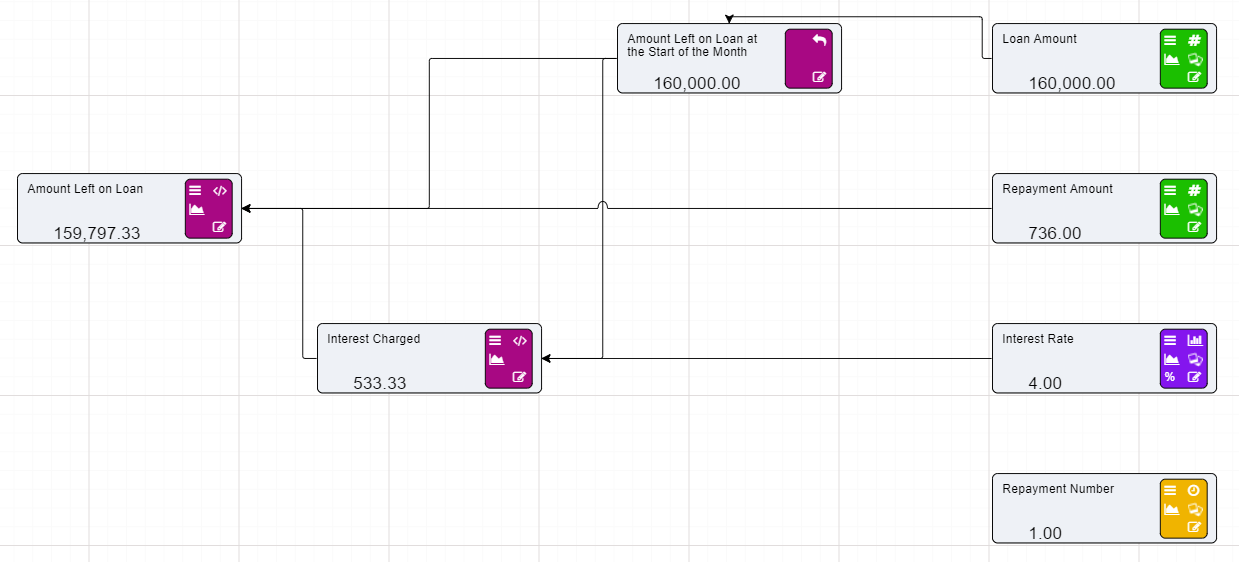

Before we launch into Driver Models, what different nodes do and how to connect them to each other, it is important to understand the very basics of a Driver Model.

For this manual we will be using different examples to explain each node. It is recommended that you create multiple Applications so that you can experiment with the Driver Models described in this manual, expand on them, or even use them to create something entirely different.

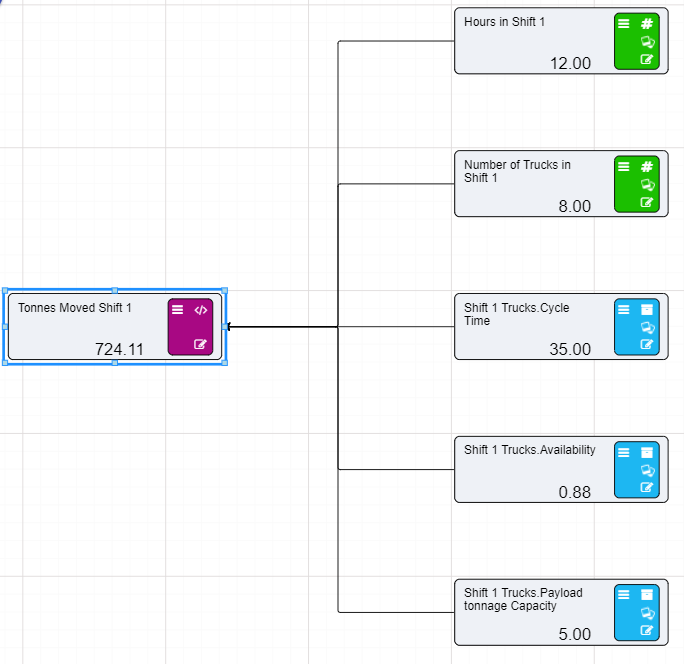

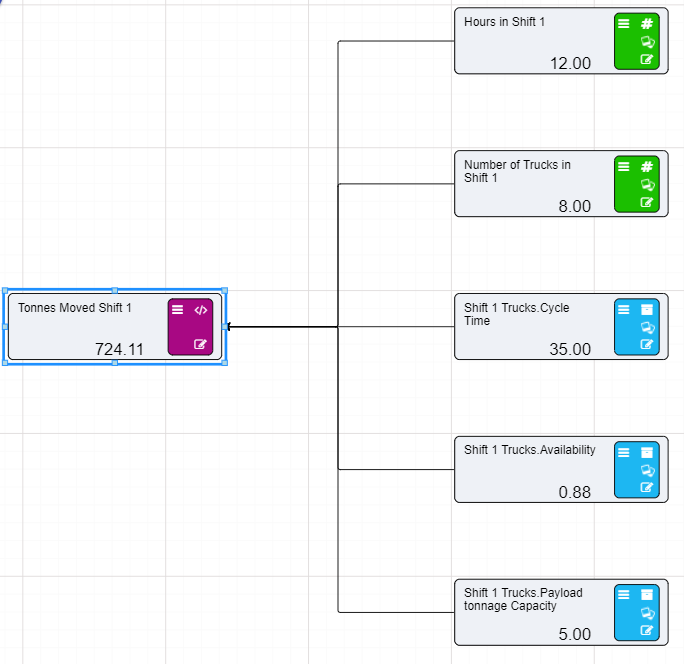

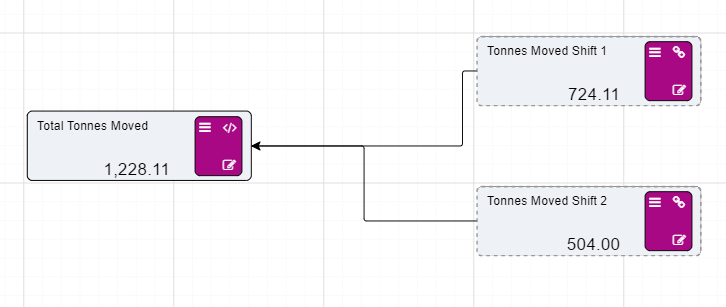

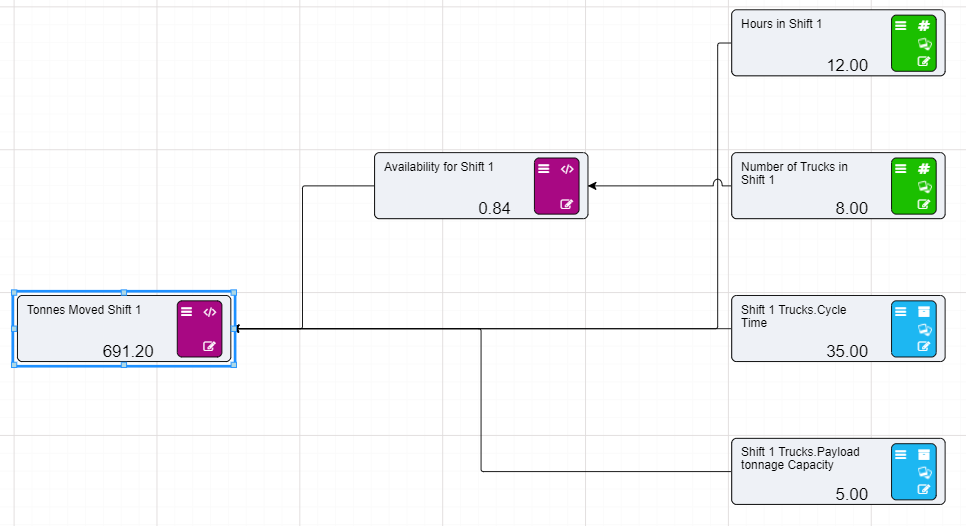

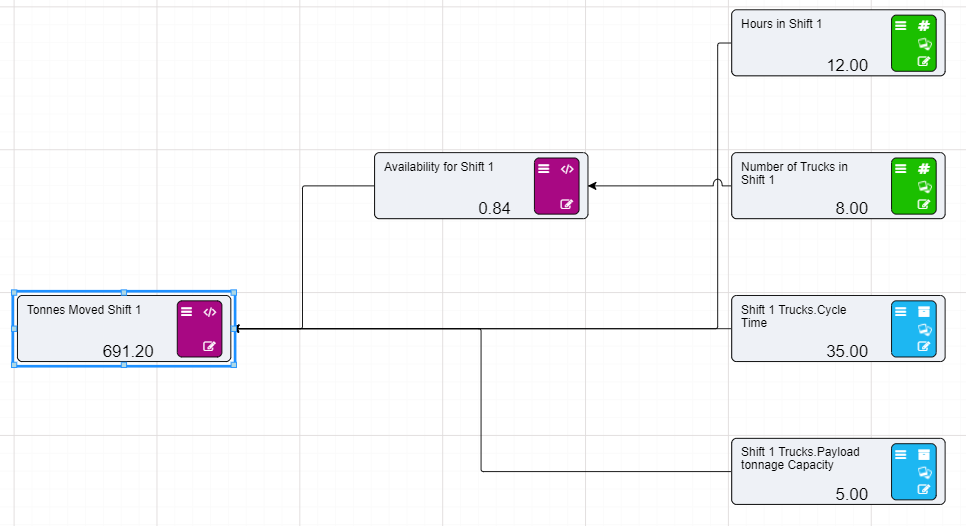

A Driver Model graphically represents inputs and equations in a free form tree diagram allowing users to determine the key factors that impact results.

Akumen Driver Models are based on Value Driver Models – a technique commonly used in Business and Process Improvement.

To create a new Driver Model:

There are few extra things to note about the creation of Applications in Akumen. The first is that if you leave your application at any time you can always get back to it by either going through the Application Manager or the RECENTS tab. Both will take you straight back to your Driver Model.

If you are looking at the Application Manager you will also notice how there is an icon that has three vertical dots at the right end of each App.

If you click on the above icon (or simply right click the application) the following options will be brought up:

All of the above options make it possible for you to modify your model’s properties, attach important information to your model, and create new versions of your model if needed.

Once you have created your new Driver Model App, you will be taken to the main Driver Model page. The main page looks like the image below.

There are ten main areas for a driver model:

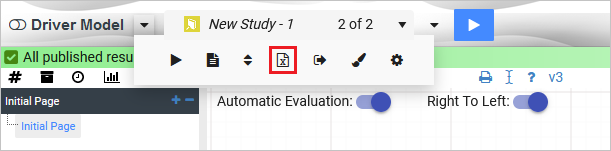

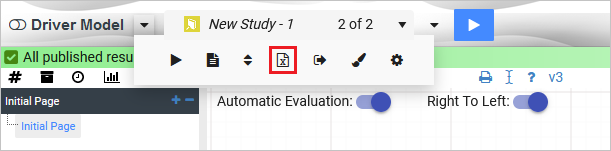

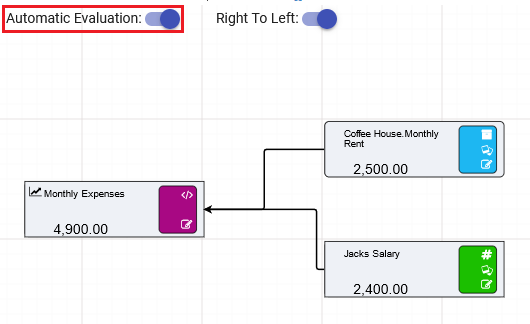

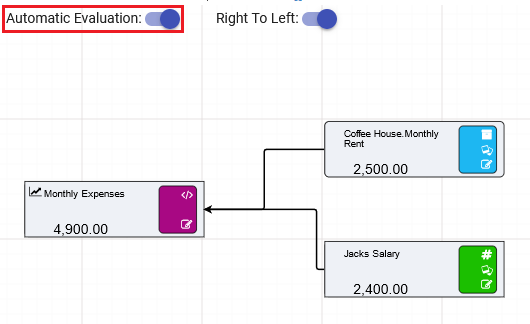

In the Driver Model Editor we pointed out the ten major areas of the editor. Number 5 allows users to decide what style of Driver Model they would like to create.

There are two options:

Both are able to be selected by clicking on the Right to Left switch at top left of the editor, next to the Automatic Evaluation switch and under the Node Palette.

By default, the style of the Driver Model is set to Right to Left.

Setting the Driver Model direction depends on the type of Driver Model you want to create.

Right to Left is for traditional driver models, such as those used in Lean.

Left to Right is more commonly associated with process flows and schematic type layouts.

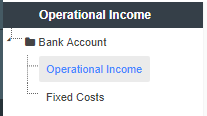

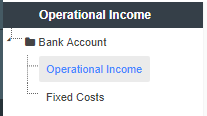

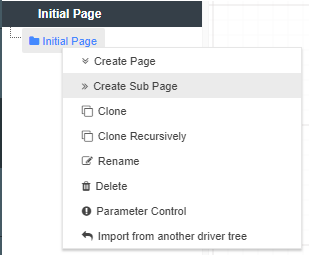

Driver Model Pages allows driver models to be broken up into different areas. Although smaller driver models can easily be accomodated in a single page, breaking the driver model up into multiple pages makes it much easier to understand the driver model. Examples of breaking driver models into pages include things like processing areas or even different business areas. Continue driver model evaluations from one page to the next is handled through

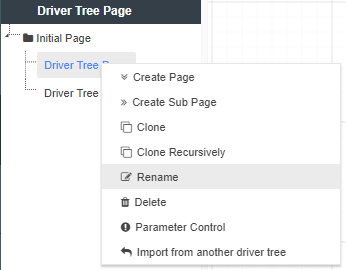

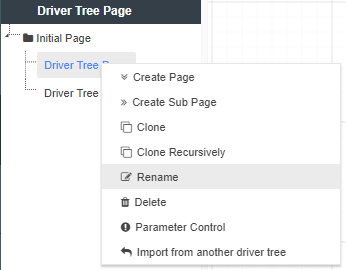

There are several different ways to create a new Page. We can:

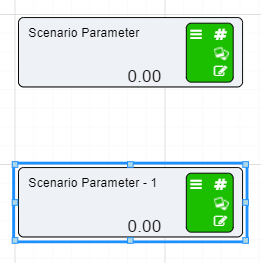

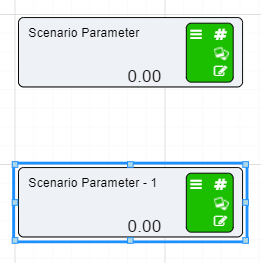

When a page is cloned, the source page and all it’s nodes are copied (the nodes are clones of the originals, not the actual originals - Akumen will automatically rename all the node names to ensure there are no clashing node names). Once cloned you can treat the new driver model page as a new page, meaning you can:

Whatever changes you make to the cloned page do not effect the parent page.

When creating new pages they can either be created on the same level as the parent page or as a subpage of the parent page. Creating a new subpage gives you a blank workspace to start with and visually links the new subpage with the parent page.

Recursive Cloning clones the selected Driver Model page and all of its children pages creating an entirely new version of your Driver Model.

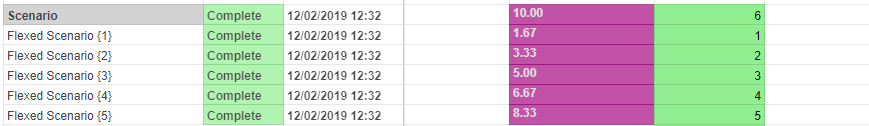

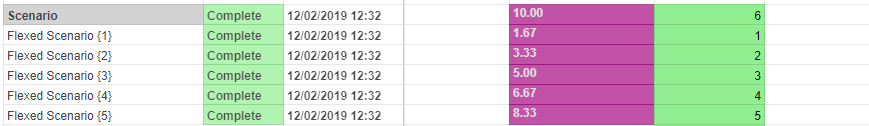

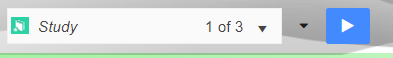

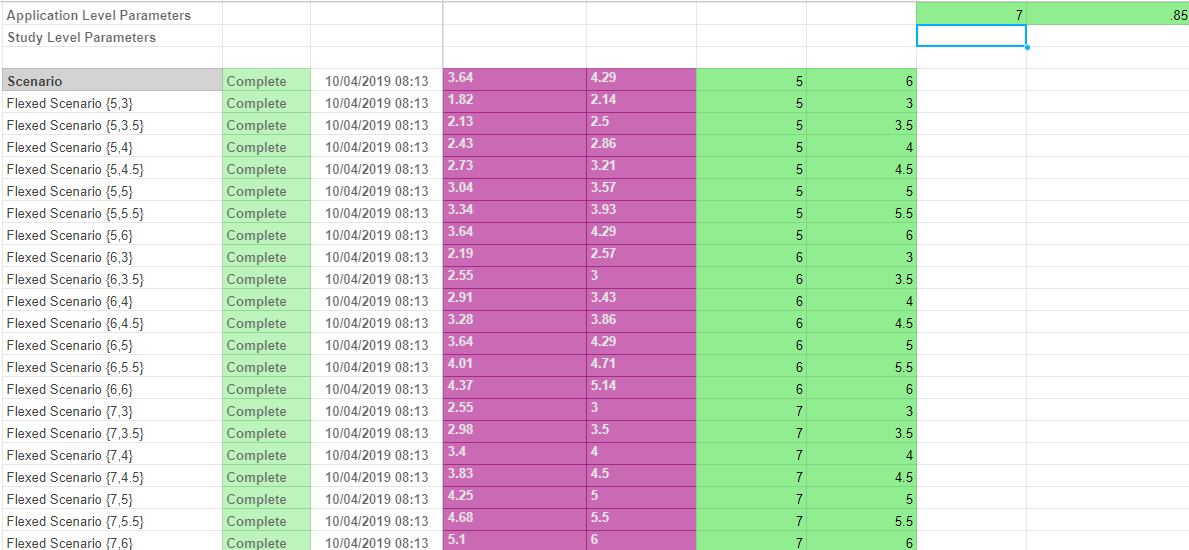

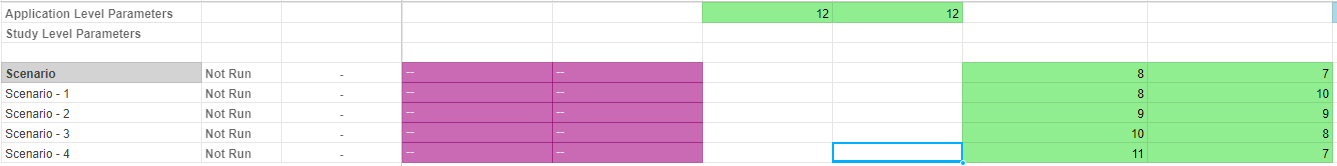

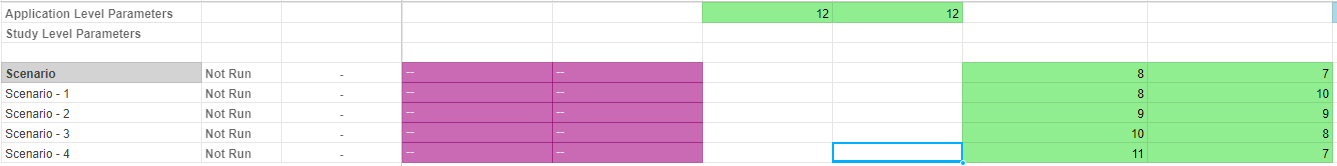

We provide the option to export data from a driver model to Excel. Only scenarios that have been executed will appear in the exported Excel document.

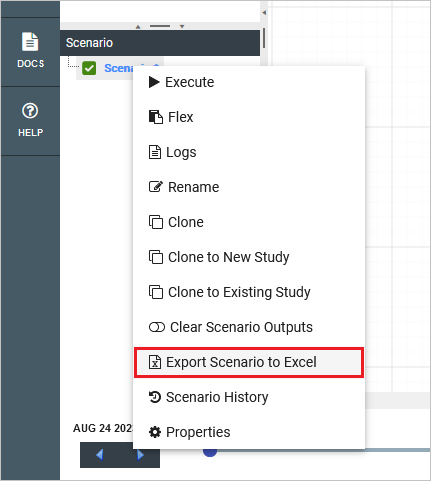

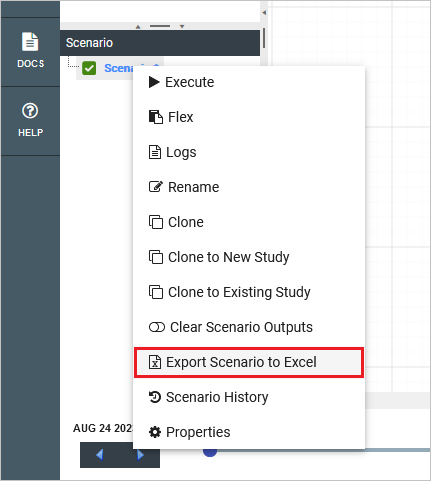

There are three ways to perform an export of VDM scenario data to Excel:

Model: Exports all studies and their associated scenarios to Excel. Only exported scenarios will appear in the Excel document.

Study: Exports an individual study and its associated scenarios to Excel. Only exported scenarios will appear in the Excel document.

Scenario: Exports an individual scenario to Excel. This is performed by right-clicking the scenario and selecting Export Scenario to Excel.

Note that this option will only appear if the scenario has been executed.

There are many different node types that will aid you in building your Driver Model.

These node types are:

Now that we have covered the basics of driver models we are ready to begin understanding the different nodes, how to connect them to create flows and build models and systems.

To do this we will use very simple examples which demonstrate a node’s capabilities.

A parameter group is used to arrange node results into named output tables. By default, node values that are published to results will appear in the “appoutput_vw” table. By specifying a parameter group, these node values can be added into either a single or multiple output tables.

Parameter group names are added to multiple output tables separated using a comma delimiter. For example, a node could be added to parameter groups “test1” and “test2” by specifying “test1,test2” in the node’s paramater groups field.

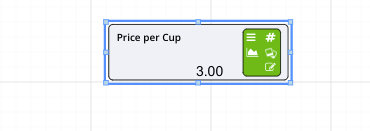

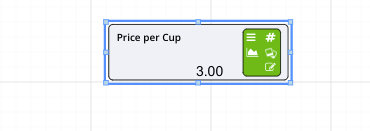

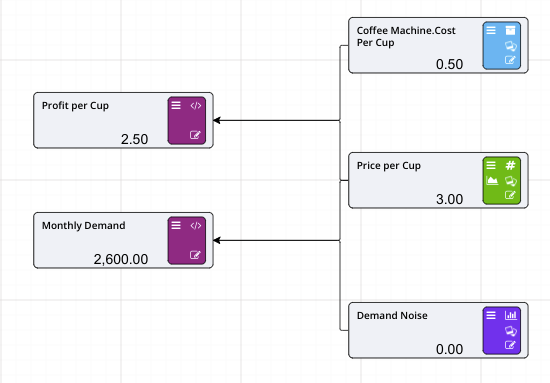

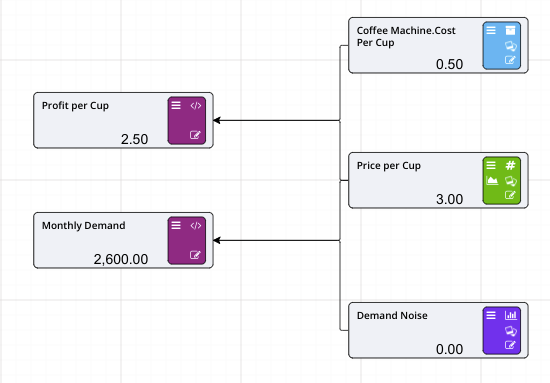

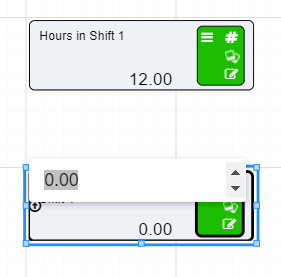

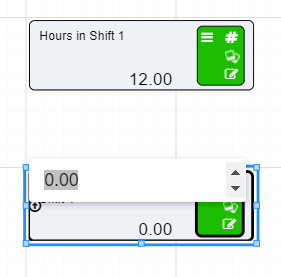

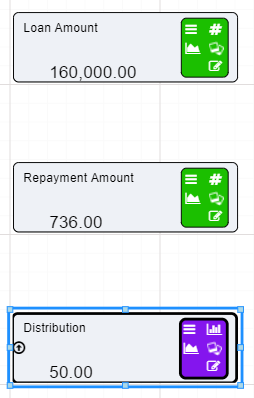

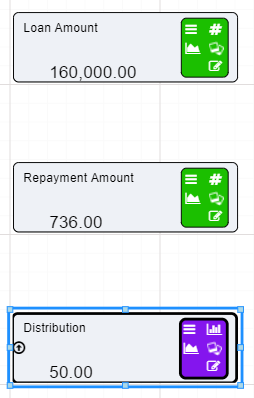

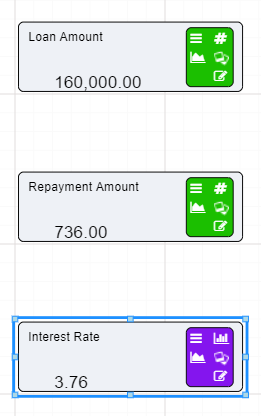

There are many different node types that can be used to create Driver Models. The simplest of these nodes is the Numeric node. Numeric nodes hold onto values to be used in the application. They are placeholder nodes. They are assigned values that will not be used until they are attached to a:

They are also excellent at demonstrating how to edit, connect, and use nodes to create a Driver Model.

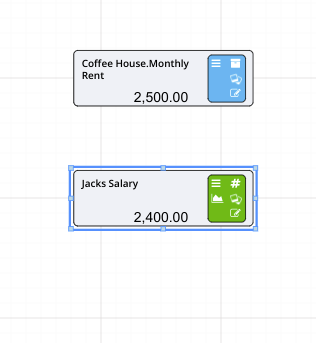

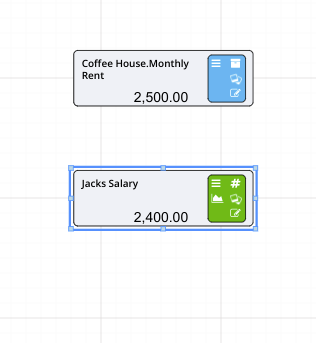

To show you how to use a Numeric node we will set up two numeric nodes in preparation for the following slide on Calculation nodes.

To set up a Numeric nodes:

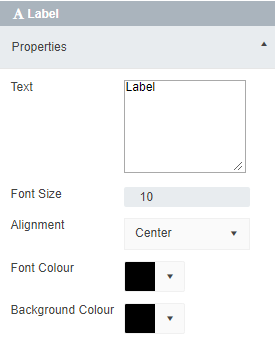

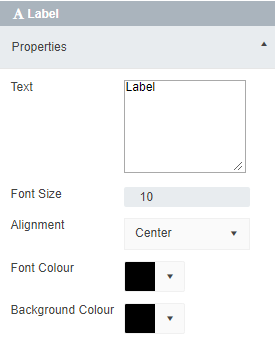

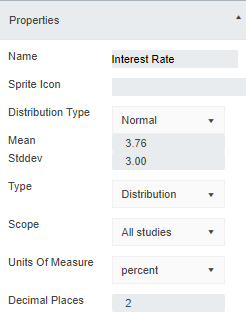

You probably noticed that in the editing window of the Numeric node you could set:

All of these things can be changed in a Numeric node. For example, if we were going to set a value of $50, we would just have to set the units of measure to $ and make sure that the number of decimal places visible was set to 2.

This list in the editing window is the same for the following nodes:

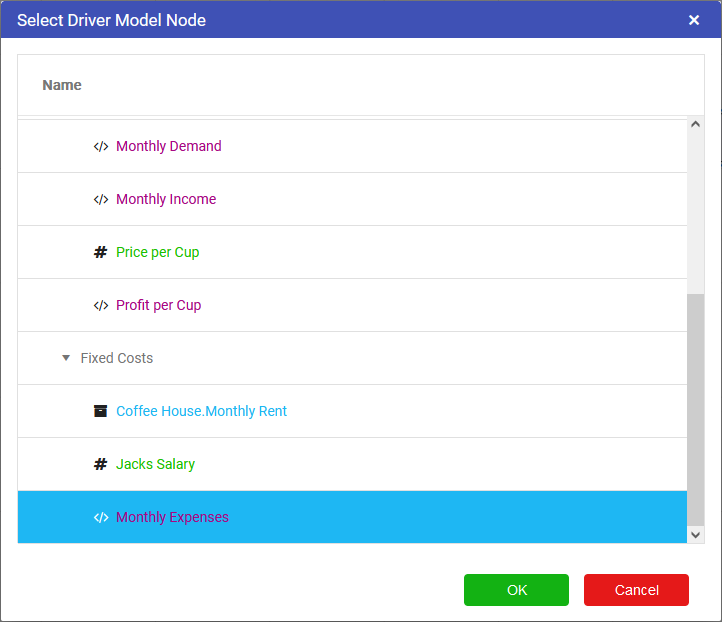

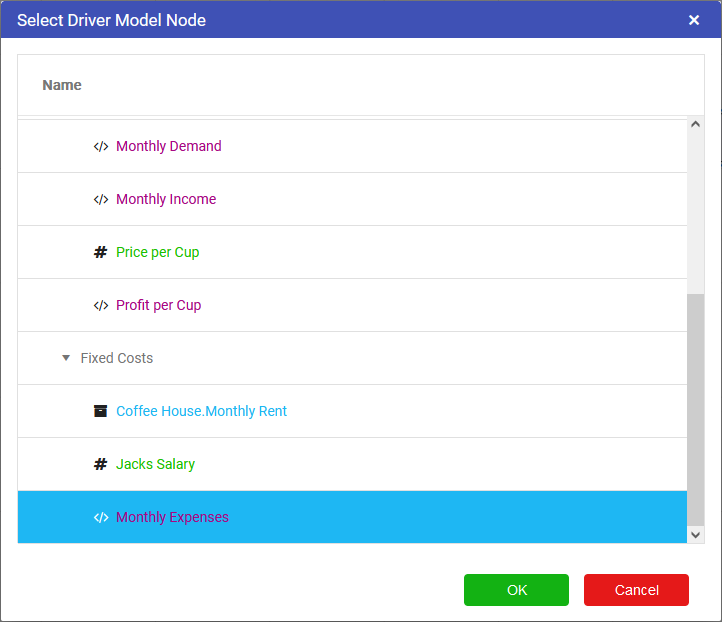

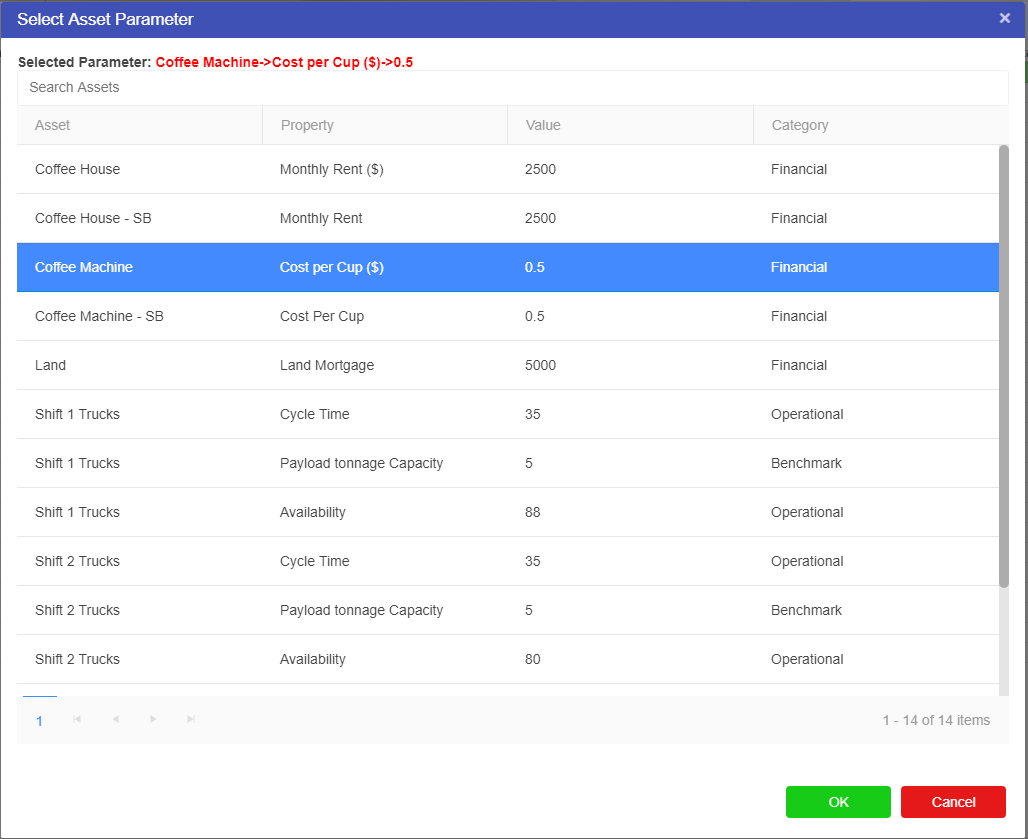

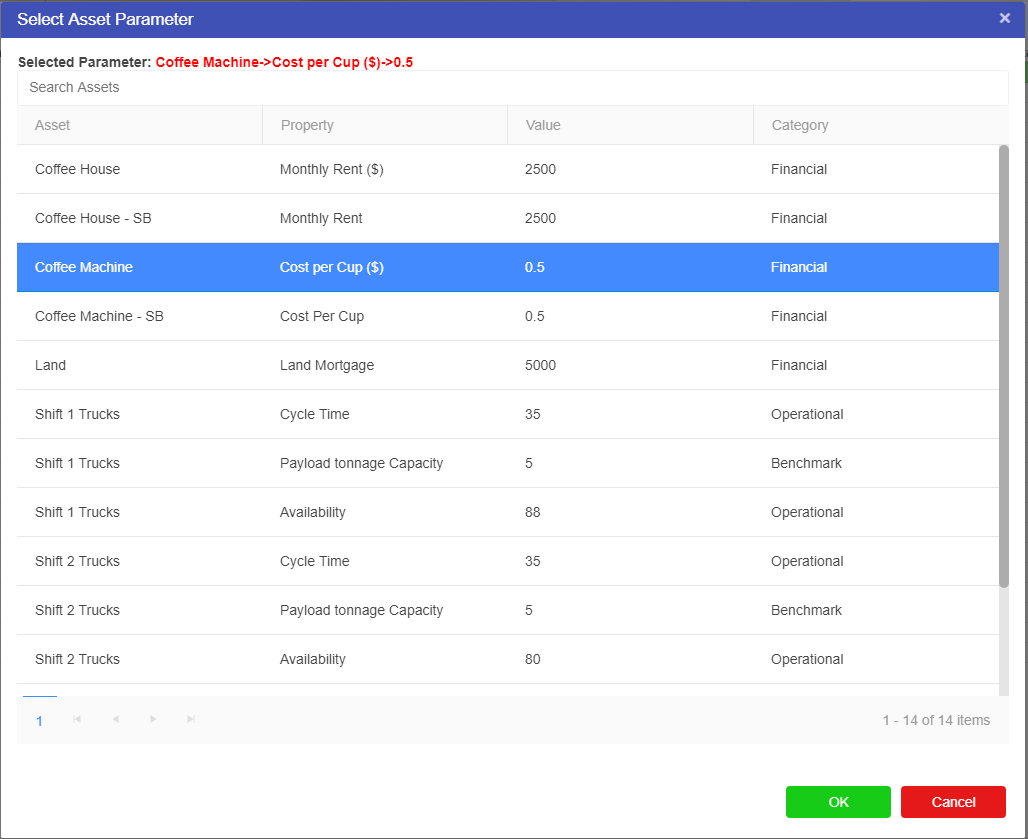

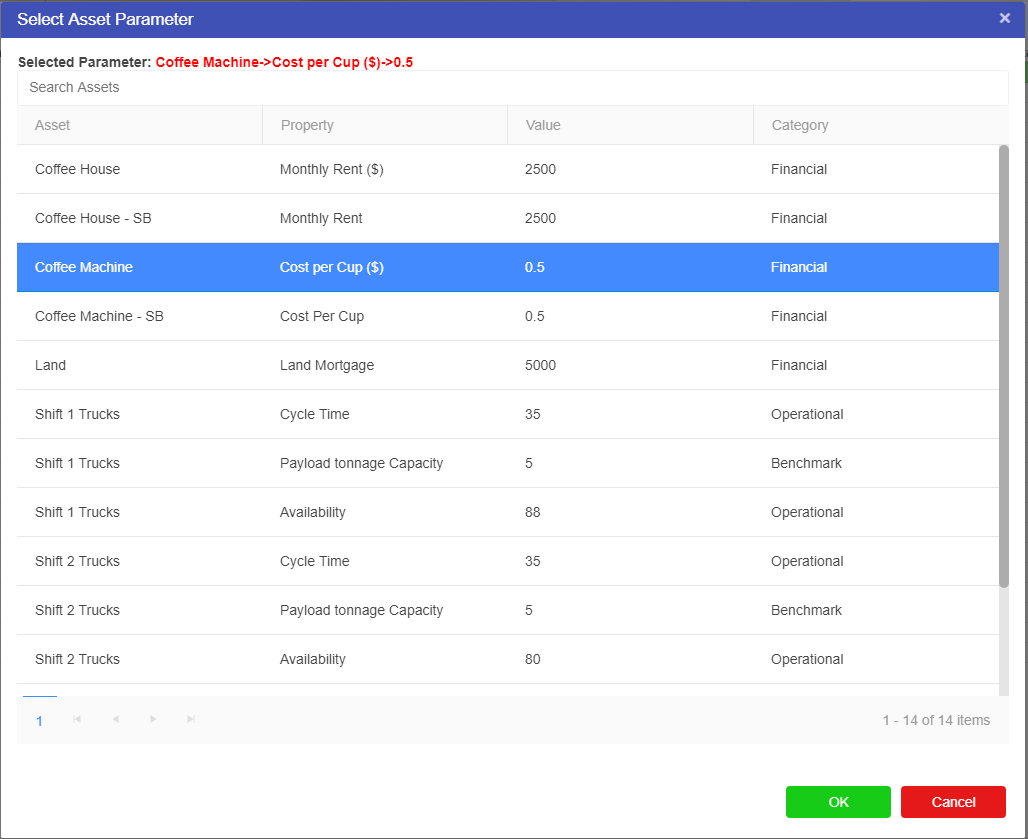

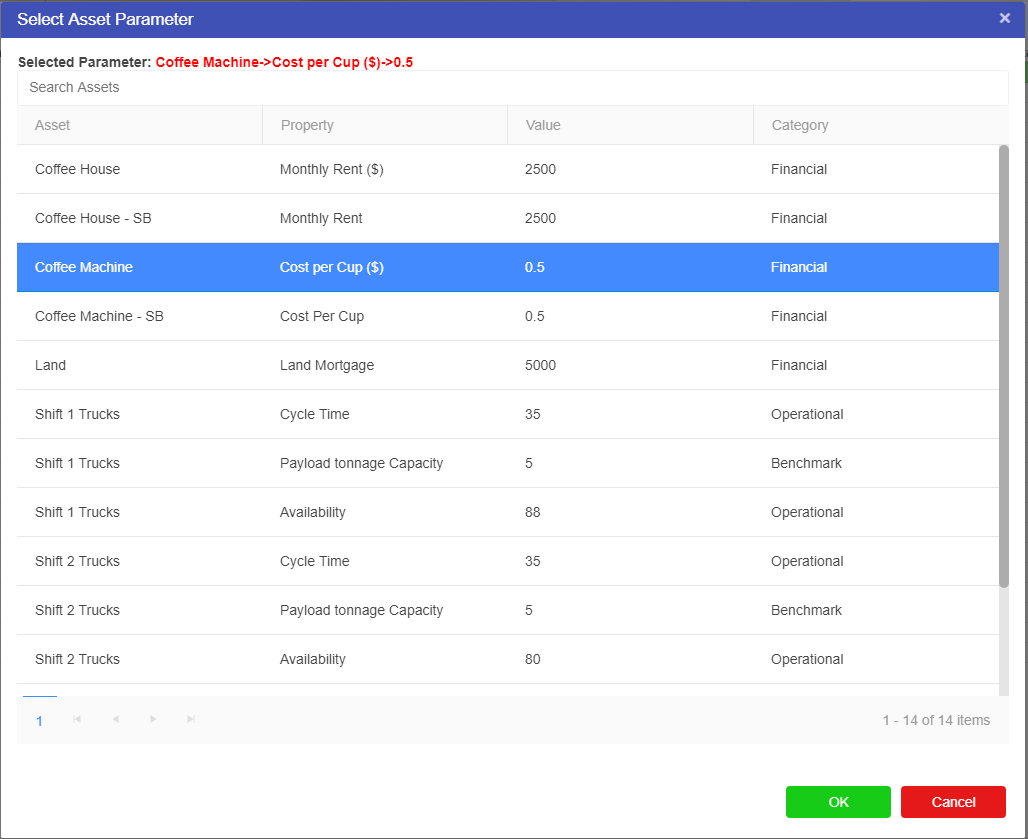

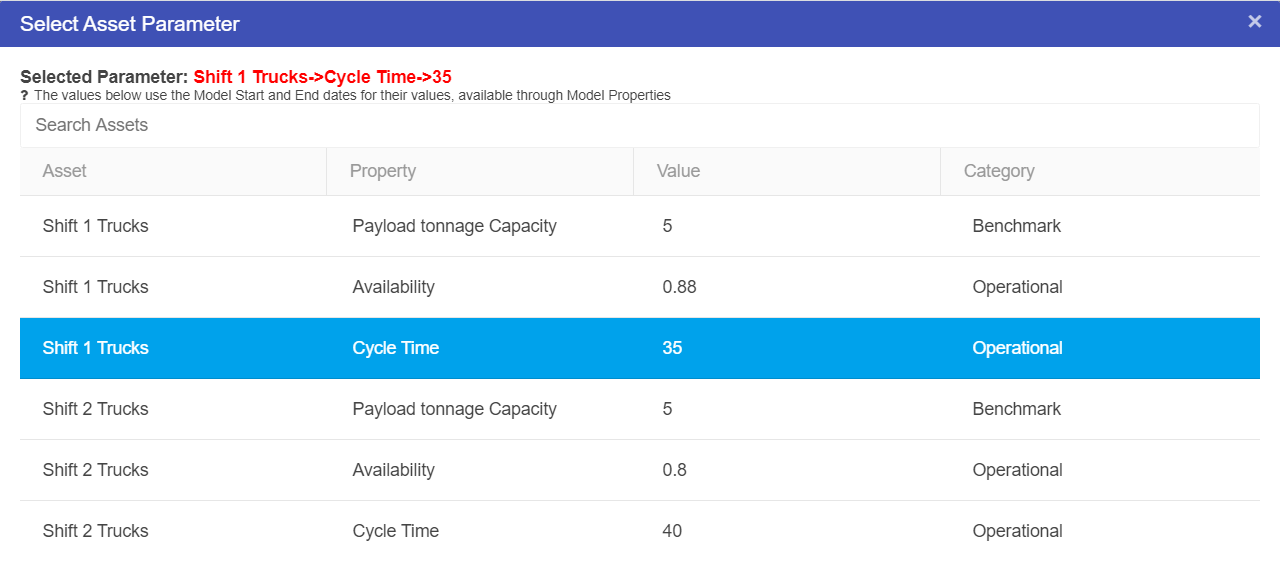

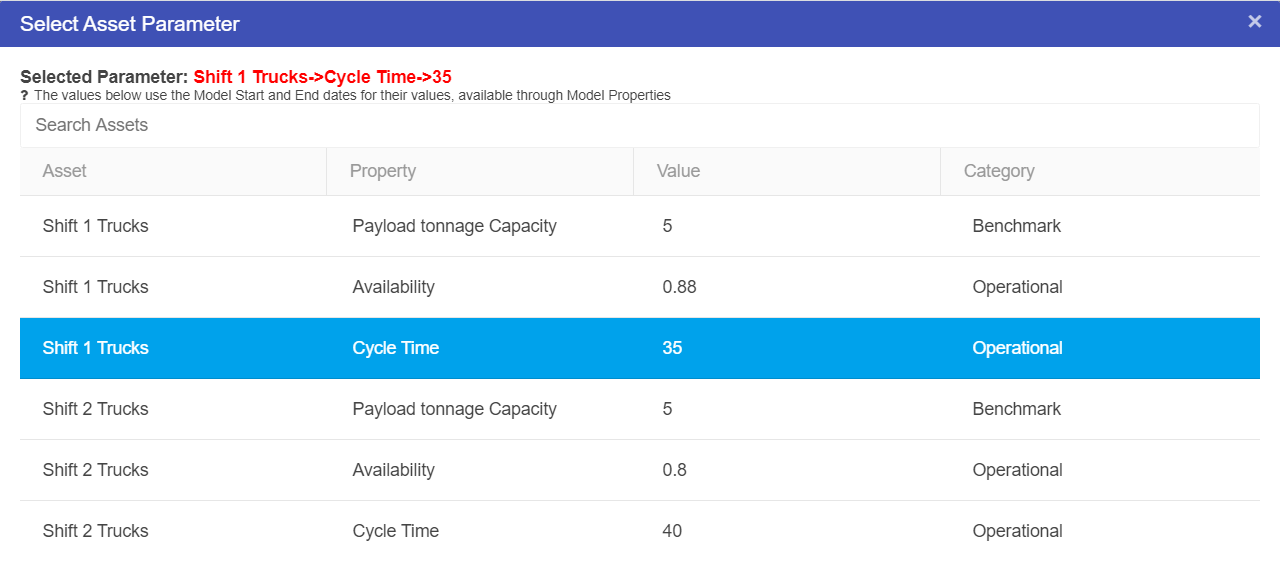

The Asset Parameter node is the connection between the Asset Library and Driver Models. By using an Asset Parameter node users can get values stored in the Asset Library and bring them into the Driver Model. Since Asset values cannot be changed outside the Asset Library these values will remain the same during scenario analysis.

Click here to learn more about the Asset Library.

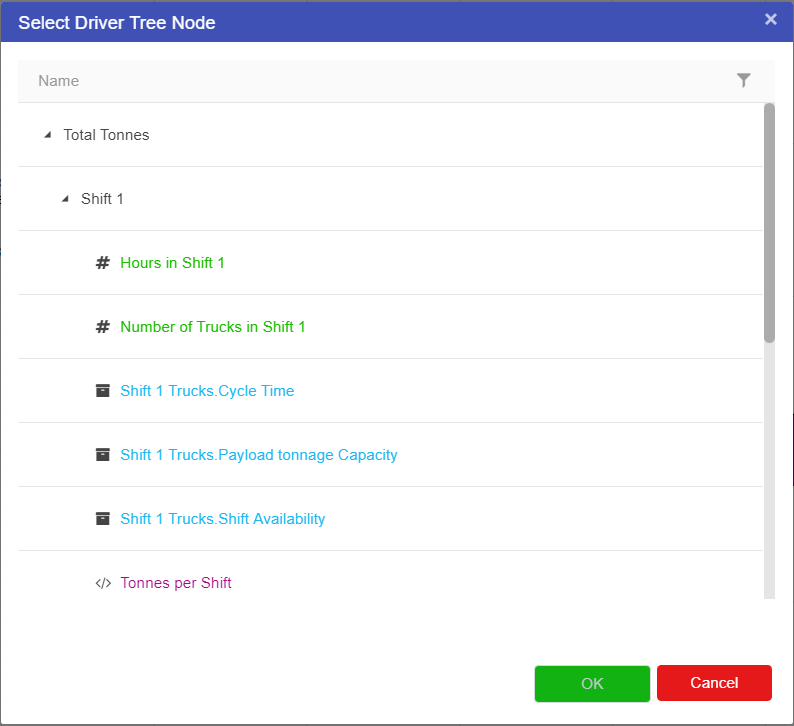

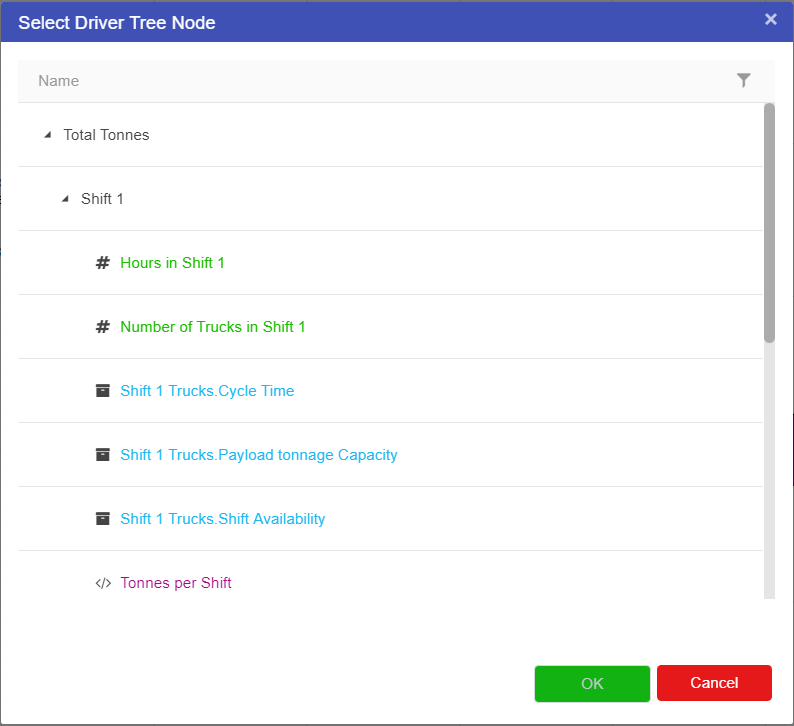

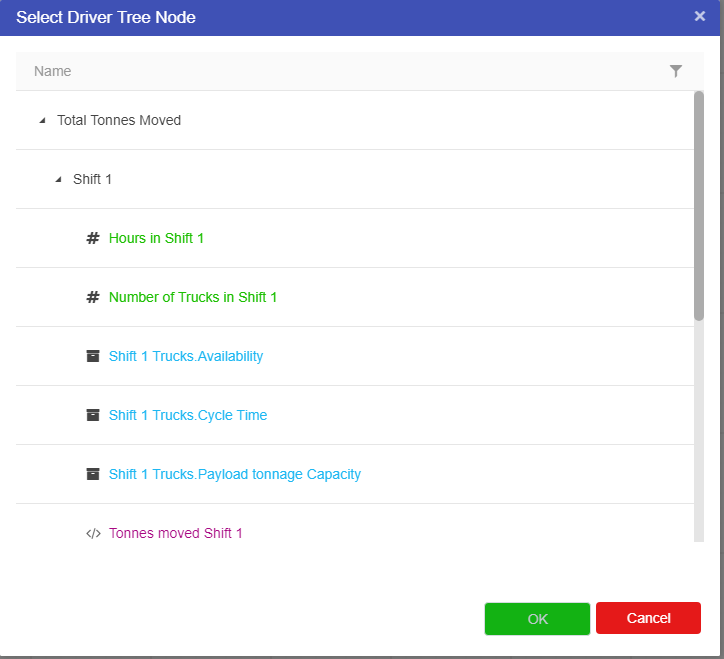

To set up an Asset Parameter node we should first have an Asset to link to the node. Once you have this Asset you can link it to the Asset Parameter node.

To do this:

onto the workspace.

onto the workspace.

The Asset Parameter node will appear blank (see screenshot below) until an Asset is selected.

You now have an Asset Parameter node.

Although asset parameter nodes cannot be changed from the driver model, they can be converted into Scenario Input parameters to see the effects of changing the value throughout the model. The best practice for this is to leave the asset parameter as is in the “base” scenario, then cloning the scenario and converting the node to a numeric, as shown in Changing Node Types.

Remember you cannot edit an Asset value outside of the Asset Library. If you do wish to edit the value of an Asset you will have to go to the Asset Library. Editing the value in the Asset Library will change the value for every model using this value.

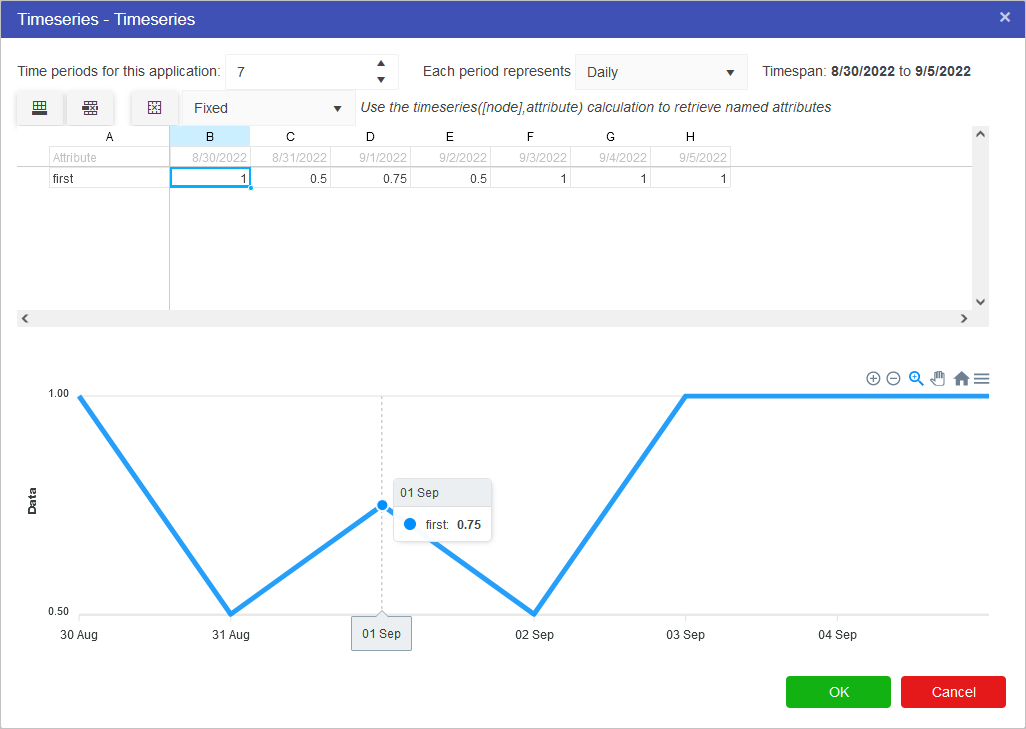

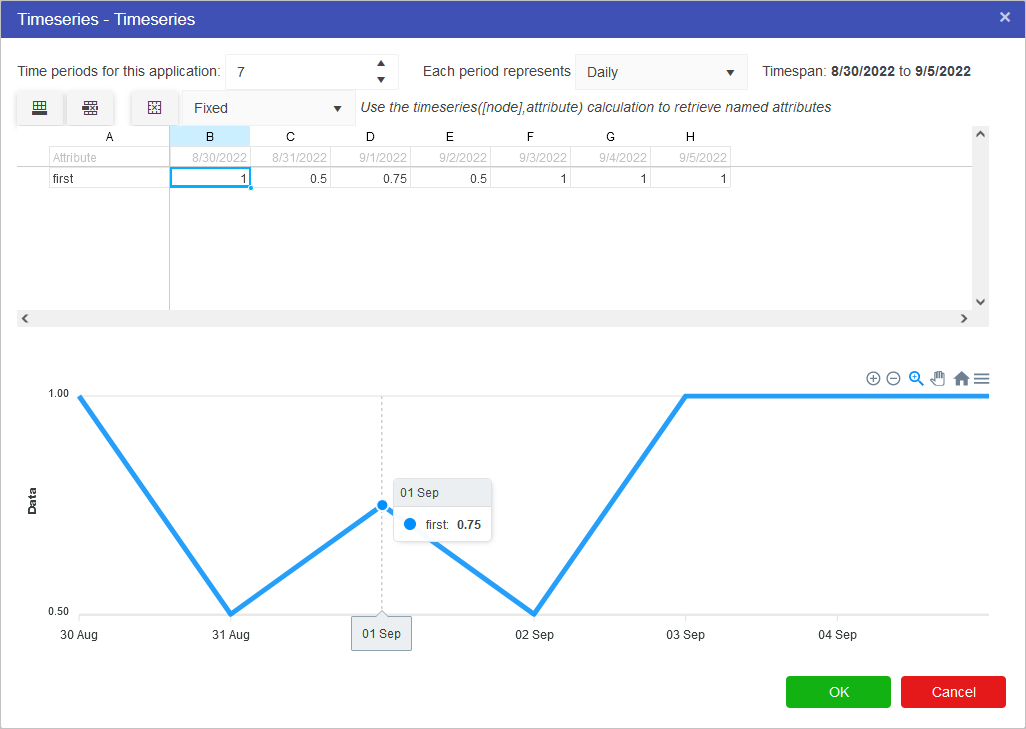

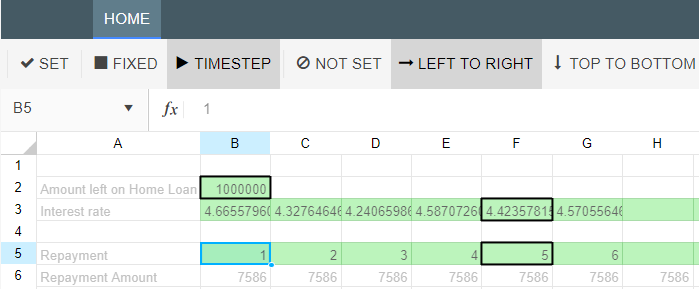

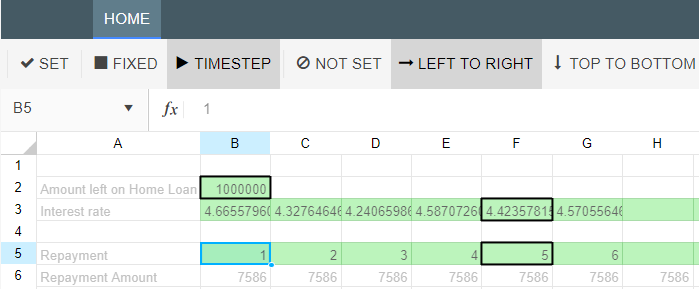

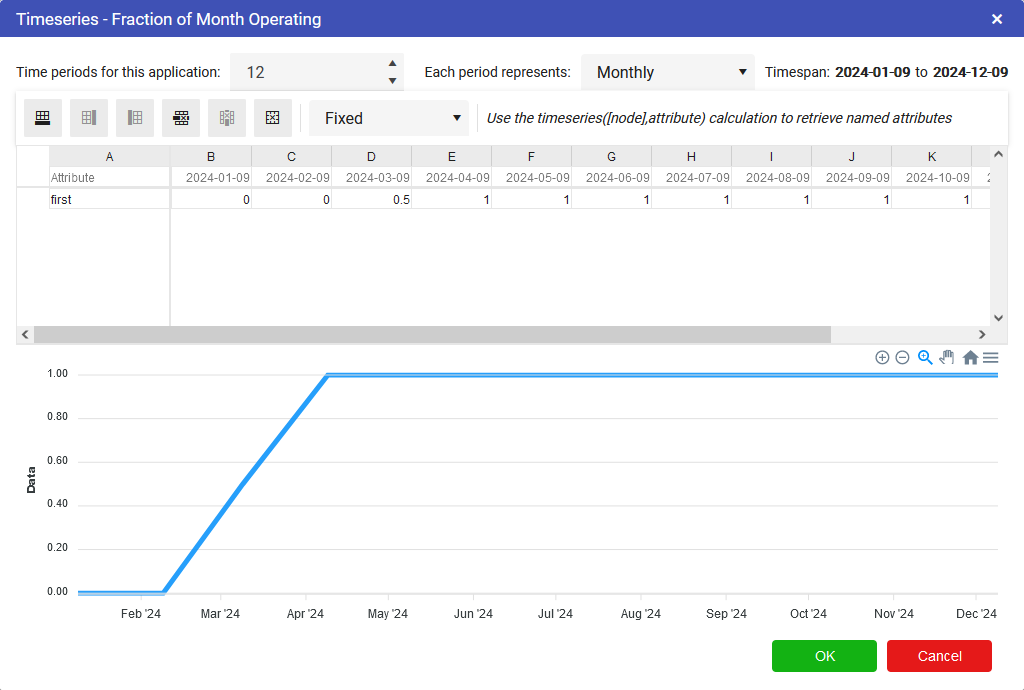

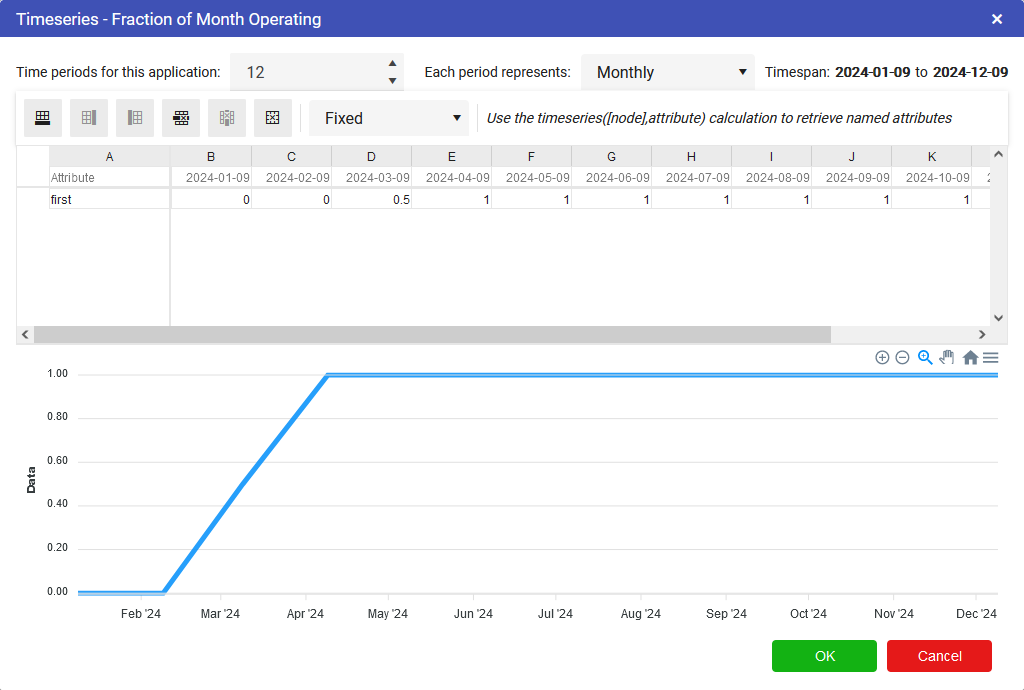

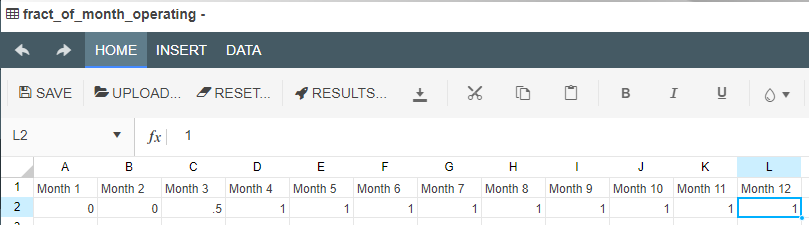

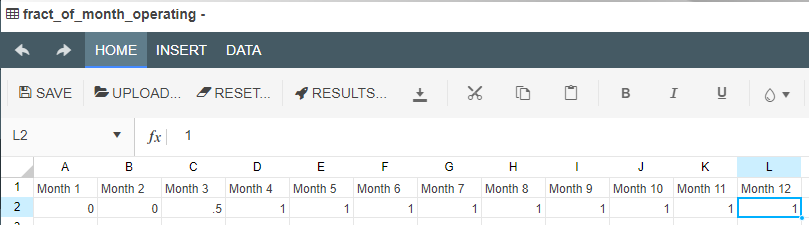

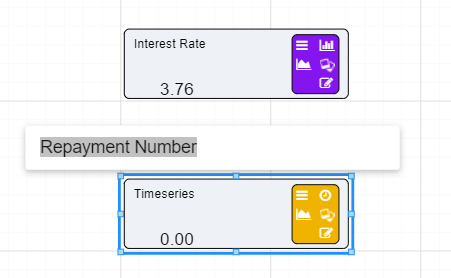

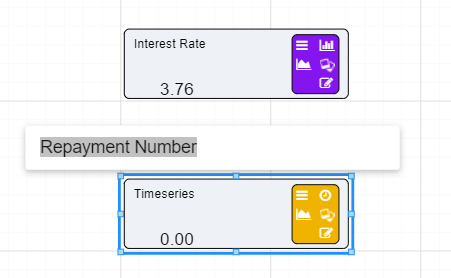

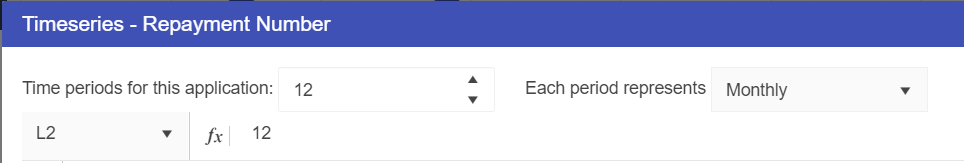

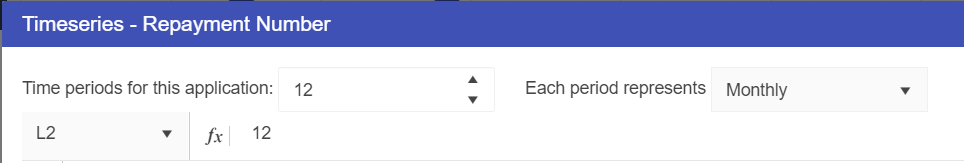

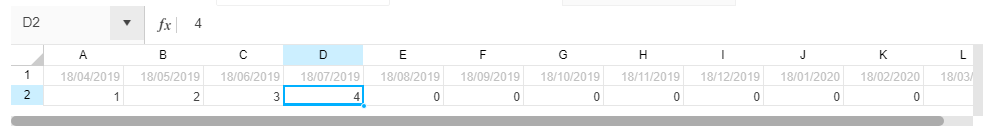

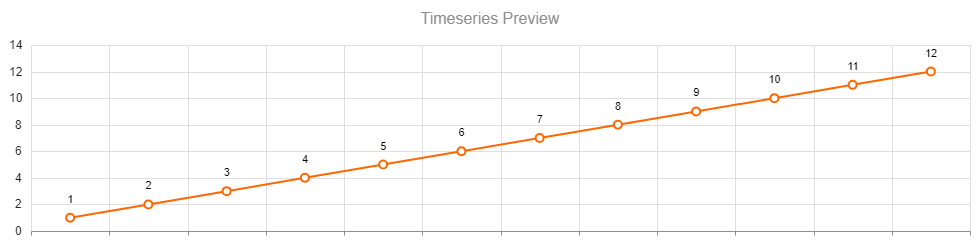

These nodes allow models to predict outcome values for the model over time. They allow users to define a time period and assign values for each of those time periods. The timeseries node will need to be connected to a Calculation node before the values in the timeseries node will affect the Driver Model.

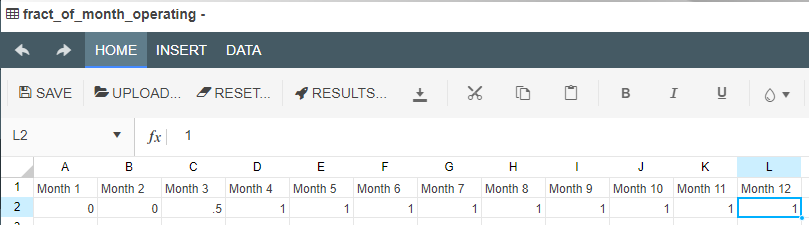

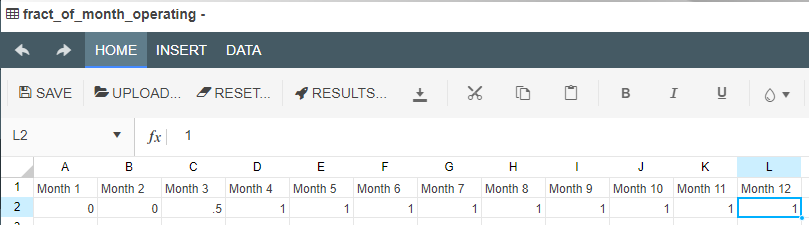

Prior to creating any timeseries nodes, the number of periods and reporting period (below) as well as the start date, can be defined by changing the model properties. In addition, it is sometimes desirable (especially in finance VDMs), to have different scenarios represent different time periods. This can be done by setting the Start Date in the scenario properties.

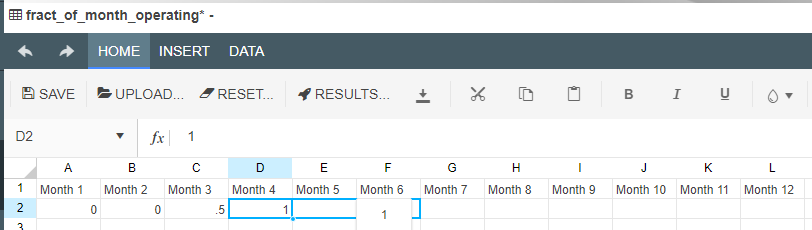

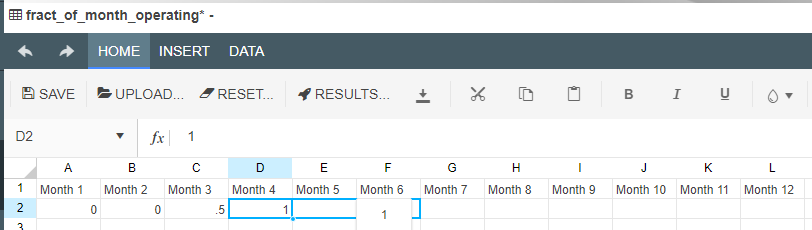

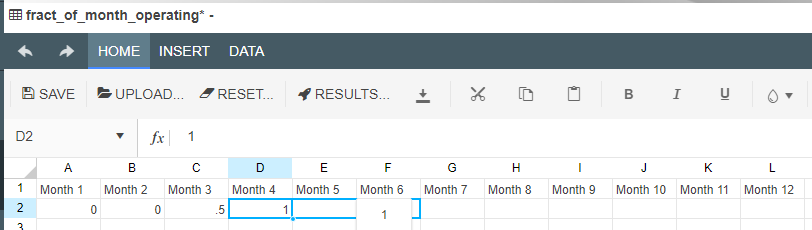

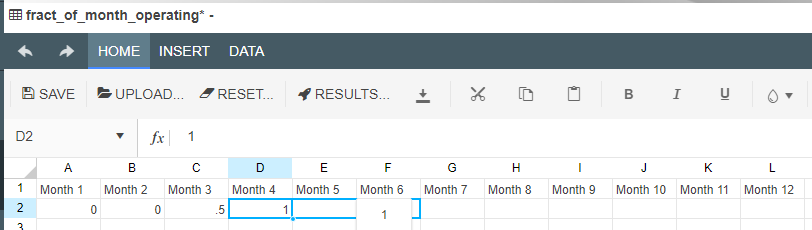

When setting up a timeseries node, users can define:

If we, for example, wanted to look at the the value of gold over the course of the week we would put in:

Note that the values specified for the number of periods and period type will affect the entire model. If you had two timeseries nodes on the page, altering the number of time periods would affect both nodes. This setting is also available in the model properties.

If we knew that the value fluctuated throughout the week between 50% of the value at the start of the week and 100% then we could plan for the worst case and the best case by using scenarios and in the second scenario we could change the timeseries values to 0.5 for 50%.

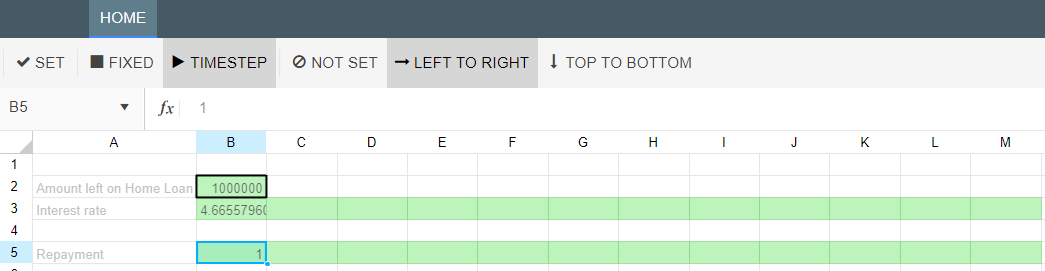

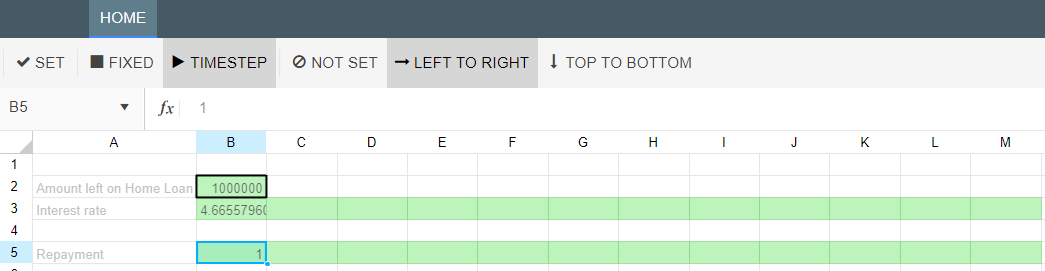

To set up a Timeseries node:

onto the workspace.

onto the workspace.

Whenever a new Timeseries node is added to the Driver Model, a slider will appear at the bottom of the screen. The number of periods displayed in the timeslider are the number of periods specified in the timeseries node OR the model properties. To see the effects over time, simply slide the bar to the next time interval.

Multiple different timeseries sets can be defined per timeseries node. The buttons highlight below control this functionality. From left to right, these options are:

The dropdown menu contains options for Fixed, Interpolate and Last Known Value. These work as follows:

The last day of the month will always be selected if the period increment is set to “Monthly” or “Quarterly” and the model start date is set to the last day of the month. For example, if the model start date is set to 28-Feb-2022 on a quarterly increment, the next period will be 31-May-2022 instead of 28-May-2022.

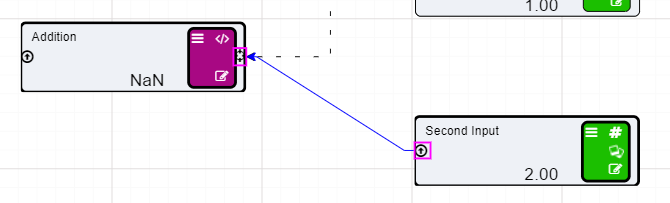

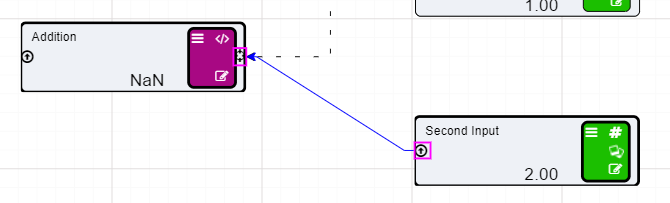

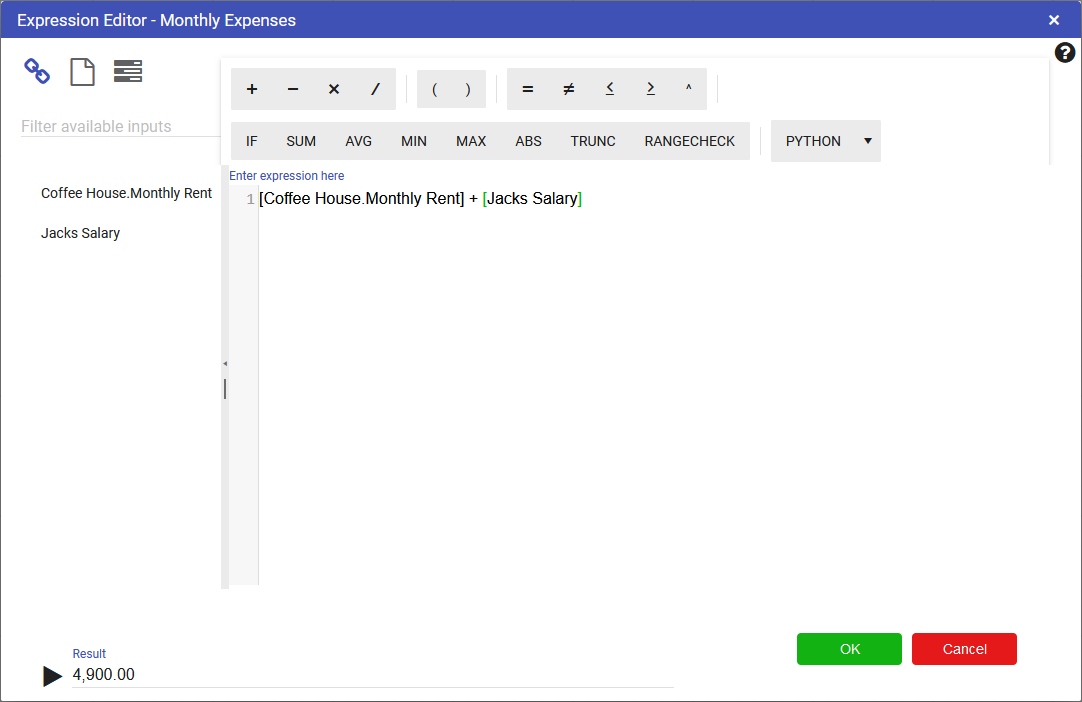

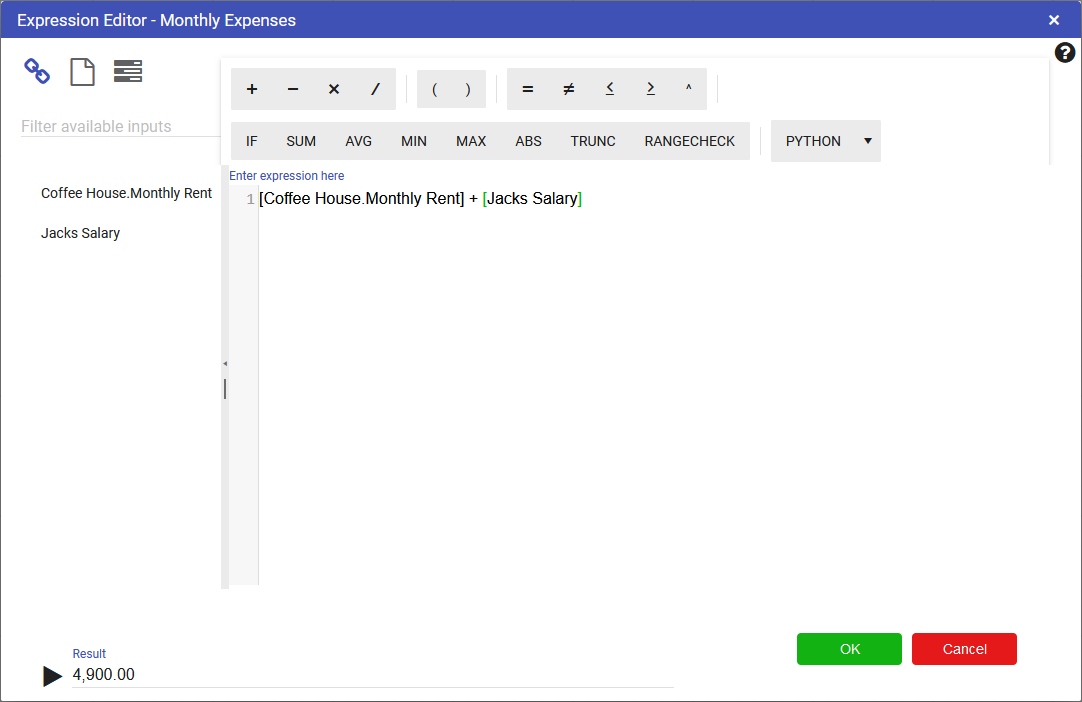

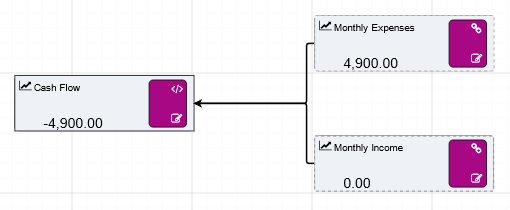

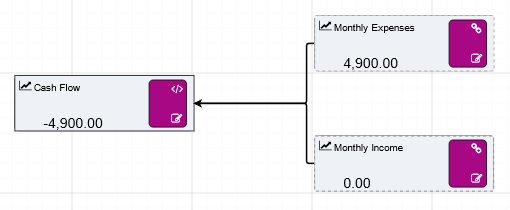

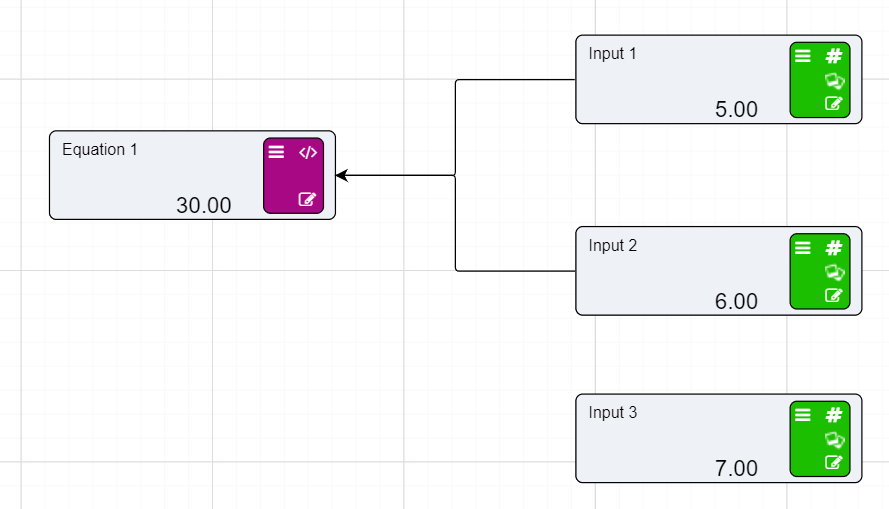

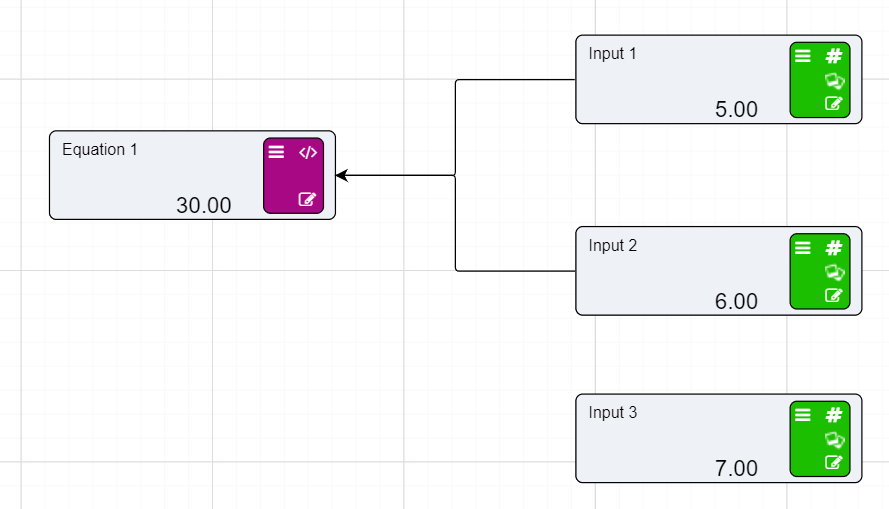

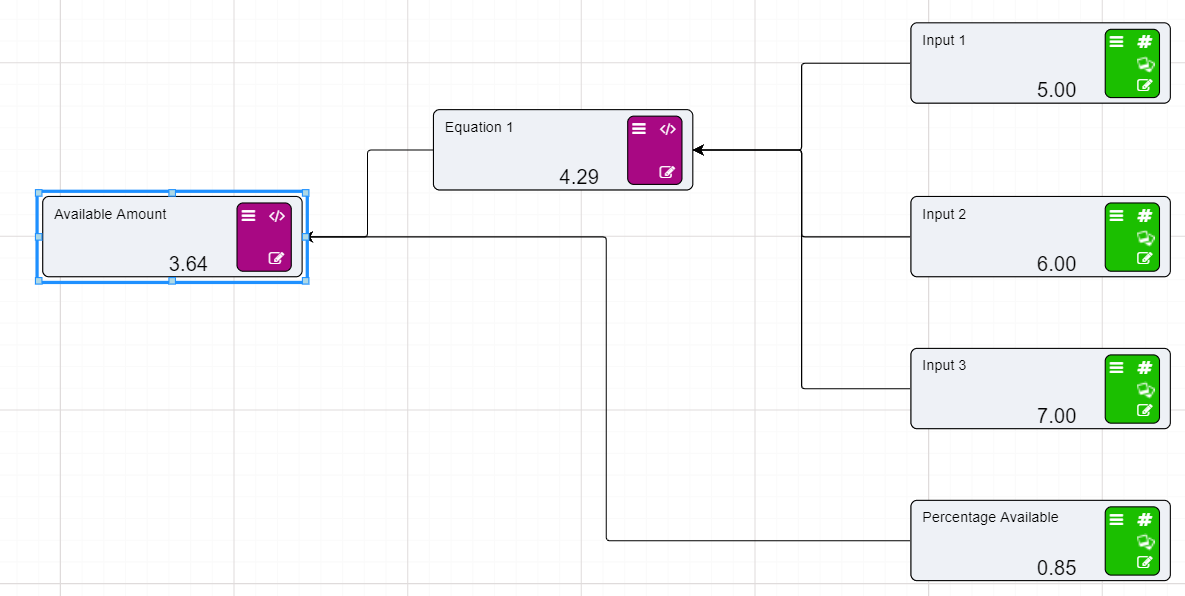

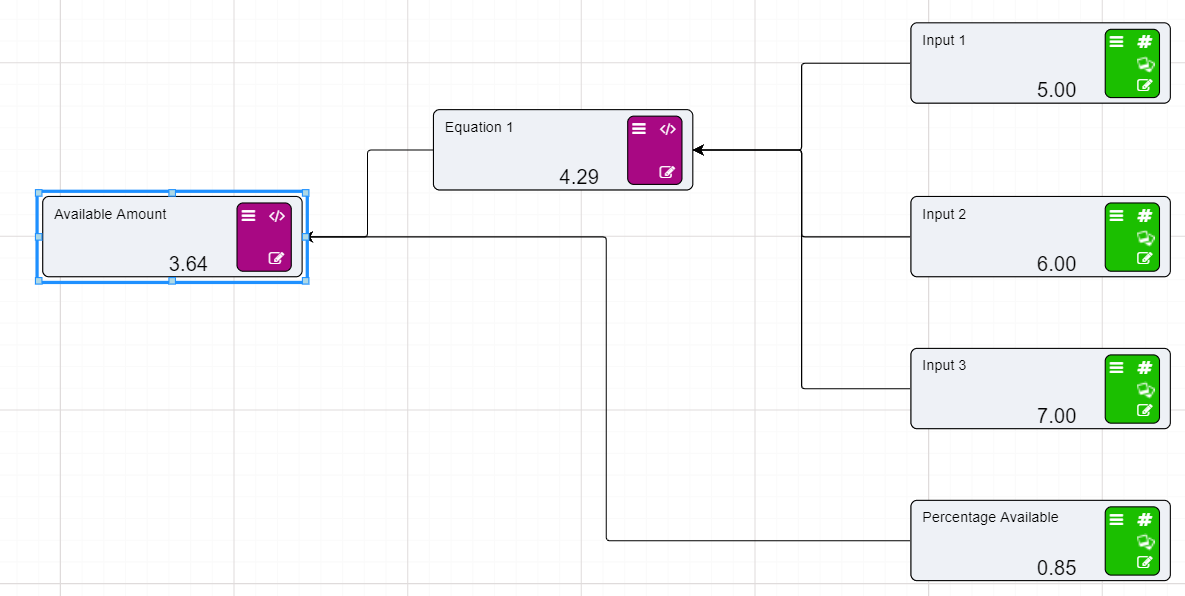

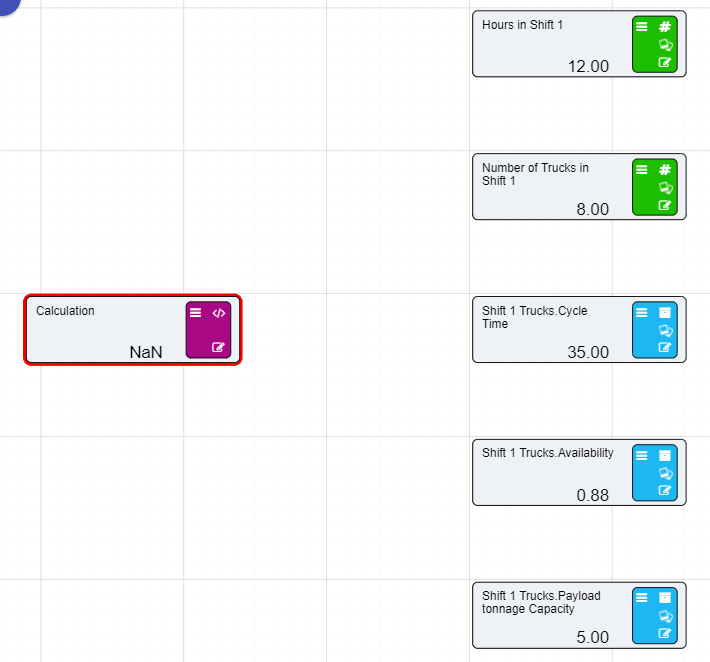

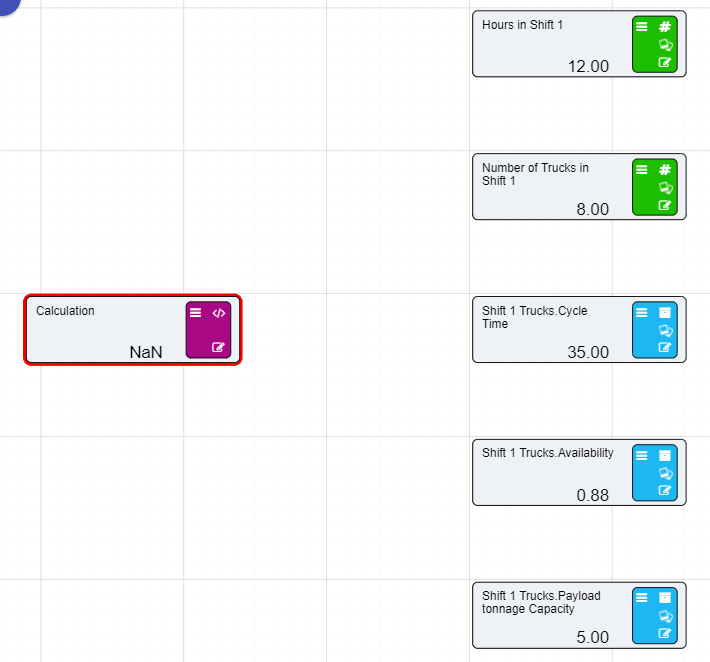

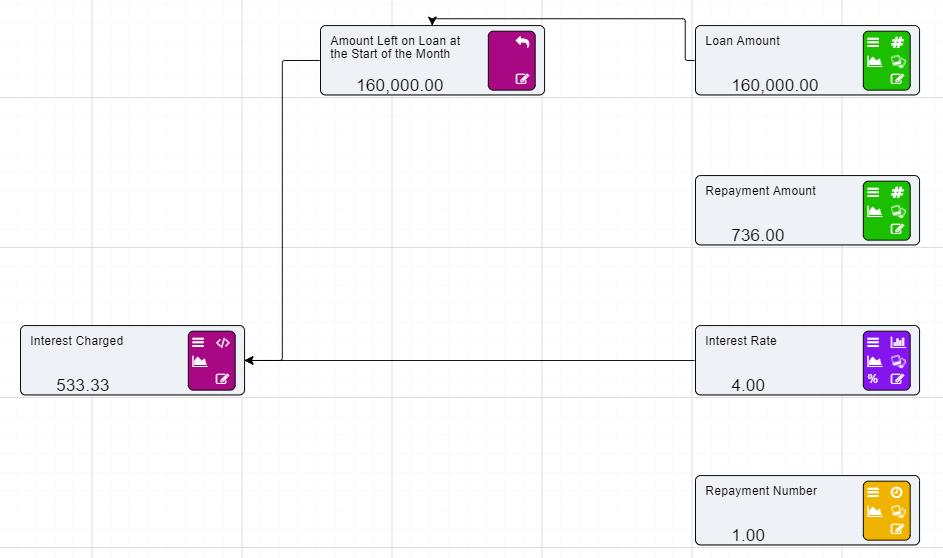

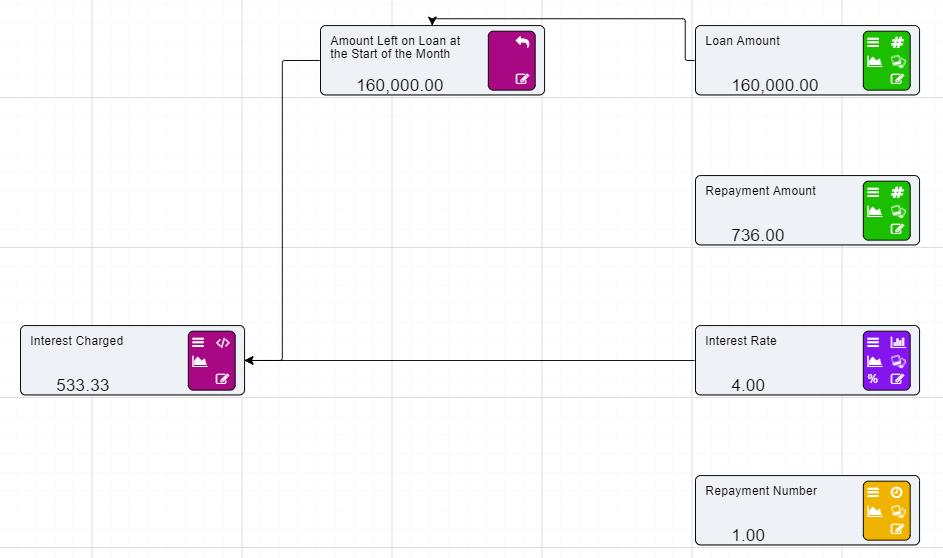

Calculation nodes are probably the most important node in a Driver Model. They take inputs from the different nodes and use their values in mathematical expressions to produce an output value.

Calculation nodes do not need to have inputs to work. Expressions and values without the input of the other nodes can be entered into a Calculation node to produce a value. However, anytime you wish to change that value you will need to go into the expression editor within the Calculation node and manually change the value. This is why we recommend that for any expression entered into a Calculation node there is always a node which holds the input value so that:

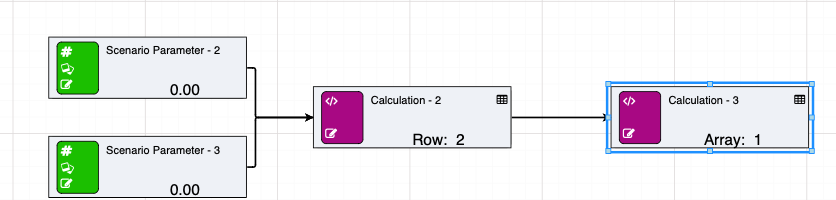

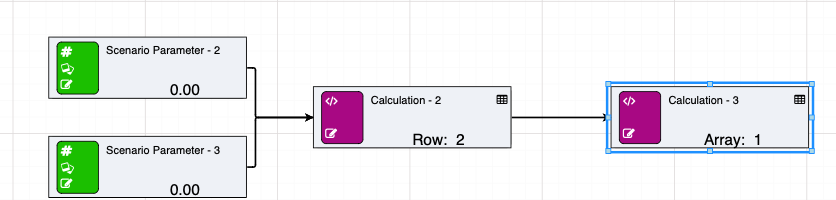

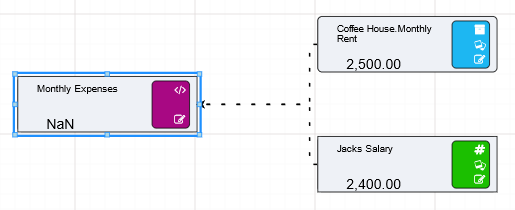

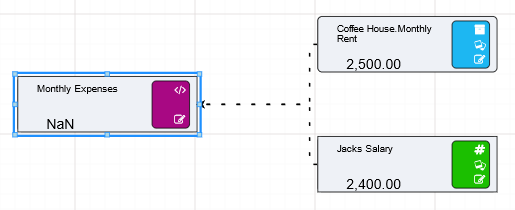

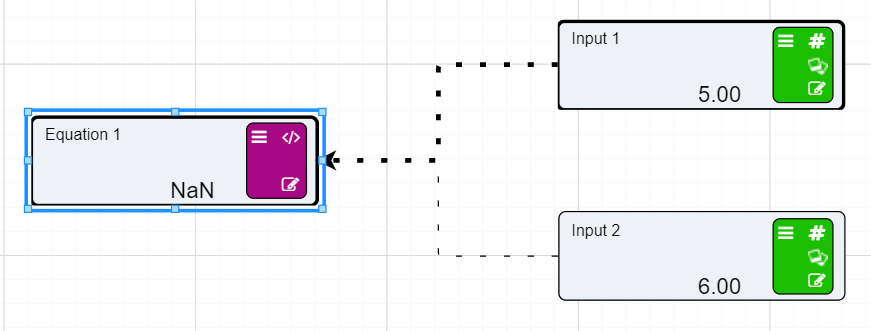

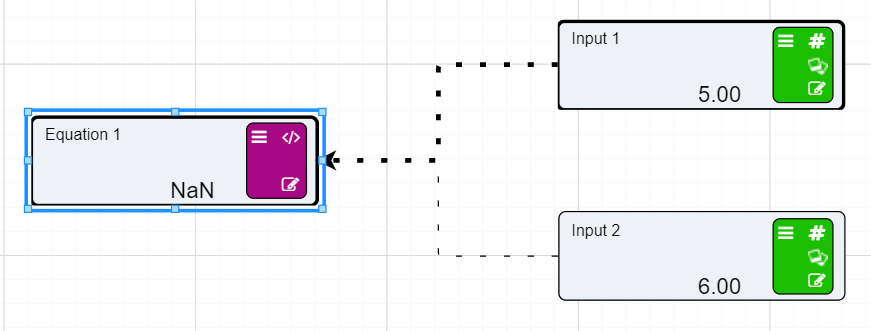

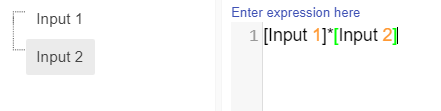

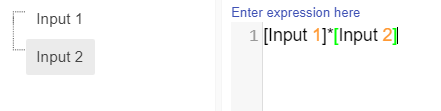

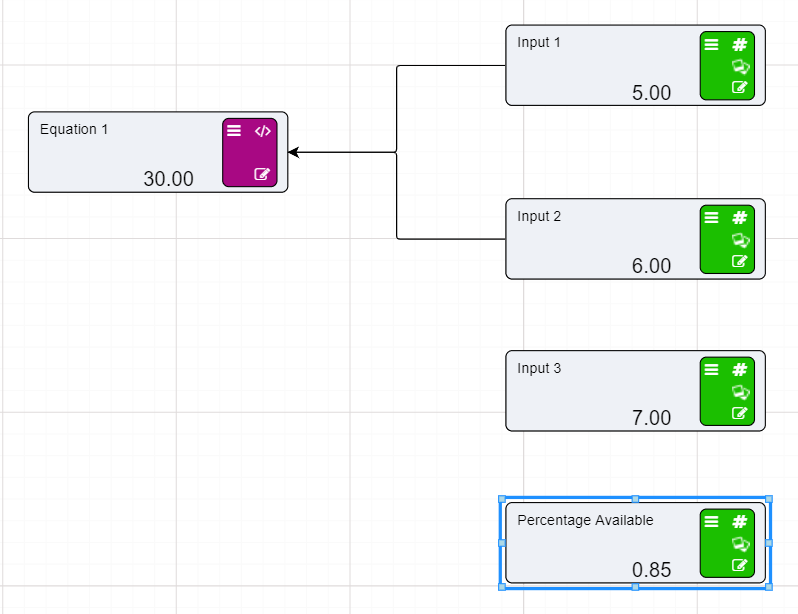

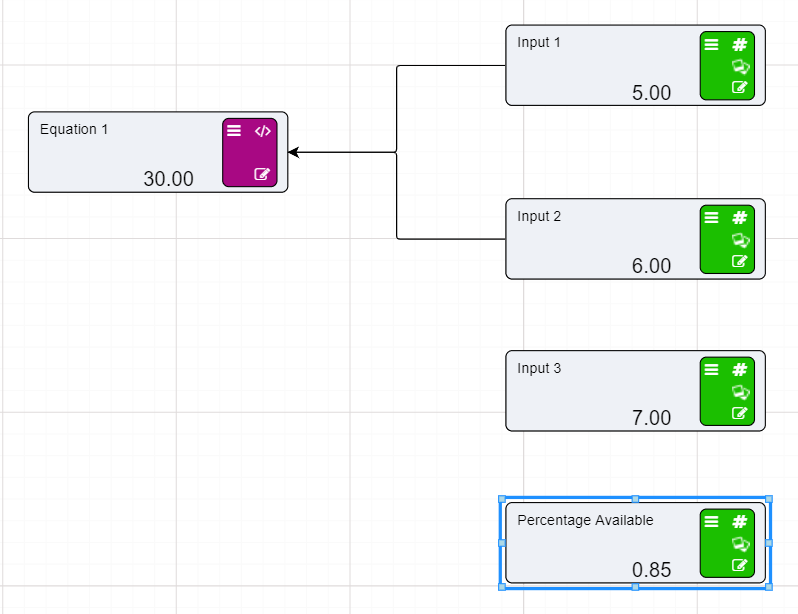

On the previous page we set up two Numeric nodes in a Driver Model workspace. We will now demonstrate how the Calculation nodes work by using the previously created Numeric nodes and putting them into an Expression in a Calculation node.

To set up a Calculation node:

Change the name of the Calculation node by clicking on the current name of the node and waiting for an editing bar to appear above the node.

This is how you would change the name of a node without going into the editing bar.

Note

Rename your Calculation node to Addition.

Now that we have set up our Calculation node we will need to add the inputs to the Calculation node.

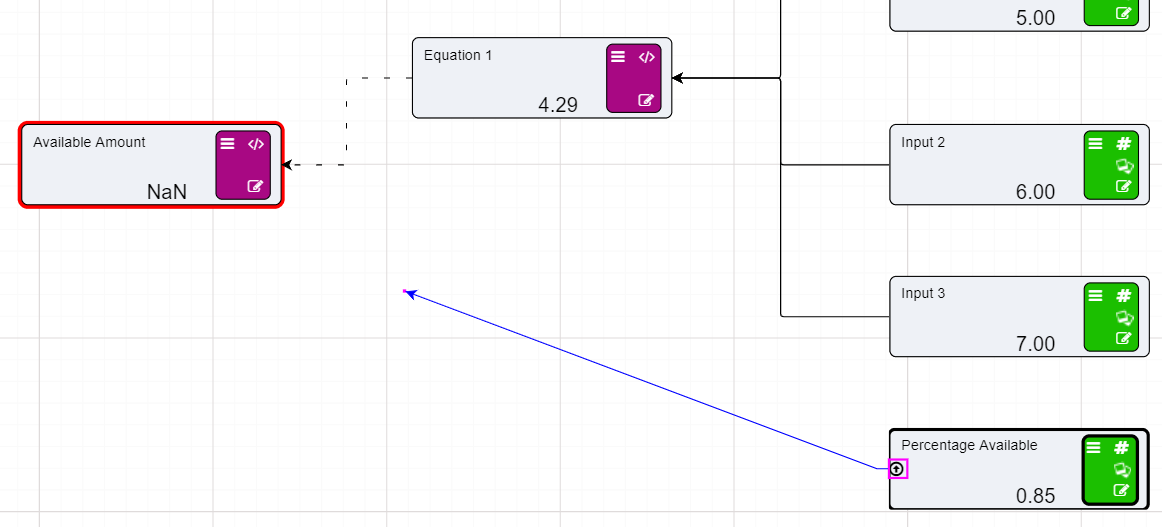

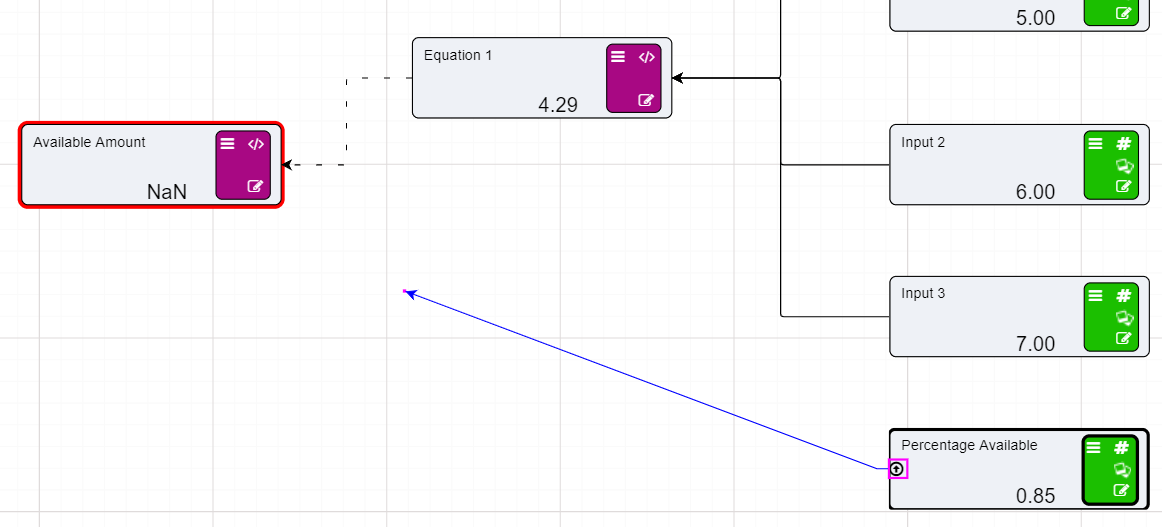

You will notice that the two Numeric nodes are now connected to the Calculation node by dotted lines. These lines mean that the inputs are connected to the Calculation node but they are not being used in the expression. The lines will become solid once we add these values to our expression.

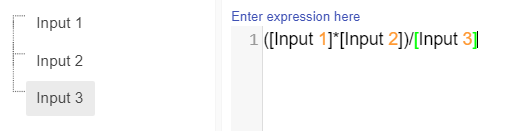

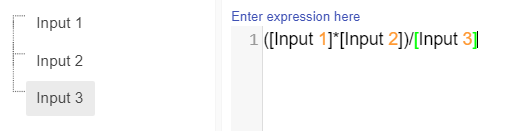

Expressions in Calculation node are written in a similar format to that of an Excel expression.

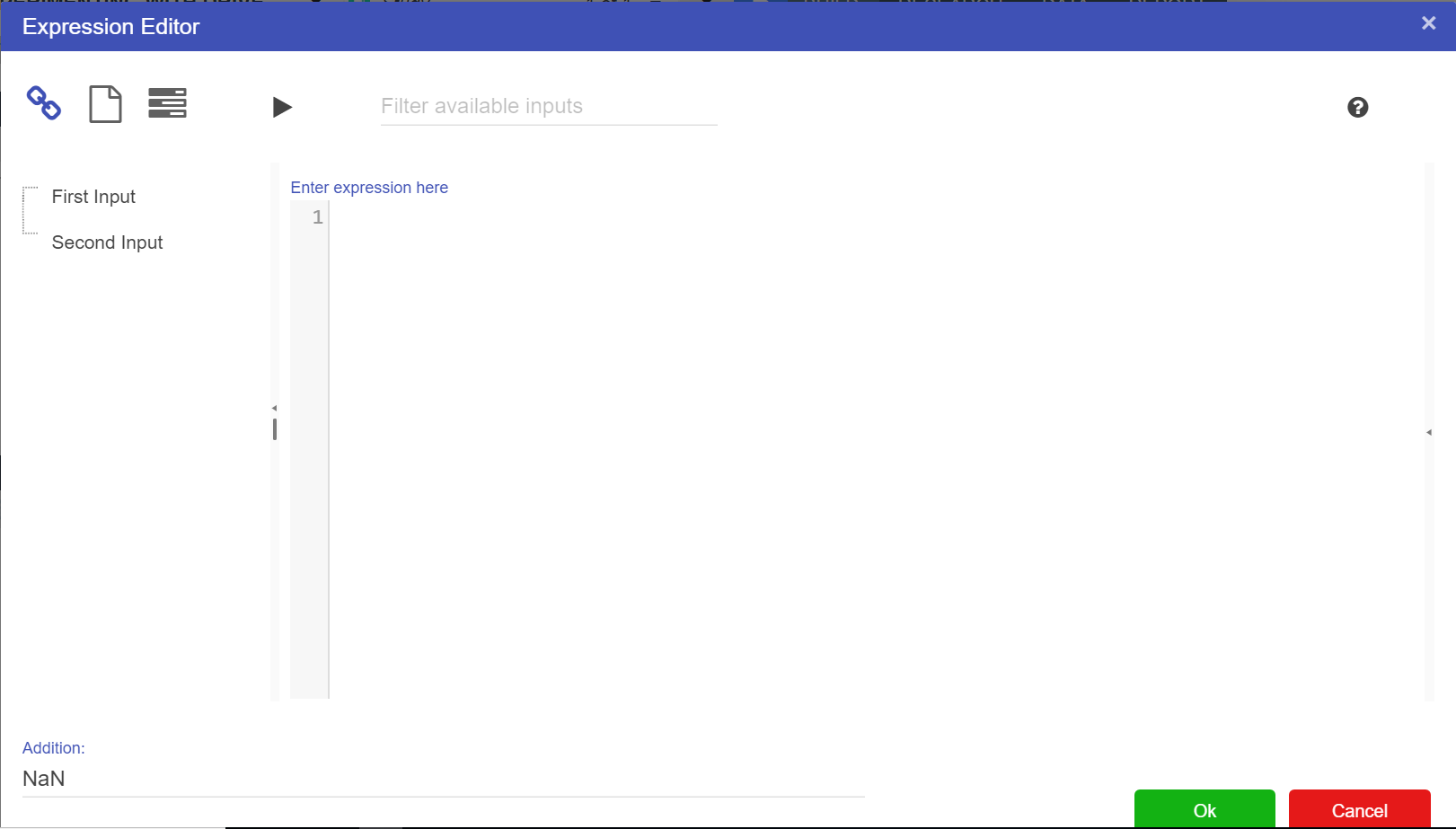

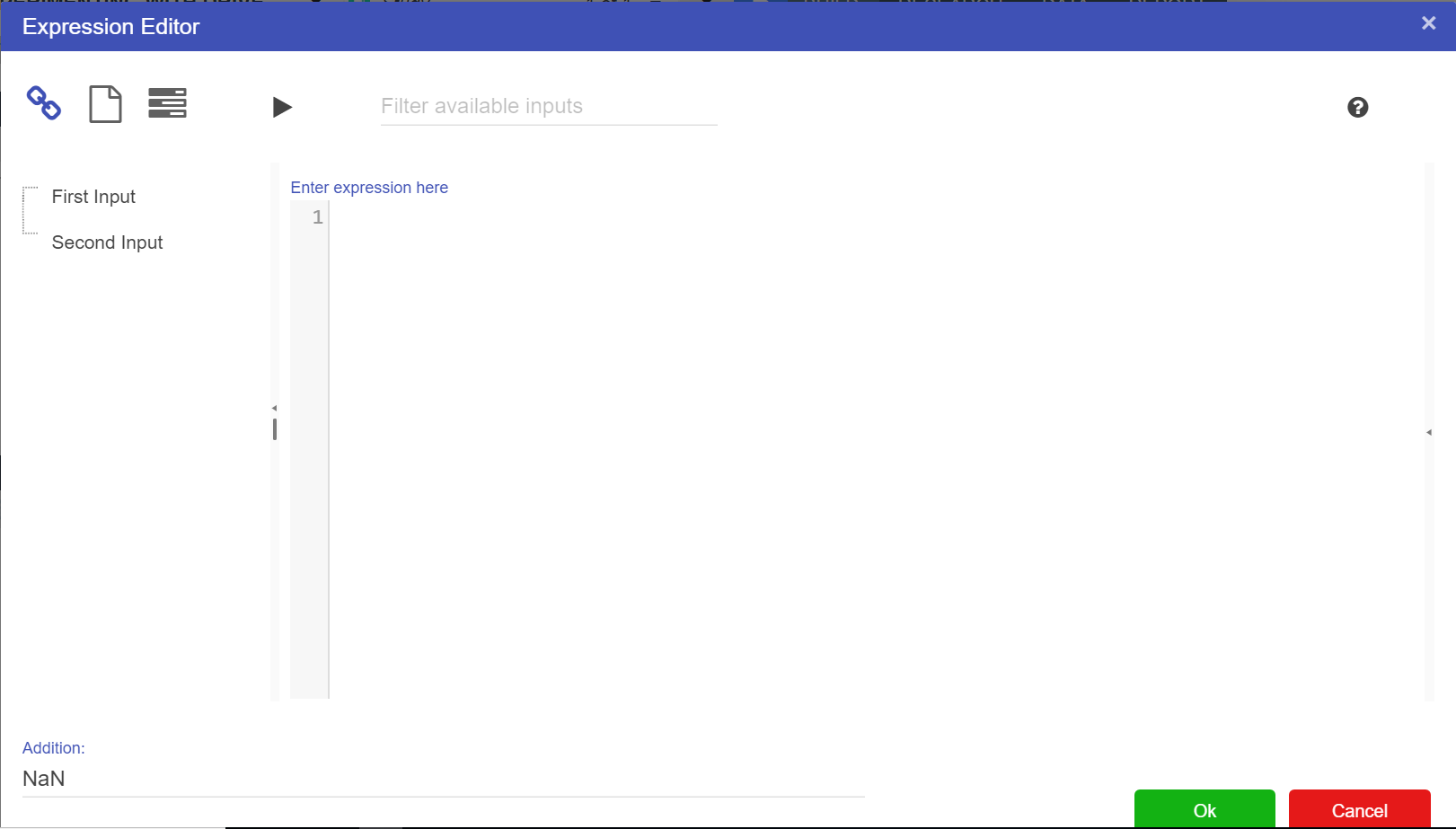

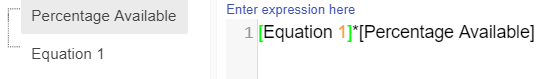

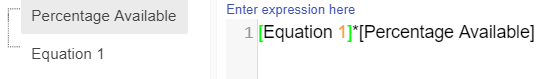

To get a result from the Calculation node we have to set up an expression. To set up an expression:

For this example we will add the two Numeric nodes together, but more complex expressions can be written in the expression editor.

For this example we will add the two Numeric nodes together, but more complex expressions can be written in the expression editor.Notice that the dotted lines between the nodes will now be solid as the values of those nodes are now in use.

References to nodes in the Expression Editor must surround the node name with [ ]

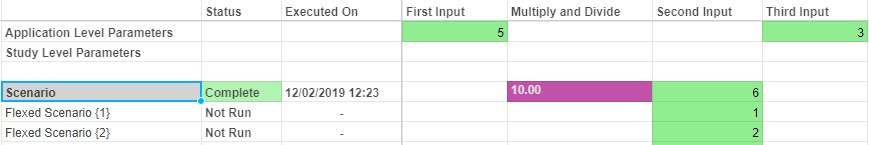

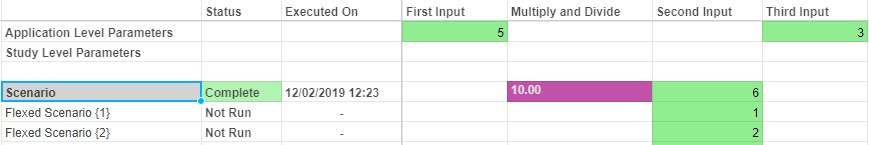

When Calculation nodes are given inputs, they become dependent on those nodes. So, if for example our First Input Node was to change from 1 to 5 the calculated value would become 7. And if the Second Input was to change from 2 to 6 then calculated value would become 11.

Calculation nodes do not have to remain the same in terms of expression. Expressions can change at any time. Should the expression change from Addition to subtraction all users would need to do would be to go into the expression editor and change the “+” to a “-”. This would result in the value going from 11 to 1. If we wanted to divide 5 by 6 we would put a “/” in between the First Input Node and the Second Input Node. And if we wanted to multiply the two nodes, we would adjust the symbol from a “/” to a “*”.

There is no limit on the amount of inputs, however some functions do have a limited number of inputs. They do have to be put into the expression though before their values will be used by the node.

Code comments can be used in calculations using // syntax.

// Calculate the average

avg([node])The below tables list the functions and operators available for Driver Models.

| Type | Operator | Notes |

|---|---|---|

| Arithmetic | + - * / | The standard arithmetic operators |

| Arithmetic | % | modulus, or remainder |

| Arithmetic | ^ ** | “to the power of”. x^y can be written as x**y or pow(x,y) |

| Logical Boolean | && || ! | And, Or, Not |

| Bitwise | & | xor | And, Or, Xor |

| Comparison | > < >= <= | Greater than, Less Than, Greater Than or Equal To, Less Than or Equal To |

| Comparison | = == | Is Equal To |

| Comparison | != | Is Not Equal To |

| Constants | e pi | Euler’s constant, approximately 2.7182818, Pi, approximately 3.14159265 |

| Function | Notes |

|---|---|

abs(Number) |

Returns the absolute value of the specified number |

acos(Number) |

Returns the angle whose cosine is the specified number |

asin(Number) |

Returns the angle whose sine is the specified number |

atan(Number) |

Returns the angle whose tangent is the specified number |

ceiling(Number) |

Returns the smallest integral value that is greater than or equal to the specified number |

cos(Angle) |

Returns the cosine of the given angle |

cosh(Angle) |

Returns the hyperbolic cosine of the specified angle |

exp(Number) |

Returns e raised to the specified power |

floor(Number) |

Returns the largest integer less than or equal to the specified number |

ln(Number) |

Returns the natural (base e) logarithm of a number |

log10(Number) |

Returns the natural (base 10) logarithm of a number |

log(Number, Base) |

Returns the logarithm of a number in a specified base |

pow(Number, Power) |

Returns a number raised to the specified power |

rand() |

Returns a random number between 0 and 1 |

rangepart(value, min[, max]) |

Returns that part of a value that lies between min and max) |

round(Number[, d]) |

Rounds the argument, (optionally to the nearest ’d’ decimal places) |

sin(Angle) |

Returns the sine of the given angle |

sinh(Angle) |

Returns the hyperbolic sine of the specified angle |

sqrt(Number) |

Returns the square root of the specified number |

tan(Angle) |

Returns the tangent of the given angle |

tanh(Angle) |

Returns the hyperbolic tangent of the specified angle |

trunc(Number) |

Rounds the specified number to the nearest integer towards zero |

| Function | Notes |

|---|---|

avg(A, B, C, ..n) |

Returns the average of the specified numbers |

max(A, B, C, ..n) |

Returns the maximum of the specified numbers |

min(A, B, C, ..n) |

Returns the minimum of the specified numbers |

sum(A, B, C, ..n) |

Returns the sum of the specified numbers |

| Function | Notes |

|---|---|

asset(AssetName, AttributeName) |

Returns the value of an attribute on an asset |

simulationruntime() |

Returns the simulation time (from the active scenario) |

periodCurrent() |

Gets the current 0 based period number |

periodAverage(N[,[start][,end][,error_value]) |

Returns the cumulative average of the argument over all the model iterations to date, or between start and end if set. Error value applies prior to values prior to the range, or if not set the period 0 value |

periodWeightedAverage(N, W[,[start][,end][,error_value]) |

Returns the cumulative weighed average of the argument over all the model iterations to date, or between start and end if set. Error value applies prior to values prior to the range, or if not set the period 0 value |

periodCount([N,[start][,end]) |

Returns the number of model iterations to date, or between start and end if set. |

periodFirst(N) |

Returns the value of the argument at the first model iteration. |

periodLast(N) |

Returns the value of the argument at the last model iteration. |

periodMax(N[,start][,end][,error_value]) |

Returns the high-water-mark of the argument over all the model iterations to date, or between start and end if set. Error value applies prior to values prior to the range, or if not set the period 0 value |

periodMin(N[,start][,end][,error_value]) |

Returns the low-water-mark of the argument over all the model iterations to date, or between start and end if set. Error value applies prior to values prior to the range, or if not set the period 0 value |

periodSum(N[,start][,end][,error_value]) |

Returns the cumulative sum of the argument over all the model iterations to date, or between start and end if set. Error value applies prior to values prior to the range, or if not set the period 0 value |

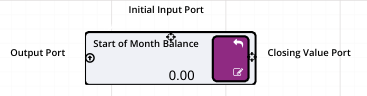

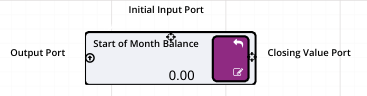

periodOpeningBalance(I[,A[,R]]) |

Returns the start-of-period balance, based on an Initial balance and accruing Additions and Removals on each subsequent period. |

periodClosingBalance(I[,A[,R]]) |

Returns the end-of-period balance, based on an Initial balance and accruing Additions and Removals on each period. |

periodPresentValue(d, N) |

Returns the present value of parameter N using the specified discount rate of d |

periodNPV(d, N) |

Returns the NPV of parameter N using the specified discount rate of d |

periodDelay(p, N[, default]) |

Returns the value of the argument N, but p periods later. Prior to that, it returns optional [default], else 0 |

periodVar(N) |

Returns the variance of the argument N |

relativePeriodSum([node], N) |

Returns the period sum of “node” over a rolling window into the past. The value of “N” determines the number of periods to go into the past including the current period. |

| Function | Notes |

|---|---|

periodAverageAll(N) |

Returns the average of the argument over all of the time periods |

periodCountAll() |

Returns the total number of periods |

periodLastAll(N) |

Returns the value of the argument in the last period |

periodMaxAll(N) |

Returns the maximum value of the argument over all of the time periods |

periodMinAll(N) |

Returns the minimum value of the argument over all of the time periods |

periodSumAll(N) |

Returns the sum value of the argument over all of the time periods |

periodWeightedAverageAll(N, W) |

Returns the weighted average of the argument N over all of the time periods, weighted by W |

| Function | Notes |

|---|---|

if(test,truepart,falsepart) |

If ’test’ is true, returns ’truepart’, else ‘falsepart’ |

iferror(calculation, errorresult) |

If ‘calculation’ has an error, return the error result, otherwise return the result of ‘calculation’ |

switch(value, condition, result, condition2, result2, condition3, result3, ...) |

Evaluates a value, then returns the result associated with the value |

| Function | Notes |

|---|---|

AverageIf(condition, N) |

Returns the cumulative average of the argument over all the model iterations to date if the condition is met |

CountIf(condition) |

Returns the number of model iterations to date if the condition is met |

SumIf(condition, N) |

Returns the cumulative sum of the argument over all the model iterations to date if the condition is met |

| Function | Notes |

|---|---|

execute(model name, period, input1Name, [input1Node], input2Name, [input2Node], ...) |

Executes an Akumen app (use period for the period number in driver models, 0 for Py/R). Returns 0 if successfull, otherwise -1. Note that this is not intended for large Py/R models, and could cause a performance impact. It is designed to be used as simple helper functions the driver model cannot perform. It is also limited to int/float inputs only |

executeoutput([executeNode], output1Name) |

Returns the result of the execute function, getting the value output1Name from the result. This is limited to simple int/float outputs only |

See here for information on setting up datasources, or here for further information on how to use datasources within Value Driver Models.

| Function | Notes |

|---|---|

datasource([datasource_node], value_column, aggregation_method, forwardfill, forwardfillstartingvalue, filter1column, filter1value, filter2column, filter2value, ...) |

Links a calculation to the datasource and applies an optional filter |

datasourceall([datasource_node], value_column, aggregation_method, forwardfill, forwardfillstartingvalue, filter1column, filter1value, filter2column, filter2value, ...) |

Links a calculation to the datasource and applies an optional filter |

Datasource specific filtering can be used across multiple columns in the datasource by adding in filter2column, filter2value, filter3column, filter3value etc.

datasourceall can also be used in place of datasource (using the same parameters). Instead of operating at a single time period, it operates across the entire dataset (honouring the load id and additional filter). This allows you to do things like get the stddev or mean of the entire dataset.

If only one row exists in the timeseries node, data can be referenced in the calculation formula by only providing the timeseries node name.

If multiple rows exist, the row name will need to be specified in the calculation formula as well. See grid below for calculation formula formats.

| Function | Notes |

|---|---|

timeseries([timeseries_node]) |

References time series node with name “timeseries_node” |

timeseries(timeseries([timeseries_node],"row_name")) |

References time series node with name “timeseries_node” and row label “row_name” |

timeseries([timeseries_node],[numeric_node_name]) |

References time series node with name “timeseries_node” and the row set to the 0-based value “numeric_node_name” |

| Function | Notes |

|---|---|

CurrentDayInMonth() |

The numerical day of the month of the current period |

CurrentDayInWeek() |

The numerical day of the week of the current period |

CurrentDayInYear() |

The numerical day of the year of the current period |

CurrentMonth() |

The numerical current month |

CurrentPeriodDateTimeUtc() |

The current period datetime in Excel format (ie 40000) |

CurrentYear() |

The numerical current year |

DaysInMonth() |

The numerical days in the month |

FirstPeriodDateTimeUtc() |

Period 0’s datetime in Excel format |

LastPeriodDateTimeUtc() |

The last period’s datetime in Excel format |

NumPeriods() |

The number of periods in the model |

| Function |

|---|

ContinuousUniform(lower, upper, [seed]) |

Lognormal(mu, signma, [seed]) |

Normal(mean, stddev, [seed]) |

Pert(min, mostlikely, max, [seed]) |

Triangular(lower, upper, mode, [seed]) |

The seed is optional, and applies at time period 0. This means that the random number generated for time period 0 will always be the same as long as the seed remains the same. The following periods reuse the random number generator meaning the pattern of numbers will be exactly the same for each execution. This guarantees consistency of results. The seed can also be applied using a scenario parameter.

Click here for more information on range checking nodes.

| Function | Notes |

|---|---|

rangecheck(sourcenode, lowlow, low, high, highhigh) |

Performs a check against of the source nodes value against the limits |

rangecheckresult(rangechecknode) |

Returns a value corresponding to which limit has been broken. -2 = lowlow, -1 = low, 0 = none, 1 = high, 2 = highhigh |

Node groups (nodegroup(), nodeungroup()) are used to collapse multiple nodes into a group that can be used in different areas.

For example, you can collapse a group of nodes and feed it into a Component. Once inside the component, the node group can be ungrouped to get the individual nodes within the component.

Node groups can also be processed into arrays. A node group will become a column in an array, with the name of the column being _NodeGroupName (note the underscore).

The underscore is there to distinguish between the node and the column (when array based functions are used),

with the names of the array rows being the inputs that make up the node group. Note that the names of the rows can be changed with the arraysetrownames() function.

There is no need for the nodeungroup() calc when using arrays as the node group is already ungrouped into the array.

Multiple node groups can be used to quickly build arrays, but they must all be the same length.

Component functions allow groups of nodes to be created as reusable components, rather than copying and pasting the nodes. These are similar to functions in normal programming.

See image below for an example.

In this example, a reusable component has been created between the Component Start and Component End nodes. The start and end nodes define the boundary of the component.

The Component Start node can only receive incoming data from a nodegroup node, which combines the incoming nodes into a single feed.

Once inside the component, the nodeungroup() function allows the modeller to split the node group into individual entries.

The overall component will run once per node group. So in this example, the component will run twice.

The nodes feeding into the component end can be retrieved using a componentresult() function.

| Dynamic | Function | Notes |

|---|---|---|

| x | componentstart() |

This is the main entry point to the component. Only nodegroups can be connected to the component start. It is possible to add another component start within an existing component but this may create unforseen issues and errors and therefore only one componentstart/component end should be used.This only supports dynamically linked nodes. It is not necessary to type in the names of the node group node. |

| x | componentend([componentstart]) |

The componentend calculation indicates the end point of the component. Every input to the component runs once between the componentstart and componentend. This only supports dynamically linked nodes. Only the componentstart node is required. |

componentresult([componentend], run_index, "calculated_node") |

This calculation fetches the result for the selected run index. The run index is the index of the node group that is passed into component start, as they are ordered on the driver model canvas - first by the y-axis, then by the x-axis.If there are multiple calculated values to fetch, then the calculated node can be optionally added. If the calculated node is not included in the expression, it defaults to the first calculated node connected to componentend.The run_index can be a fixed number referencing the index (x, y ordered) of the run, or the name of the node group that feeds into the componentstart. Note that the name of the node group should be double quoted, rather than enclosed in square brackets. | |

| x | nodegroup() |

Groups a set of nodes for use in calculations, such as components or arrays |

| x | nodeungroup(index, [componentstart]) |

Gets the node based on the index for use in calculations. |

Python nodes allow individual driver model nodes to include Python code directly in the node calculation as shown in the example below.

The number of inputs being linked into the Python (calculation) node must exactly match the number of parameters defined by the Python function, this is not dynamic.

Like dynamic nodes, however, the order of the nodes fed in matters. This is determined by positions of the nodes on the driver model canvas - first by the y-axis, then by the x-axis.

Also the names in the def akumen(…) or def runonce(…) function are NOT the names of the nodes. They are instead Python variables and therefore cannot have special characters or spaces in the names.

The value of the incoming node will come through as the variable’s value.

To create a Python function, the first line must be either of the following:

def akumen(input1, input2):def runonce(input1, input2):The syntax highlighting will automatically convert to Python highlighting. The akumen() function will run for every time period and is limited to a 1 second runtime.

The runonce() function will run for time period 0 only and cache the result. This result is then passed into every other time period.

It is limited to a 5 second runtime and can be used for data preparation tasks (e.g. retrieving output from a datasource, cleaning the data and then passing the output to a datasource calculation.

All Python nodes need a return statement which is used to output the results of the Python code to other nodes.

If there is a single scalar value in the return, it can be referenced directly within any other calculation.

There is also the option to return a dictionary of values.

These cannot be referenced directly and require the pythonresult() function (e.g. pythonresult([python_node], "first")) which will allow access to the dictionary values.

This allows the Python node to return multiple values in one function and is more efficient than using multiple Python nodes with similar code, as the code is only executed once.

There are some limitations with Python nodes:

In addition to the list associated above, a subset of Pandas functions are available as aliases. The full Pandas module cannot be imported directly due to security concerns with some of the IO functionality Pandas provides.

The following Pandas functionality is available as aliases (note they are the exact pandas functions, only prefixed with pd_):

The following helpers provide additional quality of life improvements to the Python node:

array_helper - provides helper functions for dealing with arrays out of the calc engine:

| Function | Notes |

|---|---|

array_helper.convert_dataframe_to_array(datasource, columns, category_column) |

Converts a dataframe object (from a datasource) into an array. The value set for of “columns” is the list of columns to use, and “category_column” is the optional column to use as the category. If the first column is not a number, it automatically becomes the category column. If there is no category column in the list of columns, the index of the dataframe is used as the name. |

array_helper.convert_array_to_dataframe(array) |

Converts an array into a dataframe. |

period_helper - provides helper functions for dealing with periods in the calc engine:

| Function | Notes |

|---|---|

period_helper.get_current_period() |

Gets the period that the calc engine is currently on |

period_helper.get_reporting_period() |

Gets the reporting period that is configured |

period_helper.get_num_periods() |

Gets the total number of detected periods |

period_helper.get_start_date() |

Gets the actual start date |

period_helper.get_date_from_period(period) |

Gets the date that corresponds to the selected period |

period_helper.get_period_from_date(date) |

Gets the period the period that corresponds to the date |

period_helper.convert_datetime_to_excel_serial_date(date) |

Converts the date to an excel serial date |

period_helper.convert_period_to_excel_serial_date(period) |

Converts the period to an excel date |

If preferred, alternative aliases can be used to reference the helper functions:

arrayhelper = ah (eg ah.convert_dataframe_to_array)periodhelper = ph (eg ph.get_current_period())The benefits to using Python nodes are:

pythonresult([pythonnode]).

Note there are no parameters specified. If a dictionary is returned, the first item in the dictionary is returned.When returning a dictionary, the Python node only executes once for a time period, and the result dictionary is cached. This makes it efficient to return multiple values from the Python node as a dictionary, rather than using multiple Python nodes.

Print statements can be specified in Python code (e.g. outputting the contents of variables, data frames, etc). While the print output won’t appear in the user interface when using auto-evaluation, it will appear in the normal Akumen log after performing a model execution.

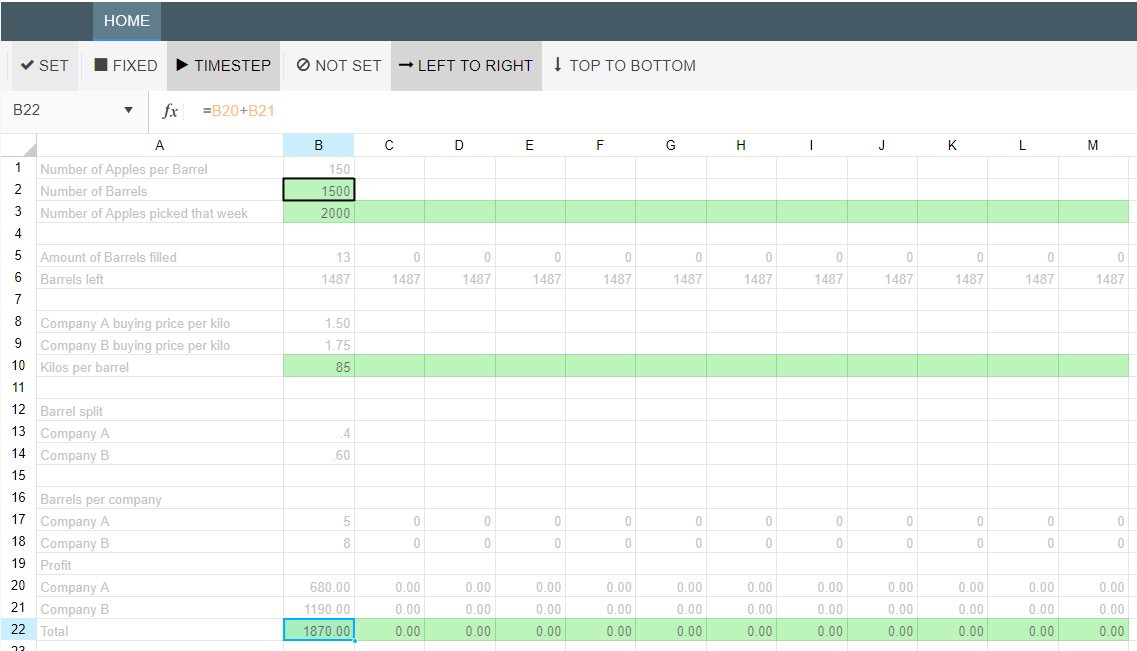

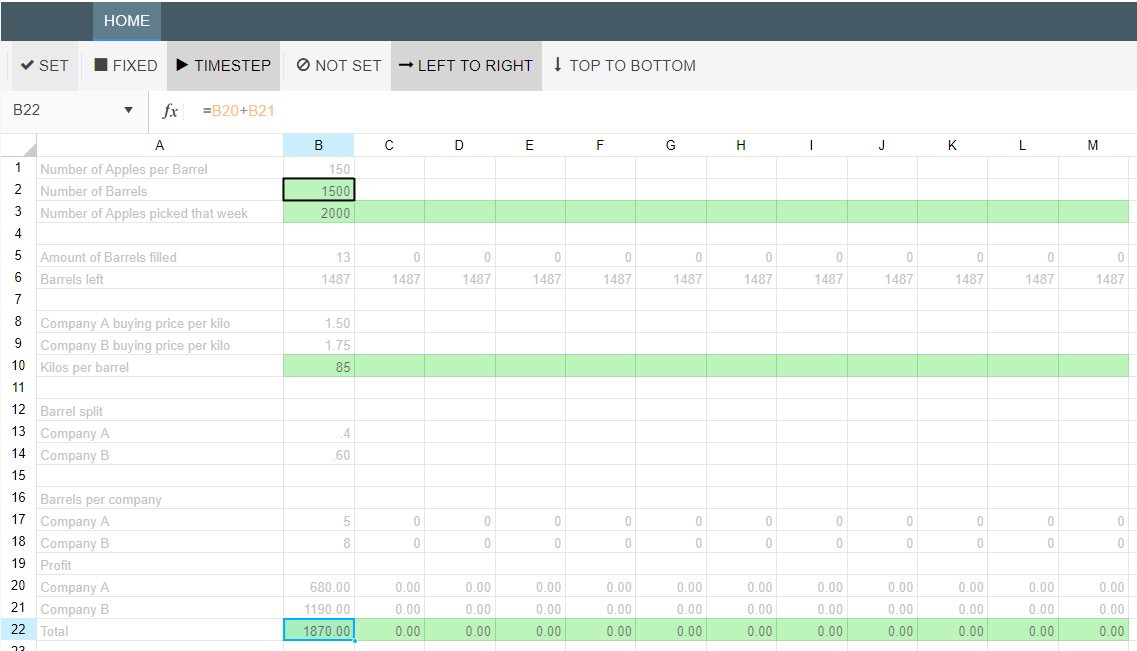

Arrays are a ground breaking new piece of functionality for driver models. They can be built in a number of ways, including dynamically, from Python output as well as from datasources. Once data has been put into arrays, there are a number of array calculations which can be used to perform bulk operations, such as aggregations like sums and averages or sorting. In addition, groups of nodes can be added to arrays to make a single row of values.

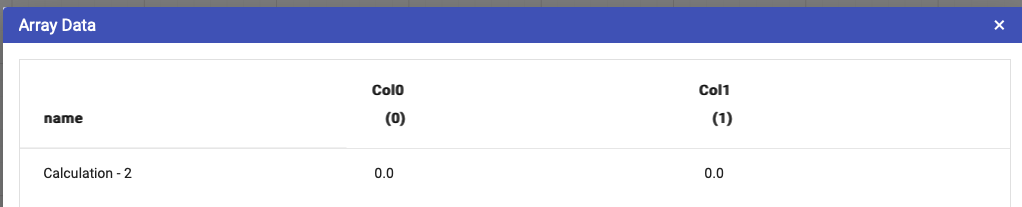

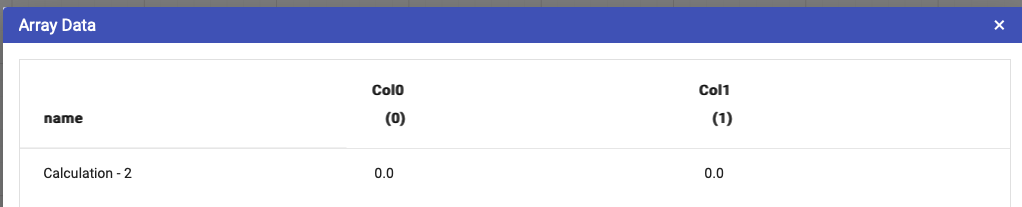

Note that clicking on the table icon at the top right of the array node pops up a window showing the individual array values.

Arrays can be built in a number of different ways. This can be:

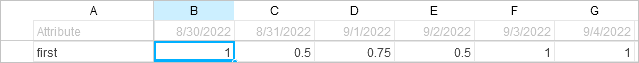

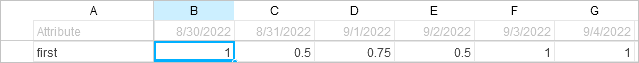

array() function (e.g. array (1, 2, 3, 4));arrayrow() function to feed data into the array to make individual rows; ornodegroup() to feed into the array to make individual columns.There are three basic modes of creating an array row:

Fixed is where values are entered directly into the node such as arrayrow(’name’,1,2,3,4,5). If a name is not provided, the name of the node will be used for the entire row. The name must have at least one alpha character.

Specified is where nodes that form the array row are entered in the column order required (e.g. arrayrow('name',[node2], [node3], [node4], [node5], [node6])).

If a name is not provided, the name of the node will be used for the row. The name must have at least one alpha character

Note that Fixed and specified entries can be intermixed.

Dynamic does not list any values or nodes within the function but rather uses nodes that are linked to the arrayrow() node (e.g. arrayrow('name')).

The order of the elements within the row is determined by the x and y locations of the linked source nodes on the driver model canvas (top to bottom, left to right).

If a name is not provided, the name of the node will be used for the row. The name must have at least one alpha character.

See below for an example of a simple arrayrow feeding into an array.

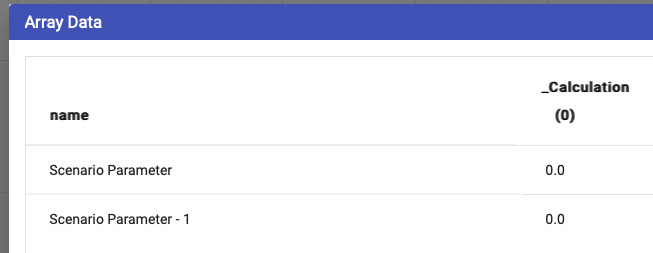

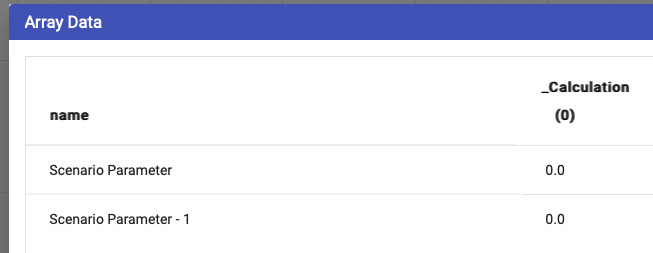

The node table contains the following data:

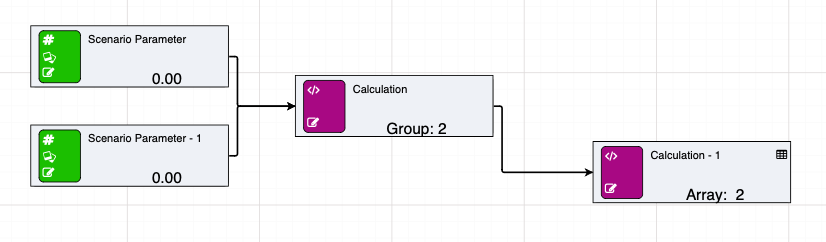

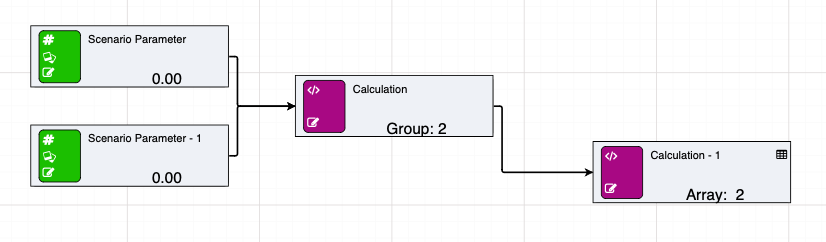

Node groups can also be used to build arrays. They are similar to arrayrows, but instead of building a row with multiple columns, the nodegroup becomes the column within the array. The row names become the node names from the first node group.

See below for an example of a simple nodegroup feeding into an array:

The node table contains the following data:

When passing a nodegroup into an array (or even individual input values), the node name is used as the column name. To distinguish between a node and a value, the calcengine prefixes an underscore (_) to the name of the column. This is done to allow calculated columns to identify the difference between a column and a node of the same name.

See table below for array functions.

| Dynamic | Function | Notes |

|---|---|---|

| Yes | array([input_1], [input_2], … [input_n]) |

Builds an array from either the supplied inputs, or dynamically from a datasource, another array (extending) or a Python node. Note if building from a Python node, then that must be the only input into the array. When building from a datasource, the datasource must be the first input (you cannot use dynamic) and the rest of the parameters are the names of the columns to bring in. Multiple columns will create rows of tuples see arraytuple(). Datasources no longer must have a date column specified. |

| Yes | arrayrow([input_1], [input_2], … [input_n]) |

Creates an array row, which is another way of saying a list of inputs. Array rows can be added to arrays, effectively forming a two dimension array. So for example, and array might be [2, 6, 3, 5], but an array of rows might be [(2, 4), (5, 6), (1, 2)]. This is useful when grouping like sets of data, such as height and weight for a person, or tonnes, iron, alumina, silica for a single product. Note that each row in the array must have the same number of columns. |

| No | arrayaverage([arraynode], column_index_or_name) |

Gets the average of the array, optionally providing the index of the column to aggregate. Not specifying the index assumes index = 0 |

| No | arraycount([arraynode]) |

Counts the number of items in the array |

| No | arraymatch([arraynode], [matchvalue], column_index_or_name, tolerance) |

Gets the index of the item matching the value. Setting approximate to true gets a close enough value, using the tolerance. When approximate and tolerance is set, the calculation used is: np.where(np.isclose(array_matches, match, atol=tolerance_value)) When just approximate is set, the calculation used is: np.where(np.isclose(array_matches, match)) When an exact match is used, the calculation used is: np.where(array_matches == match) |

| No | arrayfilter([arraynode], test_column, test, test_value) |

Filters an array (returning a new array) of the filtered items. This differs from match in that match will return a single index value, whereas filter returns a new array that is filtered based on a numeric condition. The syntax of the filter is:

|

| No | arraymin([arraynode], column_index_or_name) |

Gets the minimum value. Not specifying a column_index_or_name defaults to index = 0 |

| No | arraymax([arraynode], column_index_or_name) |

Gets the maximum value. Not specifying a column_index_or_name defaults to column_index_or_name = 0 |

| No | arraypercentile([arraynode], percentile, column_index_or_name) |

Gets the percentile of the array. This uses the calculation: np.percentile(array, self._percentile, method=linear, axis=0)Not specifying a column_index_or_name defaults to column_index_or_name = 0 |

| No | arrayslice([arraynode], start_index, end_index) |

Slices an array from start_index to either the optional end_index, or the length of the array. If the start_index or end_index references a row name, rather than a numeric value, it will lookup the index of the row name, and use that as the slice index. |

| No | arrayslicebycolumn([arraynode], start_index, end_index) |

Slices an array from start_index to either the optional end_index, or the number of columns within the array. If the start_index or end_index references a column name, rather than a numeric value, it will lookup the index of the column name and use that as the slice index.. |

| No | arraysort([arraynode], column_index_or_name, direction) |

Sorts an array. Not specifying a column_index_or_name defaults to column_index_or_name = 0 |

| No | arraysum([arraynode], column_index_or_name) |

Gets the sum of an Array. Not specifying a column_index_or_name defaults to column_index_or_name = 0 |

| No | arrayvalue([arraynode], row_index_or_name, column_index_or_name) |

Gets an individual value based on the row index or name of the row. Not specifying a column_index_or_name defaults to column_index_or_name = 0 |

| No | arrayweightedaverage([arraynode], [weightnode], value_index) |

Calculates the weighted average of an array. Note the weight node can be another array (of the same length), or the row index to use as the weights array (in the same array). The weighted average is calculated for every row, the value_index specifies the row index value to retrieve |

| No | arraycalculatedcolumn([arraynode], "calcname_1", "calculation_1", "calcname_2", "calculation_2") |

Creates a calculated column for the array. The calculation itself cannot be complicated, nor use Akumen specific functionality (eg periods etc). The calc engine will reject calculations like this. In every other way, the syntax is very similar to the Akumen formula language, with a couple of exceptions.

|

| No | arraysetcolumnnames("name")OR arraysetcolumnnames("col1", "col2") |

Feed into the array to set the names of the columns. If only one input is detected, the array will dynamically allocate the column names using, in the example snippet to the left, name 0, name, 1, name, 2 etc. |

| No | arraysetrownames("name")OR arraysetrownames("row1", "row2") |

Feed into the array to set the names of the rows. If only one input is detected, the array will dynamically allocate the row names using, in the example snippet to the left, name 0, name, 1, name 2, etc. |

| No | arrayfromcsv(names, first, secondrow, 23, 24row_2, 33, 44) |

Allows an array to be built from text pasted into the expression editor. Note that this is not designed for huge arrays. YOU WILL RUN INTO PERFORMANCE ISSUES using this piece of functionality. It is designed to quickly spin up a demo, or for small inputs to perform operations on. Also note that the data entered into the calculation node’s formula window is interpreted as a CSV and thus needs to be separated on different lines as shown in the example to the left. |

| No | arrayconcat([first_array], [second_array]) |

Joins two arrays together by column. The row names will be the row names of the first array (and they must have an equal number of rows). The columns of the second array will be appended to the columns of the first array, and a new array created. To append the rows of one array to another, simply add the two arrays to an array() calc. The number of columns must match in this case. |

| No | arraydeletecolumn([arraynode], first_column, second_column) |

Deletes one or more columns from the array, returning new array. |

These functions are designed specifically to handle supply chains.

| Function | Notes |

|---|---|

storage([incoming], [outgoing], [opening]) |

Creates a storage node that, for each time period, both adds (incoming) and removes (outgoing) from the storage. The opening balance at time t = 0 is specified using opening |

storageavailable([storagenode]) |

The storage available for the time period (i.e. the opening value + the incoming value) |

storageclosing([storagenode]) |

The closing value of the time period (i.e. the available value - the outgoing value) |

storageopening([storagenode]) |

The opening value for a time period (i.e. the same as yesterday’s closing value) |

Arrays can be used with storage nodes. The limitation is that the arrays must be the same dimensions. The names of the array rows must match (e.g. row 0 is Product 1 and Product 1, row 1 is Product 2 and Product 2… etc) in both arrays, and the names of the first column must match (e.g. Tonnes and Tonnes).

Arrays can be used in combination with storage nodes to keep track of multiple products and their descriptive quantities, such as tonnes and grade (i.e. keeping track of analytes). Storage nodes expect nodes as input, meaning inputs cannot be array values. Therefore incoming, outgoing, and opening must be separate arrays (and therefore nodes).

The following image shows an example of an opening values array which contains two products each with two analytes:

In this example, the calculation node contains multiple arrayrow() entries with each corresponding to a product and its quantities:

This can be repeated for both incoming and outgoing arrays. All three arrays can then be input into the storage node for calculations to occur.

The below calculations are specifically used to handle financial calculations.

| Function | Notes |

|---|---|

irr([values_node], start, end) |

Calculates the IRR for the given cashflow. Optionally provide the start/end to calculate the IRR for a range with in the model. Uses numpy-financial.irr internally |

irrall([values_node]) |

Calculates the IRR for all time periods, returning the same result at each period |

npv([rate_node], [values_node], start, end) |

Calculates the NPV of a given cashflow. Optionally provide the start/end to calculate the NPV for a range within the model. Uses numpy-financial.npv internally |

npvall([rate_node], [values_node]) |

Calculates the NPV for all time periods, returning the same result at each period |

pv([rate_node], [payment_node], start, end) |

Calculates the present value. Optionally provide the start/end to calculate the PV for a range within the model. Uses numpy-financial.pv internally |

pvall([rate_node], [payment_node]) |

Calculates the PV for all time periods, returning the same result at each period |

The rate node must be a single value such as a numeric node or fixed value. An error will be thrown if a timeseries or calculation node is used where the value differs between time periods.

NPV and PV behaves slightly differently to Excel. The calculation in the calc engine actually takes the NPV/PV from t = 1, then adds the value from t = 0 automatically. In Excel, this needs to be done manually

Listed below are additional calculations that have been added. Some overlap may exist with calculation engine v3 functions, but the entire set of calculations are documented here for completeness.

| Function | Notes |

|---|---|

isclose(first, second, [rtol], [atol]) |

This is based on the math function “isclose()”. Both the rtol and atol arguments are optional and default to 1e-09 for rtol and 0 for atol.Uses https://docs.python.org/3/library/math.html#math.isclose internally. |

| Function | Notes |

|---|---|

currentdayinmonth() |

The day of the month in the current period |

currentdayinweek() |

The current day of the week, where Monday == 1 and Sunday == 7 in the current period |

currentdayinyear() |

The current day of the year in the current reporting period |

currentmonth() |

The current month of the currently selected period |

currentperioddatetimeutc() |

The current period in UTC as an Excel based integer |

firstperioddatetimeutc() |

Similar to the above, but for the first period only |

lastperioddatetimeutc() |

Similar to the above, but for the last period only |

currentyear() |

The year of the currently selected reporting period |

datetimeexcelformat() |

Similar to currentperioddatetimeutc(), but named correctly for what it returns. This uses the openpyxl package to calculate the correct date |

daysinmonth() |

The days in the month of the current period |

daysinyear() |

The days in the year of the current period |

islastdayofmonth() |

Returns 1 if it’s the last day of the month for the current period, otherwise 0 |

islastdayofyear() |

Returns 1 if it’s the last day of the year for the current period, otherwise 0 |

currentfiscalquarter([start_month], [start_day], [start_year]) |

The current fiscal quarter. This defaults to Australian, but can be overridden by providing the start_month, start_day and start_year |

currentfiscalyear([start_month], [start_day], [start_year]) |

The current fiscal year. This defaults to Australian, but can be overridden by providing the start_month, start_day and start_year |

daysinfiscalyear([start_month], [start_day], [start_year]) |

The days in the current fiscal year. This defaults to Australian, but can be overridden by providing the start_month, start_day and start_year |

isfirstdayoffiscalyear([start_month], [start_day], [start_year]) |

Returns 1 if it’s the first day of the fiscal year, otherwise 0. This defaults to Australian, but can be overridden by providing the start_month, start_day and start_year |

islastdayoffiscalyear([start_month], [start_day], [start_year]) |

Returns 0 if it’s the first day of the fiscal year, otherwise 0. This defaults to Australian, but can be overridden by providing the start_month, start_day and start_year |

numperiods() |

The total number of periods. |

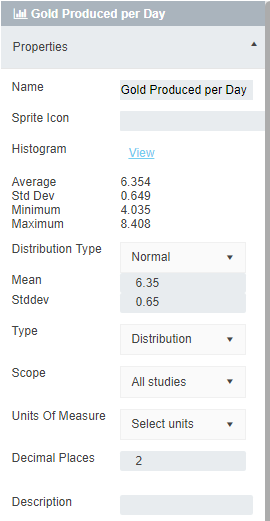

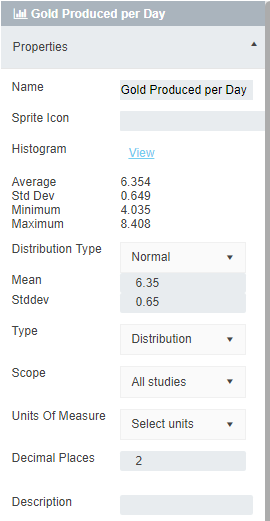

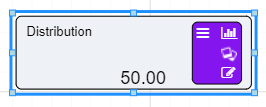

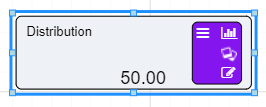

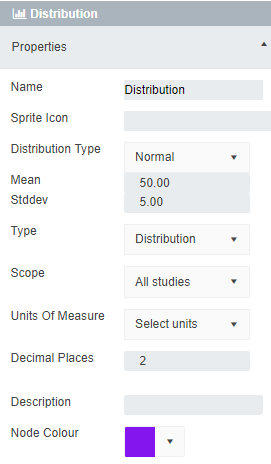

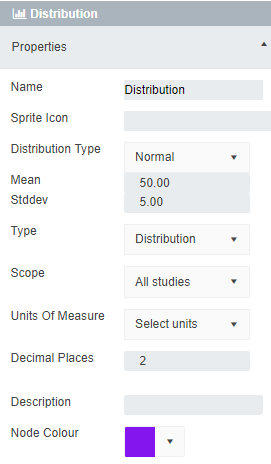

With the deprecation of Monte Carlo, it is important to highlight random functions and their abilities within calculation engine v4. There is no longer a distribution node. Instead, random nodes are created using standard calculations. They behave in a similar way to the old distribution nodes, however, they also have the option of returning an array of the distribution values, rather than just the single distribution value. In this case, a numeric seed must be passed in to the calculation either manually or through the use of a numeric node.

Seeds provide the ability to consistently return the same results for a particular random value. There are two ways of setting seeds. The first is creating a numeric value called SEED (note the case) which will be set at the global level and apply to all random numbers used by the model. The second is to pass the seed directly into the random calculation. Seeds do need to change per time period, otherwise each time period will have the same value. A calculation is applied to ensure that the seed is different per time period. Each time the model is run, the random number will be the same for each time period.

When a sample size is passed into the random calculation, rather than returning a single value, the calculation will return an array. The array will have one column, with an entry for each iteration returned by the underlying random number calculator. This is a very efficient way of returning a distribution of values, as it is not looping through multiple iterations like in calculation engine v3.

| Function | Notes |

|---|---|

normal(mean, sigma, [seed], [size]) |

Returns either a single value from a normal distribution, or an array of values |

continuousuniform(lower, upper, [seed], [size]) |

Returns either a single value from a continuous uniform distribution, or an array of values |

lognormal(mean, sigma, [seed], [size]) |

Returns either a single value from a lognormal distribution, or an array of values |

pert(minimum, mostlikely, maximum, [seed], [size]) |

Returns either a single value from a pert distribution, or an array of values |

triangular(lower, upper, mode, [seed], [size]) |

Returns either a single value from a triangular distribution, or an array of values |

weibull(a, [seed], [size]) |

Returns either a single value from a weibull distribution, or an array of values |

Using arrays with random calculations allows for the distribution of historical data to be approximated and passed into further calculations. A simple example being an approximation of the distribution of truck load sizes stemming from an excavator, and truck cycle times, to estimate the total tonnage moved within an operating day. See below for a basic example.

In this example, an array of excavator values takes the role of historical data from which the distribution is approximated. Ideally this would be a data source node with the customer’s real records. From this data, you can calculate the mean and the variance for input into the “Truck Loads - Distribution” node. In this example, the distribution is normal, but the parameters will be different depending on what distribution is being approximated. Noise can be added to both the mean and variance to increase the randomness and provide avenues for further testing.

The size parameter will determine the length of the array that is returned. This parameter can also be a node. Here you are returning an array of length 20.

Similarly, this process can be repeated to approximate the truck cycle times.

Combined, the resulting arrays can be used to determine total tonnage moved within a specific time period by calculating the number of possible trips and summing the load values up to that point.

Similarly, random calculations can be used to generate single values from the distribution by omitting the [size] parameter.

If you wish to get a range of values, you can flex [seed] across scenarios for testing purposes.

The SEED node will be automatically referenced by any random calculation within the scenario/model. This means the SEED node can be omitted from the function parameters.

Random calculations will automatically detect the seed node, as long as the node name is SEED.

Subiterations provide a way of performing iterations for a group of nodes and then to return the output of those iterations.

They are structured similarly to components in that they have clearly defined boundaries using subiterationstart and subiterationend nodes.

The only difference with components is that it is not necessary to pass in nodegroups.

| Function | Notes |

|---|---|

subiterationstart(iterations) |

Defines the starting point of the sub iteration. The iterations variable is the number of iterations to run the group of nodes within the start and end boundaries |

subiterationinput([subiteration_start_node], "input_node") |

Gets the input node that has been fed into the subiterationstart() node for use within the subiteration loop. |

subiterationend([subiteration_start_node], [exit condition]) |

Defines the end point of the subiteration. The exit condition is optional and provides the ability to exit the subiteration early (e.g. if an iteration converges to a result). This can be used with the new isclose() function rather than == as an exit condition may be close enough to exit (i.e. the 40th decimal point might be different.) |

subiterationresult([subiteration_end_node], node_index_or_name) |

Gets the result feeding into the subiterationend() node. This can be by index (ordered by x and y coordinates) or by name. |

subiterationarrayresult([subiteration_end_node], node_index_or_name) |

This is the same as subiterationresult(), however rather than returning the last iterated value, this will return an array of the iterated values. |

subiterationcurrent() |

Returns the current iteration within an iteration loop |

The user interface in Akumen cannot display iterations as node values.

Therefore, it only displays the values as if there were no iterations (similar to components that display only the first nodegroup).

Stepping through time using the time slider DOES NOT step through the iterations, it steps through the time periods as if there were no iterations at all.

The iterations occur under the hood and are only exposed by the suberationeresult() calculation.

PriorValue nodes are used widely in Akumen to be able to fetch the value of a calculation from the previous time period.

The priovalue calculation has been improved to detect when it is within an iteration, and can be used to fetch the results of a calculation from a previous iteration.

Used in combination with isclose(), you can effectively check the last value compared to the current value to find convergence based on a boolean condition you define within the subiterationend() node.

The above diagram is a simple example of a subiteration, with two inputs going into the subiteration. The start node shows that 20 iterations have been defined. The end node shows that there was an exit condition defined, and that it exited after 3 iterations. The two result nodes fetch the same calculated value, one returns the last value, the second returns an array of the 3 iteration values for the same result node.

The DatasourceArray formula allows users to extract rows in a Datasource filtered by date for each model period. This allows users to operate on categorical data, where multiple line items appear per date in a datasource, or to perform custom aggregation on data (for example, if the Datasource data is daily but the model is monthly).

The DatasourceArray formula returns an array with the relevant rows, returning an array with zero rows if no data appears in the selected period.

Note that only numeric columns will be returned, as arrays do not support non-numeric data.

| Function | Notes |

|---|---|

datasourcearray([Datasource Node], 'ColumnName') |

Basic Syntax of datasourcearray function. Will return an array with default row names (Row 0, Row 1, etc.). |

datasourcearray([Datasource Node], 'columns:ColumnName1, ColumnName2') |

Select multiple columns using a comma separated list. Will return an array with default row names (Row 0, Row 1, etc.). |

datasourcearray([Datasource Node], 'columns{;}:ColumnName1; ColumnName2') |

Specify a custom separator if there are commas in the column names. Will return an array with default row names (Row 0, Row 1, etc.). |

datasourcearray([Datasource Node], 'columns:all') |

Select all (numeric) columns. Will return an array with default row names (Row 0, Row 1, etc.). |

datasourcearray([Datasource Node], 'ColumnName', 'RowNameColumn') |

Specify a column to use for row names. Note that if the string in the third argument is not a column name, the string will be used as a prefix for the row names (e.g. datasourcearray([Datasource Node], 'ColumnName', 'CustomRowName')) which will result in the following row names:CustomRowName 0, CustomRowName 1, etc. |

datasourcearray([Datasource Node], 'ColumnName', 'RowNameColumn', 'FilterColumn1', 'FilterValue1', 'FilterColumn2', 'FilterValue2', etc.) |

Filter the returned rows in the same way as other Datasource calls. |

Range check calculations are a special type of calculation that allows the user to validate a node is within hard or soft limits. The node changes colour depending on it’s condition at each time period, and logs warnings in the error log if outside the limits.

The log is accessible by right clicking a scenario and clicking on the view log button

In addition to colouring the nodes, there is another type of calculation called rangecheckresult([rangecheck_node]) that returns

-2 => below lowlow

-1 => below low

0 => ok

1 => above high

2 => above highhigh

Although Excel supports Circular References and there are times when it must be used, Circular References in Excel are not recommended due to the possibility of errors throughout the spreadsheet. Under the hood, Excel basically iterates through a number of times, and when it gets to the end of the iterations, that is your solution. Akumen’s Driver Models do not support circular references, except for Prior Value Nodes.

Prior Value Nodes are used to handle Circular References in Value Driver Models.

Prior Value Nodes can also be used to, in effect, create a recirculation, as shown in the example below.

In this case we are not relying on iterations to give us the correct result, we are recirculating the charity back into the profit in the next time period.

At time t = 0, we have no profit, move the timeslider to t = 1 and we have the initial profit as in the example above using the optimiser. At time t = 2, the profit has circulated through and gives us our final after tax profit.

The calculations used in this are:

| Node | Description |

|---|---|

| Tax | A global node, used only as the tax rate for the profit calculations |

| Revenue | A fixed input node (though we could flex this through scenarios to do a what if on profit) |

| Other Expenses | Again, a fixed input node |

| Before Tax Profit | [Revenue] - [Other Expenses] - [Charity] |

| Profit Closing Balance | This is the prior value node - note there is no initialisation value for this for t = 0 |

| After Tax Profit | [Profit Closing Balance] * (1 - [Tax]) |

| Charity | [After Tax Profit] * 0.1 |

Note that in this example, Charity is calculated based on After Tax Profit, and it’s value is then used in Before Tax Profit.

Driver model nodes have the ability to execute other apps within Akumen, and retrieve the results. There are two basic use cases for this. The first is Driver Models can be componentised, that is, smaller well tested, purpose built driver models can be constructed that link together in a larger driver model.

Secondly, there are cases where driver models do not have the capabilities of a full language, such as Python. A Python application can be created, where the driver model can execute it, pass in values from the driver model, execute the Py (or R) model, and return the results back to the driver model.

There is a performance consideration for using Py/R apps within a driver model. Akumen still has to queue up the request to execute the Py/R app, meaning there is a short delay before the results are retrieved. A larger Py model can render the driver model almost unusable if the driver model must wait for the results to come back.

execute(app name, period, input1Name, [input1Node], ...)

where:

| Parameter | Description |

|---|---|

app name |

The name of the application to execute |

period |

set to 0 for Py/R models, as it is not used. For Driver Models, set to the period you wish to fetch |

input1Name |

The name of the input in the other app to execute |

input1Node |

The node who’s value will be sent to the application to execute under input1Name |

Multiple inputs can be specified, using the inputName, [inputNode] syntax

Executions cannot be chained together. For example, a driver model cannot call itself, and a driver model calling another driver model that also has executions will create an error.

The results from an execution can be fetched by creating a new node, and fetching the results using executeoutput.

executeoutput([executenode], outputName)

The executeNode is the node that is running the execute (as above), and outputName is the name of the output to fetch. An error will be thrown if the output does not exist. Multiple outputs can be fetched using multiple output nodes. Note that the execute caches the results, ie it will not re-execute every time an output is fetched, it will execute once, then the results will be cached for each output node.

This page describes the process for migrating a model from Calc Engine v3 (referred to as CEv3 from now on) to Calc Engine v4 (referred to as CEv4 from now on). For most cases this should be a relatively straightforward task, however there are some exceptions to this, for example where features in CEv3 are no longer supported in CEv4, or the syntax has changed.

CEv3 is still available and can be kept as the engine running your CEv3 models, so migrating is optional.

All existing driver models will continue to use CEv3. Any new driver models will use CEv4.

It is recommended to do the migration in a clone of your existing model so you have a copy on hand to revert back to if required.

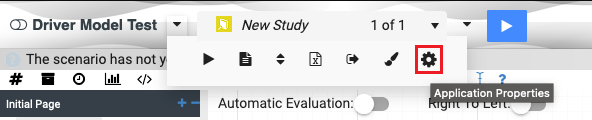

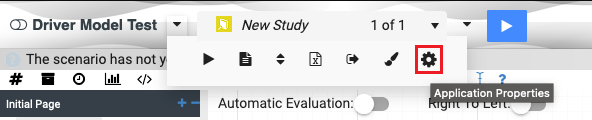

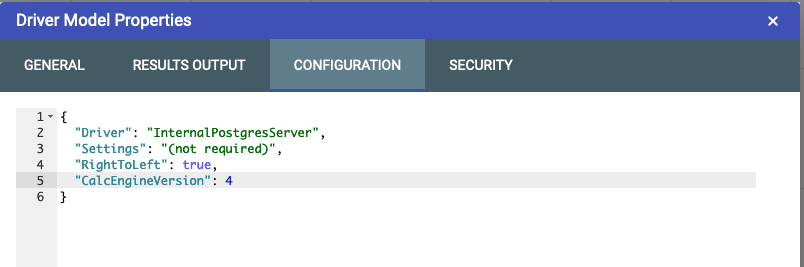

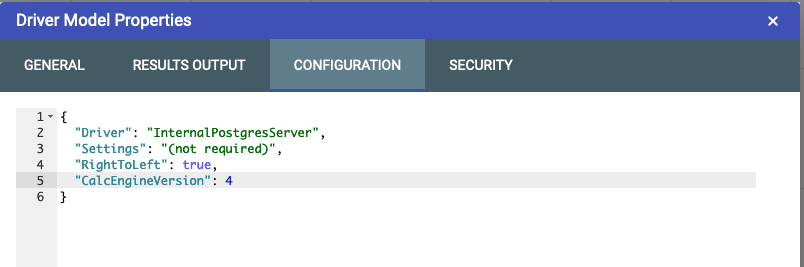

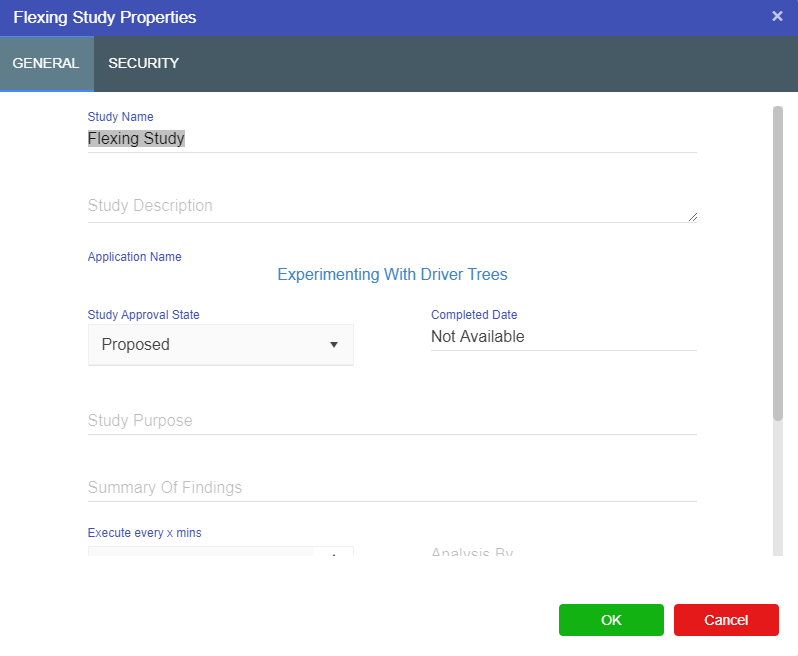

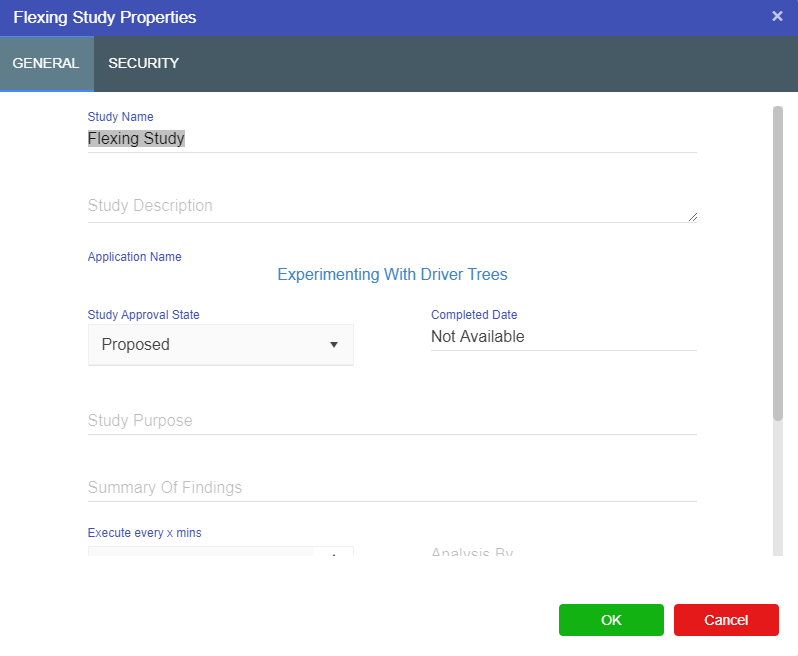

To change the calc engine version, open the Application Properties window.

This can be done by right-clicking the model on the Application Manager and selecting Properties or by selecting the model properties gear from within the model as shown below.

In the Properties window, select the Configuration tab:

Add the following line to the configuration as per the example above, also ensuring that a comma has been added to the end of the previous line:

"CalcEngineVersion": 4To revert back to CEv3, change the 4 in the line above to a 3 or alternatively remove the “CalcEngineVersion” line.

Some node types have been removed in CEv4.

When an unsupported node from CEv3 is used, and converted to CEv4 the UI will display an error indicating the node type is no longer supported:

Distribution nodes are not supported by CEv4 and have been removed. An alternative is to use the new random calculations from section Functions & Operators.

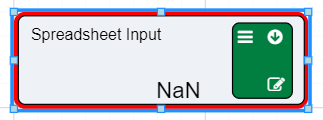

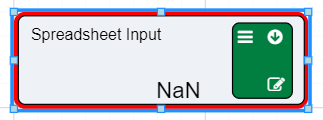

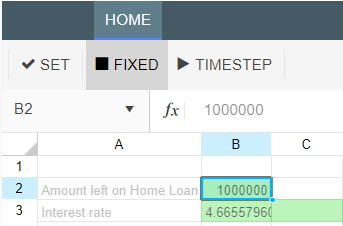

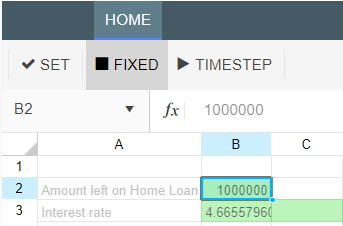

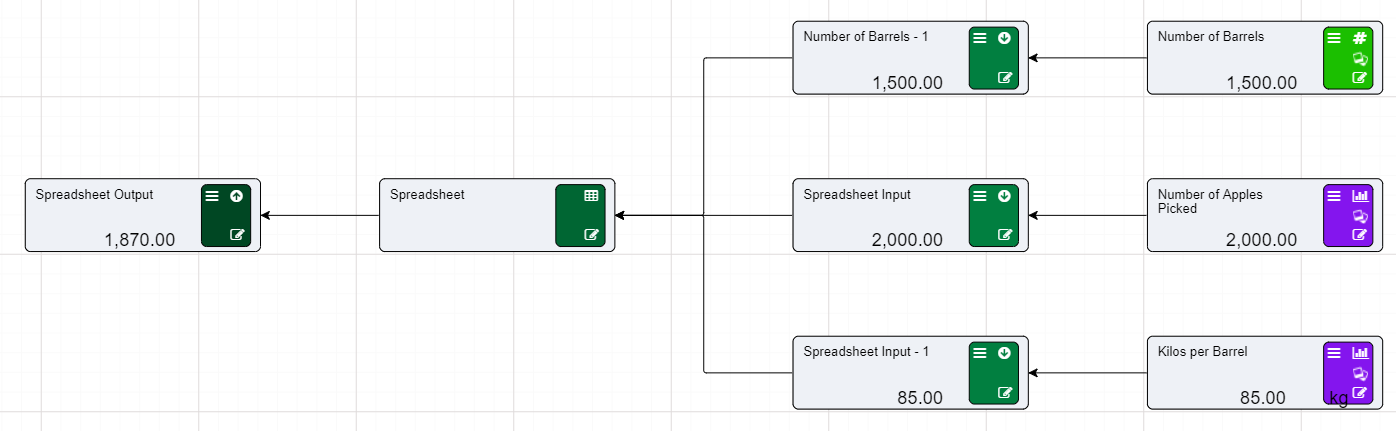

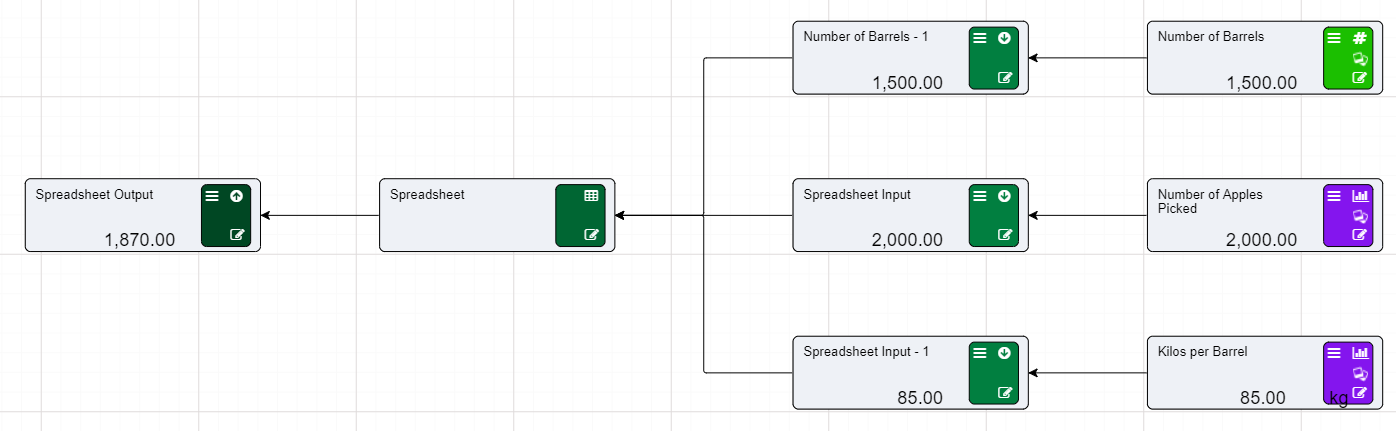

Spreadsheet Nodes/Spreadsheet Input Nodes/Spreadsheet Output Nodes are not supported by CEv4. If your CEv3 model contains Spreadsheet Nodes, you will need to replace these with other node types. If your spreadsheet nodes contain only numbers (i.e. have no formulas), consider using Asset Nodes, Numeric Nodes, Timeseries Nodes or Datasource Nodes as a replacement. If your spreadsheet nodes contain model logic (i.e. have formulas), you will need to extract that logic into calculation nodes.

CEv4 implements a few changes in syntax to enforce a stricter standard when writing calculations. If your calculations work in CEv3 but error in CEv4, check this section for known changes in syntax in CEv4.

CEv3 treats any text in between commas in a function call as a string and supports the following call:

datasource([Cost Data],value,average,true,0, Item, test item)In CEv4 this throws an invalid syntax error, and would require the user to put quotes around the parameter to succeed as per below:

datasource([Cost Data],value,average,true,0, Item, "test item")This will happen for all non number/letter characters present in these parameters. This will mostly affect datasource calls as the filtering function is free text.

CEv3 accepts node names in calculations with leading and trailing spaces.

For example, the nodes “My Value” and “My other value” could be called in a calculation as:

[ My value] + [My other value ]In CEv4 the matching is stricter and will not accept this:

[My value] + [My other value]In CEv3, it is possible to reference single-word node names in calculations with and without using square brackets. For example, a node called “MyValue” can be referred to as follows:

MyValue + 3This is not supported in CEv4. Square brackets are required when referencing nodes in calculations as follows:

[MyValue] + 3Some of the new functions for CEv4 are dynamic, in that they dynamically build their inputs based on the connected inputs

rather than having to fix the inputs in the calculation string. This is useful for building components, or knowing there may be new nodes in the future.

Functions that support dynamic inputs will be labelled as such in the documentation. To use them, simply leave the calculation with no parameters (e.g. array()).

The order nodes are laid out on the page matters for dynamic functions, such as node groups and arrays. When referencing nodes by index, the order the nodes are listed is determined from their positions on the driver model canvas - first by the y-axis, then by the x-axis.

Some of the special calculations (e.g. those that hold multiple values, such as arrays, component inputs or python nodes) don’t display the individual values like in calculation engine v3. These cannot be used directly in calculations and must be called upon by their helper nodes.

The calculations in this section are deprecated and only available when using Calculation Engine v3.

This functionality is only supported in Calculation Engine v3.

| Function | Notes |

|---|---|

optimise(numParticles, maxIterations, [evaluationnode], targetvalue, [first_input], first_input_lower_bound, first_input_upper_bound, ... to max of 5 inputs) |

Optimises a node, altering inputs until the target value is reached |

optimiseerror([optimise node]) |

Returns the error, ie how far from the target value was reached |

optimiseInput([optimise node], [Input node]) |

Returns the final input value from the optimisation |

minimise(numParticles, maxIterations, [evaluationnode], [first_input], first_input_lower_bound, first_input_upper_bound, ... to max of 5 inputs) |

Alters inputs until the minimal value of the result node is reached |

minimiseInput([minimise node], [Input node]) |

Returns the final input value from the minimisation |

maximise(numParticles, maxIterations, [evaluationnode], [first_input], first_input_lower_bound, first_input_upper_bound, ... to max of 5 inputs) |

Alters inputs until the minimal value of the result node is reached |

maximiseInput([maximise node], [Input node]) |

Returns the final input value from the maximisation |

This functionality is only supported in Calculation Engine v3.

The Optimiser functionality can be used as an option to handle Circular References in Value Driver Models.

You can use the Optimiser to in effect perform circular calculations if there is no mathematical way of solving the problem using standard nodes (within the current time period). Take the example below.

There is a circular reference in that the donation to charity is based on the after tax profit, but the profit also includes the donation to charity as a component of revenue - other expenses - charity.

The design pattern in Driver Models looks as follows:

Lets step through this node by node:

| Node | Description |

|---|---|

| Tax | A global node, used only as the tax rate for the profit calculations |